Raja Koduri: 'Game Developers Have More Juice Than They Take Advantage Of'

Posted on October 22, 2016

Part 1 of our interview with AMD's RTG SVP & Chief Architect went live earlier this week, where Raja Koduri talked about shader intrinsic functions that eliminate abstraction layers between hardware and software. In this second and final part of our discussion, we continue on the subject of hardware advancements and limitations of Moore's law, the burden on software to optimize performance to meet hardware capabilities, and GPUOpen.

The conversation started with GPUOpen and new, low-level APIs – DirectX 12 and Vulkan, mainly – which were a key point of discussion during our recent Battlefield 1 benchmark. Koduri emphasized that these low-overhead APIs kick-started an internal effort to open the black box that is the GPU, and begin the process of removing “black magic” (read: abstraction layers) from the game-to-GPU pipeline. The effort was spearheaded by Mantle, now subsumed by Vulkan, and has continued through GPUOpen.

What is GPUOpen?

We figured it'd make sense to recap the very basics, since GPUOpen has only been in the spotlight since around the time of the Polaris launch. The effort began because AMD saw the imminent arrival of Vulkan and DirectX 12 and realized that the burden of optimization would shift heavily to development houses. Removing abstraction layers lets a game's code reach the GPU more directly (minus a couple of layers that remain for compilers), but that doesn't mean it instantly runs better. It's not a free gain to just “toggle” DirectX 12, as we've talked about in the past.

Now, with the driver becoming less critical to game performance (but still critical), developers must pick up where the IHVs left off. ISVs, by and large, have less experience with memory management – now handled engine-side with Dx12 and Vulkan – and with shader assignment, CU reservation and allocation, and on-die component usage. These types of performance optimizations normally happen at the hands of the IHV (that's why drivers are so important), which also means nVidia, AMD, and Intel have tremendous internal experience optimizing game titles.
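What “memory management handled engine-side” looks like varies by engine, but one common pattern under Dx12 and Vulkan is to request a few large blocks of GPU memory up front and sub-allocate aligned ranges from them inside the engine. The Python sketch that follows is a hypothetical model of that idea, not any real API: the `LinearHeap` class and its methods are our own names, and it uses the simplest possible scheme (a linear "bump" allocator that is reset once per frame). The 256-byte default alignment matches D3D12's constant-buffer placement alignment but is otherwise an illustrative choice.

```python
from dataclasses import dataclass, field


def align_up(value: int, alignment: int) -> int:
    """Round value up to the next multiple of alignment (must be a power of two)."""
    return (value + alignment - 1) & ~(alignment - 1)


@dataclass
class LinearHeap:
    """Hypothetical engine-side sub-allocator over one large GPU memory block.

    Models the pattern only: one big allocation from the driver, then the
    engine hands out aligned ranges from it and frees them all at once.
    """
    size: int
    offset: int = 0
    allocations: list = field(default_factory=list)

    def allocate(self, nbytes: int, alignment: int = 256) -> int:
        """Return the byte offset of a new aligned range inside the heap."""
        start = align_up(self.offset, alignment)
        if start + nbytes > self.size:
            # With explicit APIs, running out is the engine's problem to solve.
            raise MemoryError("heap exhausted; engine must evict or grow")
        self.offset = start + nbytes
        self.allocations.append((start, nbytes))
        return start

    def reset(self) -> None:
        """Release every range at once, e.g. at the end of a frame."""
        self.offset = 0
        self.allocations.clear()
```

For example, in a 64 MB heap a 1000-byte vertex buffer lands at offset 0, and a following 300-byte constant buffer is pushed to offset 1024 to satisfy alignment. A linear allocator is the easiest case to reason about; real engines typically mix it with pooled or free-list allocators for longer-lived resources.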

This is often done by hand, too. To improve a game's performance on AMD's GCN architecture or nVidia's architectures, the IHV will often manually tune that game's performance profile within the drivers, to the point that certain effects in game may be manually tasked to execute on specific units of the GPU. With Dx12 and Vulkan, a lot of that control is handed to developers.
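To make the Dx11-era approach concrete, here's a minimal Python sketch of a per-game driver profile that swaps a game's shader for a hand-tuned one. Everything in it (the `APP_PROFILES` table, `submit_shader`, the executable name, keying shaders by SHA-1 hash) is hypothetical and illustrative; IHVs don't publish how their profiles are implemented. The `low_level_api` flag stands in for the Dx12/Vulkan path, where the thin driver passes the game's shader through untouched.

```python
import hashlib

# Hypothetical driver-side table: (executable, hash of the game's shader) -> hand-tuned replacement.
# Contents are placeholders; no real driver or game is modeled here.
APP_PROFILES = {
    ("examplegame.exe", hashlib.sha1(b"slow_shadow_shader").hexdigest()): b"tuned_shadow_shader",
}


def submit_shader(exe_name: str, shader: bytes, low_level_api: bool) -> bytes:
    """Return the shader bytecode that actually reaches the GPU."""
    if low_level_api:
        # Dx12/Vulkan-style path: whatever the game passes goes to the hardware.
        return shader
    key = (exe_name, hashlib.sha1(shader).hexdigest())
    # Dx11-style path: substitute the IHV's tuned shader if a profile matches,
    # otherwise fall back to the game's original shader.
    return APP_PROFILES.get(key, shader)
```

The point of the sketch is the asymmetry: on the older path the IHV can quietly fix a game's inefficiency, while on the low-level path that opportunity disappears unless the developer makes the same change in the engine.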

So, again, low-level APIs are not a “free” performance boost.

This bleeds into GPUOpen. AMD wants to assist developers in taking (some of) the reins for game-GPU optimization. Asked for an introduction to GPUOpen, Koduri told us:

“To get the best performance out of the GPU, the best practices, the best techniques to render shadows, do lighting, draw trees, whatever – there are different ways to do that. But what is the best way to do that? We figured out that that value add kind of moves into engines. It's basically in the game engines, and the games themselves, have to figure out [optimal techniques with new APIs]. They have to do more heavy lifting, figuring out what's the most optimal thing to do.

“The drivers themselves have become very thin. I can't do something super special inside the driver to work around a game's inefficiency and make it better. And we used to do that in Dx11 and before, where when we focus on a particular game and we find that the game isn't doing the most efficient thing for our hardware, we used to have application profiles for each application. You could exactly draw the same thing if you change the particular shaders that they have to something else. We did manual optimization in the drivers. With these low overhead APIs, we can't actually – we don't touch anything, it's just the API, whatever the game passes, it goes to the hardware. There's nothing that we do.

“We have a lot of knowledge in optimization inside AMD, and so do our competitors, so how do we get all of that knowledge easily accessible to the game developers? We have lots of interesting libraries and tools inside AMD. Let's make it accessible to everyone. Let's invite developers to contribute as well, and build this ecosystem of libraries, middleware, tools, and all, that are completely open and would work on not just AMD hardware, but on other people's hardware. The goal is to make every game and every VR experience get the best out of the hardware. We started this portal with that vision and goal, and we had a huge collection of libraries that we [put out]. It's got good traction. It also became a good portal for developers to share best practices. Recently we had nice blogs [...] sharing their techniques and all. More often than not, these blogs have links to source code as well.”

The portal referenced is GPUOpen.com, for anyone who'd like to check it out.

From here, our discussion moved on to software performing the bulk of the work with regard to optimization and performance. If we had perfect compression, perfect software practices, you'd be able to run high-fidelity games on much lower-end GPUs and CPUs. None of it's perfect, obviously, so there has to be more communication between ISVs and IHVs on creating high-performing engines and game platforms.

Koduri continued:

“Software is more than 50%, if not higher, portion of the performance that you see on a system. The GPU – yeah, we have transistors that kind of wiggle around and do five-and-a-half TFLOPS or something, but if they're not appropriately scheduled and appropriately used by these software techniques, then they're wasted. So that was the intent, and also tools that make developers productive in debugging their quality issues or performance issues.

“What we noticed is that we collectively, as an industry, haven't done a good job at providing a consistent set of tools. Frankly, if I am a developer, I don't want to be learning one set of tools for nVidia, one set of tools for AMD, another set of tools for Intel, another set of tools when I get onto the game console. What ends up happening is they don't use anybody's tools. They end up relying on printf debugging. That's not a good place to be.

“That was one of our goals with GPUOpen – let me put out our tools as well, with full source code. We want to encourage people to help us get those tools working on other hardware as well. Now, we're not married to our tools. We are actually quite open to using other people's tools as well. If some other company wants to contribute a tool and put it out there in the open, we'd be more than happy to pitch in and get those tools working. [...] Where we are going to be in 40 years is amazing, but if you just use today's software and hardware, the performance we need to be able to support a 16k x 16k headset at 120Hz is one million times more to get to photo realism. You're not going to get a million times more with Moore's law.”
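For a sense of scale, here's the raw pixel-throughput arithmetic behind a 16,000 x 16,000 display at 120Hz. Koduri doesn't state what “today” means as a baseline, so the 1920 x 1080 at 60Hz comparison is our assumption, and we treat the headset as a single 16,000 x 16,000 surface:

```latex
\begin{aligned}
16{,}000 \times 16{,}000 \times 120\ \text{Hz} &= 3.072 \times 10^{10}\ \text{pixels/s} \\
1920 \times 1080 \times 60\ \text{Hz} &\approx 1.244 \times 10^{8}\ \text{pixels/s} \\
\frac{3.072 \times 10^{10}}{1.244 \times 10^{8}} &\approx 247
\end{aligned}
```

Under that assumption, resolution and refresh rate alone account for roughly 250x of the gap; the remaining ~4,000x of a one-million-times target (10^6 / 247) would have to come from the per-pixel cost of photo-realistic rendering.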


Koduri passionately explained that software frameworks need an overhaul to unlock far more of the hardware's performance, using VR as the launching point for that performance demand:

“My goal is: we need to get there before I am dead or retired, and we're not going to get there by doing what we're doing, because the entire software framework needs to change. The game developers need to be one thousand times more productive than they are today for them to give us the one million X [gain]. Hardware will move forward, but it may be 4x faster in 40 years, or maybe 8x faster in different segments. But not a million times faster. But software can make it a million x faster. We've seen the amount of wasted computation frame-to-frame in a scene is quite high. But we need different ways of thinking to generate these pixels. This whole VR thing has sparked a whole bunch of fundamental research back on graphics.

“You see frame-to-frame – each frame is so complex, and at 60Hz, regenerating the frame again, we say, 'what is the difference between this frame and the next frame? Hmm, this 100 pixels, but I redrew the entire thing.'”
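Koduri's “100 pixels, but I redrew the entire thing” example is easy to quantify. The minimal Python sketch that follows (the `changed_region` name and the frame representation as nested lists of RGB tuples are our assumptions) counts how many pixels differ between two frames and returns the bounding box of the change. Note that this is an after-the-fact measurement; Koduri's argument is that the engine already knows what moved and could skip both the diff and the full redraw.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]
Frame = List[List[Pixel]]  # frame[row][col] -> (r, g, b)
Box = Tuple[int, int, int, int]  # (row_min, row_max, col_min, col_max), inclusive


def changed_region(prev: Frame, curr: Frame) -> Tuple[int, Optional[Box]]:
    """Count pixels that differ between two equal-sized frames and bound them.

    Returns (0, None) when the frames are identical.
    """
    count = 0
    row_min = col_min = None
    row_max = col_max = -1
    for r, (row_a, row_b) in enumerate(zip(prev, curr)):
        for c, (a, b) in enumerate(zip(row_a, row_b)):
            if a != b:
                count += 1
                # Rows are scanned in order, so the first hit is the top edge.
                row_min = r if row_min is None else row_min
                row_max = r
                col_min = c if col_min is None else min(col_min, c)
                col_max = max(col_max, c)
    if count == 0:
        return 0, None
    return count, (row_min, row_max, col_min, col_max)
```

If a 10 x 10 patch changes in an otherwise static 1920 x 1080 frame, that's 100 of 2,073,600 pixels (under 0.005%), yet a conventional renderer regenerates all of them at 60Hz.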

Software Knows the Context, and Game Developers Aren't Leveraging It

Koduri's explanation continued into software limitations and contextualization of render tasks. The point that Koduri is making, if it's not already clear, is that the performance gains desired by his team are not feasible strictly from a hardware side. AMD or nVidia could architect new GPUs at the pace they're going now, and that will not create – in Koduri's eyes – a “one million X” increase in processing capabilities by 2056.

As for why Koduri wants that specific gain, it has to do with ultra-realistic VR (or gaming in general), where we are confronted by photo-realism. This could be done with photogrammetry, or with techniques that aren't even invented yet, but the idea is “photo-realism” in 16,000 x 16,000 resolution spaces. That's Koduri's goal, and the RTG Chief Architect wants it in his lifetime.

“There's so much correlation between neighboring pixels or texels, so yeah, DCC [Delta Color Compression] is one of the things [that helps]. But there are things like that that I can do, or hardware can do, but imagine the kind of things that the software can do because they know the context of the scene. They know what's changing, what's not changing. They know what can be reused, what can't be reused. I can do certain things for them, but I'll be guessing. They don't need to guess. They know what's going to happen next.

“One of the classic examples is that – you see in many games on low-level hardware when you have a big explosion, everything stutters. I say, I don't know that there's an explosion coming in the hardware, but the game developer knows that there's an explosion coming in the hardware. Say they have a mechanism to hint me in the hardware or the drivers, I can boost to the max clock if I have some clock headroom just for two frames or three frames or four frames. That won't make me go beyond my thermal budget or something. Things like that, when the game dev starts thinking in those terms, they have more juice available in hardware than they take advantage of.

“For most of my game, I don't need to be running at DPM 7. When I need it, like this explosion, I need you [hardware] to be there and not all subscribed already and not running at peak temperatures. That's one example when developers start thinking performance.”
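Koduri's explosion example describes a hint mechanism that, as far as the interview indicates, doesn't exist yet. Purely as an illustration of the idea, here's a Python sketch of a hypothetical driver-side governor: the game calls `hint_heavy_frames` a few frames before a known spike, and the governor boosts to its max clock for that window only if it still has thermal headroom. All class names, methods, and clock and temperature numbers are invented for the example and don't model any real driver or AMD's DPM states.

```python
from dataclasses import dataclass


@dataclass
class BoostGovernor:
    """Hypothetical governor that honors a game's 'heavy frames ahead' hint."""
    base_clock_mhz: int
    max_clock_mhz: int
    temp_c: float
    temp_limit_c: float
    boost_frames_left: int = 0

    def hint_heavy_frames(self, frames: int) -> None:
        # The game knows an explosion is coming; request a short boost window.
        self.boost_frames_left = max(self.boost_frames_left, frames)

    def clock_for_next_frame(self) -> int:
        # Boost only inside a hinted window and only with thermal headroom left,
        # so a short burst doesn't push the GPU past its thermal budget.
        boosting = self.boost_frames_left > 0 and self.temp_c < self.temp_limit_c
        if self.boost_frames_left > 0:
            self.boost_frames_left -= 1
        return self.max_clock_mhz if boosting else self.base_clock_mhz
```

With a hint of three frames and headroom available, the governor returns the max clock for exactly three frames and then drops back to base; if the GPU is already at its temperature limit, the hint is ignored. The design choice mirrors Koduri's point: the game runs at a lower state most of the time so the headroom is there when it's actually needed.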

The Boltzmann Initiative

We asked about CUDA code execution on AMD hardware, and if there are means to make this possible in an efficient fashion. Our team uses software like Adobe Premiere, Blender, and 3D render/animation software for video production. As part of this process, we must optimize our hardware configurations to work best with whatever OpenCL or CUDA acceleration the software supports. Oftentimes, we need a little bit of both. Using an AMD device is generally not beneficial in these scenarios, as you'd have to rely on non-accelerated (or poorly accelerated) rendering. Koduri noted that this is being addressed:

“The Boltzmann Initiative is related to our GPU compute. The industry has a long history of GPU Compute APIs. OpenCL is the standard for computing, then there are proprietary initiatives like CUDA and others. The holy grail for GPU computing always, if you talk to the programmers, is that they'd like GPUs to be programmed directly in all of the current tool chains that they use. C, C++, Python, Perl, whatever languages they use to do their daily work. That's really what they want to do. 'Hey, I have some compute intensive tasks that can benefit from a GPU. I should be able to use my language, not figure out how to learn some new language like OpenCL or something like that.'

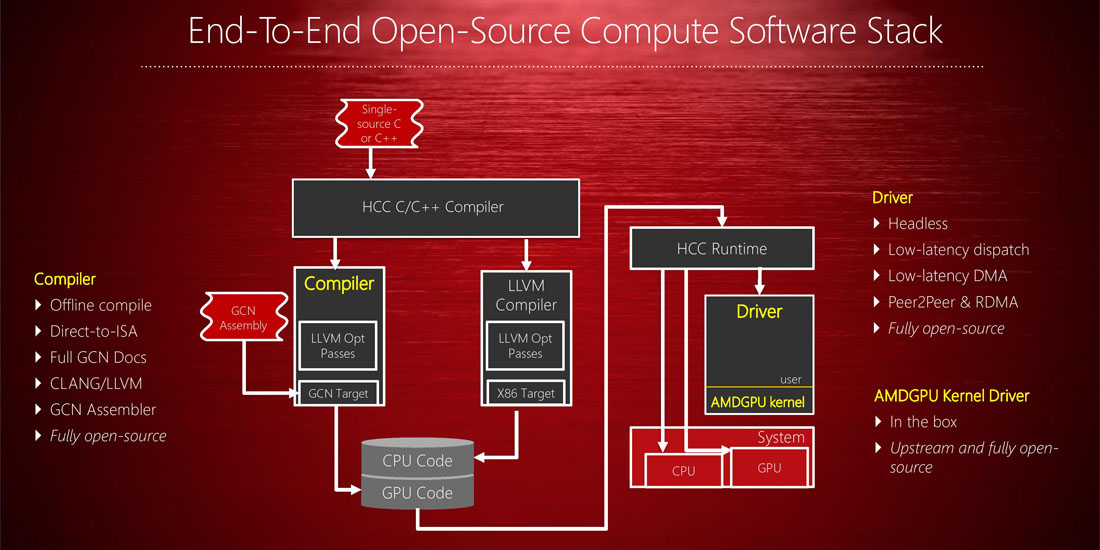

“[...] As we were working toward that goal, what we discovered was the architecture of OpenCL and graphics APIs doesn't suit well for supporting all these random languages and scripting languages and all. And second, most of these successful language frameworks, whether it's Perl, Python, and all, are all based on open source frameworks. It is really hard to integrate a closed runtime framework like graphics drivers into all the stacks. That was the genesis for the Boltzmann Initiative, where we said, 'what if we do a compute stack from top-to-bottom, including the kernel mode drivers, [all] completely open, and [structure the stack so] that these frameworks can integrate to any level of abstraction they choose.'

“Some frameworks want to go all the way to machine, directly to machine code. Boltzmann allows that. Some frameworks want to stay one level above – like Vulkan level. Some frameworks want to go to some high level language like OpenCL or C++ extensions, or some other libraries that we have sitting on top of this. We allow that. Boltzmann is the first open compute stack for GPUs. It is one of the key steps we took in my goal of opening up the GPU. The GPU has been a black box for 20 years now. A black box abstracted by very thick APIs, very thick runtimes, very thick voodoo magic. We are trying to get the voodoo magic out of the GPU software stack, and we believe there – there is still voodoo magic in transistors and how we assemble them, and in game engines, compute engines, libraries, the middleware. Voodoo magic in the driver middle-layers is not beneficial to anybody, because it's preventing the widespread adoption of GPUs.”

We also spoke with Koduri about texture compression and his work with DxTC, now 19 years in the past. This discussion led to the Radeon Pro SSG, which Koduri thinks is a potential future for consumer GPUs.

“[DXTC] was 19 years ago. Oh my god [laughs]. DxTC was one of the first standardized compression formats, and it's still kind of supported in almost all hardware. Even on mobile. Compression has evolved since, but the fundamental construct for GPU-based texture compression [on] hardware hasn't evolved that radically. It improved in quality, we got more interesting data types the compression is supporting. The first instantiation of DxTC was good for RGB texture maps, but then we got really interesting other data types like normal maps, light maps, distance maps – they changed a lot. We evolved. ATi and AMD contributed to the evolution of DxTC to next generation formats. All of these steps are pretty evolutionary from a compression standpoint.

“Every developer's dream would be, 'hey, if you can sample a texture straight out of JPEG which has much higher compression rates,' and you could even get 1000:1 compression depending on the content. There's variable compression rate stuff. If you kind of understand hardware mechanics for compression and decompression and all, that sounds good on paper but it costs... just the decompression hardware to go at the rate at which the DxTC compressor goes, it'll be a chip that's bigger than the entire GPU just to do the decompression. The reason why we don't do it is not because we don't know how – we can, it just kind of costs an arm and a leg to do that. I think there is a happier medium in collaboration with the developers that is in between – between giving you the benefits of JPEG-like compression and the speed of DXT-class algorithms.

“How do you connect them in a better way so that all my assets from my authoring time to your download time to coming onto the computer to into the GPU memory can stay compressed all the way to the GPU memory? The whole experience speeds up. Hardware got so much faster, but man, the game loading time still is the same from the last 15 years. I mean, if you have an SSD or something... but loading time is – with [an] increasingly attention-deficit population, on devices like [indicates phone] where things start instantly, I think games need to start instantly. I think there is a happy medium there. [The] industry [working] together [to] solve it in the next 40 years – 'how do we make these terabyte, triple-A game titles just load instantly?'

“I'm glad you brought up SSG, because that's one of the driving factors. Even though we positioned it for professional graphics and others, you can see the path of the usefulness for gaming users and other stuff.”
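Koduri's JPEG-versus-DxTC comparison comes down to fixed-rate versus variable-rate compression. DXT1 (called BC1 in Direct3D 10 and later) stores every 4x4 texel block in exactly 8 bytes: two RGB565 endpoint colors plus sixteen 2-bit palette indices, or 6:1 against 24-bit RGB. Because every block is the same size, hardware can compute the address of any texel directly and decode it with a few shifts and adds; JPEG's variable-length entropy coding offers no such random access, which is part of why decoding it at texture-sampling rates would be so expensive. The Python sketch that follows shows that addressing and per-texel decode. The function names are ours, and real decoders differ slightly in how they round the interpolated colors.

```python
import struct


def bc1_block_offset(x: int, y: int, width: int) -> int:
    """Byte offset of the 4x4 BC1/DXT1 block containing texel (x, y)."""
    blocks_per_row = (width + 3) // 4
    return ((y // 4) * blocks_per_row + (x // 4)) * 8  # every block is exactly 8 bytes


def rgb565_to_rgb888(c: int):
    """Expand a packed 5:6:5 color to 8 bits per channel."""
    r, g, b = (c >> 11) & 0x1F, (c >> 5) & 0x3F, c & 0x1F
    return (r * 255 // 31, g * 255 // 63, b * 255 // 31)


def bc1_decode_texel(block: bytes, x: int, y: int):
    """Decode texel (x, y), each in 0..3, from one 8-byte BC1 block."""
    c0, c1, indices = struct.unpack("<HHI", block)  # little-endian: 2 + 2 + 4 bytes
    p0, p1 = rgb565_to_rgb888(c0), rgb565_to_rgb888(c1)
    if c0 > c1:
        # Four-color mode: two colors interpolated at 1/3 and 2/3.
        p2 = tuple((2 * a + b) // 3 for a, b in zip(p0, p1))
        p3 = tuple((a + 2 * b) // 3 for a, b in zip(p0, p1))
    else:
        # Three-color mode: midpoint plus black (transparent in the 1-bit-alpha variant).
        p2 = tuple((a + b) // 2 for a, b in zip(p0, p1))
        p3 = (0, 0, 0)
    # Texel i = 4*y + x uses bits [2i, 2i+1], least significant first.
    idx = (indices >> (2 * (4 * y + x))) & 0b11
    return (p0, p1, p2, p3)[idx]
```

For a 256-texel-wide texture, texel (5, 9) lives in block column 1, block row 2, so its 8 bytes start at offset (2 * 64 + 1) * 8 = 1032, with no need to decode anything before it.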


Editorial: Steve “Lelldorianx” Burke

Video: Keegan “HornetSting” Gallick