Battlefield 1 CPU Benchmark (Dx11 & Dx12) - i7 vs. i5, i3, FX-8370, X4 845


This benchmark took a while to complete. We first started benchmarking CPUs with Battlefield 1 just after our GPU content was published, but ran into questions that took some back-and-forth with AMD to sort out. Some of that conversation will be recapped here.

Our Battlefield 1 CPU benchmark is finally complete. We tested most of the active Skylake lineup (i7-6700K down to i3-6300), the X99 i7-5930K, the FX-8370 and FX-8320E, and some of AMD's APU and Athlon parts. Unfortunately, we ran out of activations before getting to Haswell or other last-gen CPUs, but we may revisit those in the future. Our next goal is to determine what impact, if any, memory speed has on BF1 performance.

Back on track, though: today's feature piece aims to determine the point at which a CPU begins bottlenecking performance elsewhere in the system when playing Battlefield 1. Our previous two content pieces related to Battlefield 1 are linked below:

The video form of this article is embedded below. You may continue reading if preferred or for additional depth not provided in the video.

Test Methodology

We first detailed our Battlefield 1 testing methodology in the GPU benchmark article. Much of that has been redeployed here, the exception being the focus on CPUs. Our Battlefield 1 CPU benchmarking used three different types of tests for final validation, one of which partially mirrors AMD's internal BF1 CPU test methodology so that we could check our results against theirs.

First, some re-printed text from our BF1 GPU test methodology, then we'll define the additions:

The above video shows some of our gameplay while learning about Battlefield 1's settings. This includes our benchmark course (a simple walk through Avanti Savoia - Mission 1), our testing in Argonne Forest to get a quick look at FPS during 64-player multiplayer matches, and a brief tab through the graphics settings.

We tested using our GPU test bench, detailed in the table below. Our thanks to supporting hardware vendors for supplying some of the test components.

Game settings were manually controlled for the DUT (device under test). All games were run at the presets defined in their respective charts. Our test courses are all manually conducted. For the bulk data below, the same easily repeatable test was conducted a minimum of eight times per device, per setting, per API. This ensures data integrity and helps to eliminate potential outliers. In the event of a performance anomaly, we conduct additional test passes until we understand what's going on. We disable G-Sync in NVIDIA's control panel for testing (and FreeSync for AMD, where relevant). Note that these results were all gathered with the newest drivers and game patches, and may not be comparable to previous test results. Also note that results should not necessarily be compared directly between reviewers, as testing methodology may differ; the measurement tool alone can have a major impact.

We run our tests with PresentMon from the command line; PresentMon is a tool built by Microsoft and Intel that hooks into the operating system to accurately capture framerate and frametime data. Our team has built a custom, in-house Python script to extract average FPS, 1% low FPS, and 0.1% low FPS data (effectively 99th/99.9th percentile metrics). Each test pass runs for 30 seconds per repetition, with a minimum of 3 repetitions. This provides an easily replicated test course for accurate results between cards, vendors, and settings. You may learn about our 1% low and 0.1% low testing methodology here:

Or in text form here.
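The in-house script itself isn't published here, so the following is only a minimal sketch, assuming the "effectively 99/99.9 percentile" definition above: average FPS as frames divided by total time, and the 1% and 0.1% lows as the 99th- and 99.9th-percentile frametimes converted to FPS. The function names are hypothetical, and the nearest-rank percentile is one reasonable choice among several, not necessarily the one GN uses.

```python
import math
from statistics import fmean


def percentile_nearest_rank(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of samples <= it."""
    ordered = sorted(values)
    # Subtract a tiny epsilon so float noise (e.g. 99.9 / 100) can't bump the rank up.
    rank = max(1, math.ceil(pct * len(ordered) / 100 - 1e-9))
    return ordered[rank - 1]


def summarize_frametimes(frametimes_ms):
    """Return (avg_fps, low_1pct_fps, low_01pct_fps) from per-frame times in ms."""
    if not frametimes_ms:
        raise ValueError("need at least one frame")
    # Average FPS = frames / seconds = 1000 / mean frametime in ms.
    avg_fps = 1000.0 / fmean(frametimes_ms)
    # Slow frames have large frametimes, so the lows come from the upper percentiles.
    low_1 = 1000.0 / percentile_nearest_rank(frametimes_ms, 99)
    low_01 = 1000.0 / percentile_nearest_rank(frametimes_ms, 99.9)
    return avg_fps, low_1, low_01


# Hypothetical capture: 985 smooth frames at 5 ms plus 15 hitches at 20 ms.
sample = [5.0] * 985 + [20.0] * 15
avg, low1, low01 = summarize_frametimes(sample)
print(f"AVG {avg:.1f} FPS | 1% low {low1:.1f} | 0.1% low {low01:.1f}")
```

With this made-up capture, the average stays above 190FPS while both lows fall to 50FPS, which is exactly the kind of stutter an average alone would hide.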

Windows 10 64-bit (Anniversary Update) was used for the OS.

Partner cards were used where available and tested for out-of-box performance. Frequencies listed are advertised clock-rates. We tested both DirectX 11 and DirectX 12.

Please note that we use onPresent to measure framerate and frametimes. Reviewers must decide whether to use onPresent or onDisplay when testing with PresentMon. Neither is necessarily correct or incorrect; it comes down to the type of data the reviewer wants to work with and analyze. For us, we look at frames on the Present. Some folks may use onDisplay, which would produce different results (particularly at the low end). Make sure you understand what you're comparing results to if doing so, and also ensure that the same tools are used for analysis. A frame does not necessarily equal a frame between software packages. We trust PresentMon as the immediate future of benchmarking, particularly with its open-source infrastructure built and maintained by Intel and Microsoft.
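In practice, the onPresent/onDisplay choice comes down to which PresentMon CSV column feeds the analysis. A minimal sketch, assuming the column names of PresentMon 1.x-era CSV output (MsBetweenPresents for present-based frametimes, MsBetweenDisplayChange for display-based ones); check your own capture's header, since column names have changed across PresentMon versions. The function name and sample rows are hypothetical.

```python
import csv
import io

# Assumed column names from PresentMon 1.x-era CSV output; verify against your
# own capture header, as later PresentMon releases renamed several columns.
ON_PRESENT = "MsBetweenPresents"
ON_DISPLAY = "MsBetweenDisplayChange"


def load_frametimes(csv_text, column=ON_PRESENT, application=None):
    """Return frametimes in ms from one column of a PresentMon-style CSV.

    If application is given, only rows for that process name are kept.
    """
    frametimes = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if application is not None and row.get("Application") != application:
            continue
        value = float(row[column])
        # Treat a non-positive interval as "no frametime" (the dropped frame in
        # the hypothetical sample below is recorded as 0) and skip it.
        if value > 0:
            frametimes.append(value)
    return frametimes


# Hypothetical three-row capture; the second frame was dropped before display.
SAMPLE = """Application,Dropped,MsBetweenPresents,MsBetweenDisplayChange
bf1.exe,0,6.9,7.0
bf1.exe,1,5.1,0
other.exe,0,16.7,16.7
"""
print(load_frametimes(SAMPLE, ON_PRESENT, "bf1.exe"))
print(load_frametimes(SAMPLE, ON_DISPLAY, "bf1.exe"))
```

The same capture yields two frames for the present-based column but only one for the display-based column, which is one way the two approaches diverge at the low end.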

Also note that we are limited on our activations per game code. We can test 5 hardware components per code within a 24-hour period. We've got three codes, so we can test a total of 15 configurations per 24 hours.

Battlefield 1 has a few critical settings that require tuning for adequate benchmarking. Except where otherwise noted, we disabled GPU memory restrictions for testing; this setting triggers dynamic quality scaling, creating unequal tests. We also set resolution render scale to 100% to match render resolution to display resolution. Field of View was changed to 80 degrees vertical to better fit what a player would use, since the default 55-degree vertical FOV is a little bit silly for competitive FPS players. This impacts FPS and should also be accounted for if attempting to cross-compare results. V-Sync and adaptive sync are disabled. Presets are used for quality, as defined by chart titles. Game performance varies with test location, map, and in-game events. We tested in the Italian Avanti Savoia campaign level for singleplayer, and on Argonne Forest for multiplayer. You can view our test course in the separate video above.
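Because FOV changes what gets rendered, and this article quotes FOV both in vertical degrees and (in the DirectX 12 notes) in horizontal degrees, a conversion helps when cross-comparing results. A minimal sketch using the standard perspective-projection relation hfov = 2·atan(tan(vfov/2)·aspect), assuming square pixels and a 16:9 display; the function names are hypothetical.

```python
import math


def vertical_to_horizontal_fov(vfov_deg, aspect=16 / 9):
    """Horizontal FOV (degrees) equivalent to a vertical FOV at the given aspect ratio."""
    half_v = math.radians(vfov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half_v) * aspect))


def horizontal_to_vertical_fov(hfov_deg, aspect=16 / 9):
    """Inverse conversion: vertical FOV (degrees) from a horizontal FOV."""
    half_h = math.radians(hfov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half_h) / aspect))


for vfov in (55, 80):
    print(f"{vfov} deg vertical ~= {vertical_to_horizontal_fov(vfov):.1f} deg horizontal at 16:9")
```

At 16:9, the 55-degree default works out to roughly 86 degrees horizontal and the 80-degree setting to roughly 112 degrees.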

The campaign was used as the primary test platform, but we tested multiplayer to determine the scaling between singleplayer and multiplayer. Multiplayer is not a reliable test platform given our lack of control (pre-launch) over servers, tick rate, and network interference with testing. Thankfully, the two are actually pretty comparable in performance. FPS depends heavily on the map, as always, but even 64-player servers are not too abusive on the GPU, assuming the usual map arrangement where you never see everyone at once.

Note that we used the console command gametime.maxvariablefps 0 to disable the framerate cap in applicable test cases. This removes Battlefield 1's 200FPS cap.

In addition to the above (previous testing methodology), note that we have updated to the latest driver sets available at the time of test execution: nVidia 375.70 and AMD 16.10.3. Both have since been superseded by hotfixes, but the Battlefield 1 optimizations in those hotfixes are the same as in the drivers we tested.

We conduct 8 test passes per CPU. The first two are discarded, as Battlefield 1 still suffers sporadic asset pop-in during early passes and produces chaotic performance as a result (see the video of this benchmark for an example).

Tests were conducted using Ultra settings at 1080p with two GPUs. The first was a GTX 1080 FTW Hybrid, used to place emphasis on CPU performance. Interestingly, the GTX 1080 is powerful enough that running a lower resolution alone is enough to demonstrate CPU scaling, so we can leave the graphics options realistically high. This is new with this generation of GPUs. The second card was an RX 480 8GB Gaming X, which gives more of a middle-of-the-road look at things.

The point of running a lower resolution is to show scaling performance. As you increase resolution, load shifts more heavily onto the pixel pipeline and the GPU's ability to draw and sample all of those pixels, which obscures CPU performance. That said, there is a real-world takeaway here: when running GPU-demanding settings (with CPU-intensive options reduced), it would be possible to pair lower-end CPUs with reasonably high settings.
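The reasoning above can be sketched with a deliberately simplified bottleneck model, in which the delivered framerate is capped by whichever of the CPU or GPU is slower. Real pipelines overlap work and are not strictly a min(), and every number and name below is hypothetical, chosen only to show why CPU differences shrink as the GPU's ceiling drops.

```python
# Hypothetical framerate ceilings for illustration only; not measured data.
CPU_CEILING_FPS = {"faster CPU": 150.0, "slower CPU": 110.0}
GPU_CEILING_FPS = {"low resolution": 200.0, "high resolution": 60.0}


def delivered_fps(cpu_fps, gpu_fps):
    """Simplified bottleneck model: the slower of the two stages caps the framerate."""
    return min(cpu_fps, gpu_fps)


def cpu_gap(resolution):
    """FPS gap between the two hypothetical CPUs at the given GPU load."""
    gpu_fps = GPU_CEILING_FPS[resolution]
    fast = delivered_fps(CPU_CEILING_FPS["faster CPU"], gpu_fps)
    slow = delivered_fps(CPU_CEILING_FPS["slower CPU"], gpu_fps)
    return fast - slow


for res in GPU_CEILING_FPS:
    print(f"{res}: CPU gap = {cpu_gap(res):.0f} FPS")
```

At the low resolution the 40FPS gap between CPUs is fully exposed; at the high resolution both CPUs are held to the GPU's 60FPS and the gap disappears.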

DirectX 12 performance was measured using the onPresent variable from PresentMon. We extract 1% low and 0.1% low metrics using a Python script that GamersNexus created. Game graphics are configured to Ultra, 96° horizontal FOV, GPU memory restriction off, and VSync off.

Our other testing methodology, which uses a 3-minute test in the Through Mud & Blood map, is detailed with our second set of test results further down the page.

CPUs Tested

Intel CPUs used:

Intel i7-5930K (EOL) w/ X99 Classified & DDR4-2400

Intel i7-6700K ($330) w/ Z170 SLI Plus & DDR4-2400

Intel i5-6600K ($237) w/ Z170 SLI Plus & DDR4-2400

Intel i5-6400 ($182) w/ Z170 SLI Plus & DDR4-2400

Intel i3-6300 ($150) w/ Z170 SLI Plus & DDR4-2400

We tested the 6700K with hyperthreading enabled, then again with it disabled, to better understand HT's impact in BF1. Memory was Corsair Vengeance LPX.

Intel motherboards used:

AMD CPUs used:

Note that we are using an A10-7870K as a sort-of 880K "surrogate," as the performance is more or less identical; the 880K simply disables the IGP and sells for a lower price. The 880K does run about 100MHz faster, but its performance should be effectively equal to the 7870K's.

AMD FX-8370 ($185) w/ ASUS 970 Pro Gaming Aura & DDR3-2133

AMD FX-8320E ($125) w/ ASUS 970 Pro Gaming Aura & DDR3-2133

AMD A10-7870K ($145) (880K equivalent) w/ ASUS A88X Pro Deluxe & DDR3-2133

AMD Athlon X4 845 ($65) w/ ASUS A88X Pro Deluxe & DDR3-2133

Memory was HyperX Savage. AMD motherboards used:

Why did you use these parts?

These are the most recent and relevant CPUs and motherboards that we presently have access to in our lab. If a component is missing, it's either because we don't have it or we didn't have time to test it in addition to our tests of the latest components.


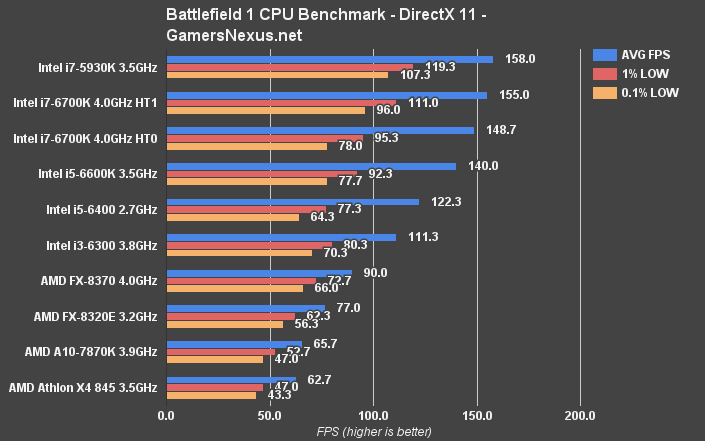

Battlefield 1 CPU Benchmark - DirectX 11 (i7-6700K vs. i5-6600K, i3, FX-8370, X4 845)

We're starting with DirectX 11 -- Dx12 will come next.

The i7-5930K at stock frequencies hit a framerate of about 158FPS AVG, with lows well above 100FPS. The 6700K with hyperthreading enabled is next in line at 155FPS AVG, with 1% lows of 111 and 0.1% lows of 96. There's not a huge gap here, and a good amount of that is down to the stock 6700K's higher clock frequency versus the stock 5930K. Interestingly, disabling hyperthreading reduces average FPS by about 4%, to nearly 149FPS, but the lows take the biggest hit: they drop to 95 and 80FPS. This isn't that significant in terms of visible framerate, and you'll still experience the game more or less the same way, but hyperthreading clearly benefits the lows in this test scenario.

Moving down the line, the more mainstream i5-6600K at stock speeds achieves 140FPS AVG, about 15FPS behind the hyperthreaded 6700K. This is where we see CPUs beginning to limit the GTX 1080 FTW Hybrid, which we selected intentionally to demonstrate exactly this type of performance scaling. The 6600K trails the hyperthreaded 6700K by about 10%.

Further down the list, the i5-6400 at 2.7GHz shows the 6600K's frequency advantage, with a disparity of nearly 20FPS between the two i5 CPUs.

Further down, we're met with the i3-6300 at 111FPS, now a full 44FPS behind the flagship Skylake CPU, and the FX-8370 at 90FPS AVG. The 6300's higher frequency helps it keep up with the i5-6400 in the lows.

Frametime pacing is fairly tight on all these devices, though that has a lot to do with the GPU itself; still, the FX-8370 does well to keep its lows within reasonable range of its average, with tighter frametimes than the i5-6400. The FX-8370 is about 65FPS behind the 6700K, followed by the power-saving 8320E (as designated by its E suffix) at 77FPS AVG. The A10-7870K follows this, but note that the 7870K is effectively identical to the Athlon X4 880K; if you own or are considering that CPU, the 7870K's results are a reasonable proxy.

Next, the Athlon X4 845 runs at 63FPS AVG, just a few FPS behind where the 880K would be. Although there's a clear choke point in these CPUs when running on a high-end GPU, one useful takeaway is that the X4 845 performs close enough to the 880K that the price difference could justify picking up the 845 over the 880K.

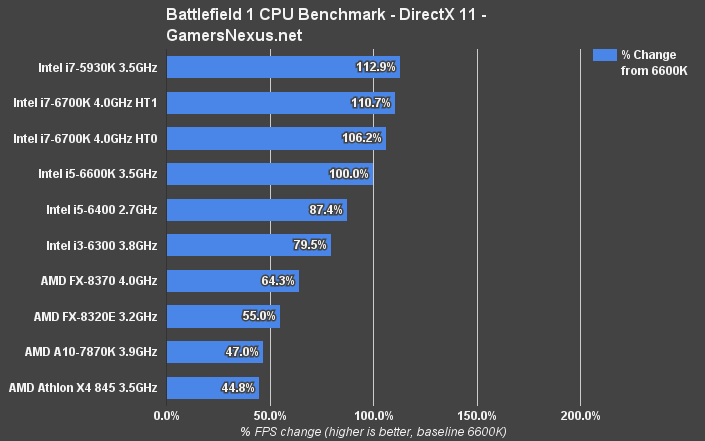

CPU Scaling in Battlefield 1

Let's look at the scaling of all these devices in percentage form for a moment. This chart shows the devices with the i5-6600K as a baseline of 100% performance. This is the important part to note: as stated, we're using a 1080 FTW explicitly to draw out the limits of the CPUs and see where each chokes as we go further down the stack. You'll want to make sure your CPU choice does not limit your GPU choice, but note also that higher-end devices running higher resolutions will, as expected, put more load on the GPU. Many of these differences between the i5 and i7 devices vanish when moving to 4K on the GTX 1080, because now we're shoving more pixels into the pipe for the GPU.

Scaling in DirectX 11 shows the hyperthreaded 6700K about 11% ahead of the i5-6600K (or ~6% ahead with HT off). The FX-8370 is about 36% slower than the i5-6600K. Compared to the FX-8370, the i3-6300 is about 23-24% faster.
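The percentage chart is a simple normalization against a baseline device. A minimal sketch, using the rounded DirectX 11 averages quoted in the text above, so results may differ by a point or so from the chart; the i5-6400 and A10-7870K are left out because the text gives no exact average for them. The names are hypothetical.

```python
# DX11 average FPS as quoted in the text (GTX 1080 FTW Hybrid, 1080p Ultra), rounded.
DX11_AVG_FPS = {
    "i7-5930K": 158,
    "i7-6700K (HT on)": 155,
    "i7-6700K (HT off)": 149,
    "i5-6600K": 140,
    "i3-6300": 111,
    "FX-8370": 90,
    "FX-8320E": 77,
    "Athlon X4 845": 63,
}


def relative_to(baseline, results):
    """Express every result as a percentage of the baseline device's result."""
    base = results[baseline]
    return {name: 100.0 * fps / base for name, fps in results.items()}


for name, pct in relative_to("i5-6600K", DX11_AVG_FPS).items():
    print(f"{name:<20}{pct:6.1f}%")
```

Against the i5-6600K baseline, the hyperthreaded 6700K lands near 111% and the FX-8370 near 64%; rebasing on the FX-8370 puts the i3-6300 near 123%, in line with the figures above.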

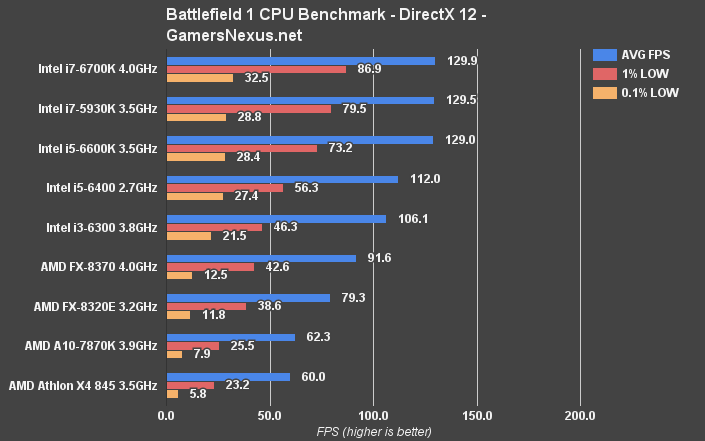

BF1 CPU Benchmark with DirectX 12 - FX-8370 vs. i3-6300, i5-6600K, i7-6700K

DirectX 12 destroys frametime performance in the 1% low and 0.1% low metrics, and posts negative scaling when moving between APIs on Intel CPUs. We see a hit of approximately 25FPS on the i7-6700K and i5-6600K. The FX-8370 increases its average by about 1-2FPS but, like all the other devices, posts worse low-frame performance. The FX-8320E also posts an improvement of about 2-3FPS average.

The 7870K -- nearly an 880K equivalent -- and X4 845 both see slight negative scaling of about 1-3FPS. These chips use a different architecture and run fewer cores, though.

AMD is performing "less bad" overall versus Intel, but they're both performing below what we'd like to see. We have already discussed BF1's questionable optimization in previous posts.

No Dx12 Gains with Our Tests: Exploring AMD's Testing Methodology

We are confident in these results, but were curious about them. We reached out to AMD to ask about their own internal tests for Battlefield 1 and, after a few days of back-and-forth and validating each other's tests, we decided to run some more tests with a lower-end GPU. AMD told us that they were seeing gains as high as 28.5% with an FX-6350 and RX 480, and said that they used a 3-minute test path on the Through Mud and Blood level. Other than this, their settings were pretty similar to ours. Given the information we had, we decided to adopt their methodology for further validation, to see if the results changed.

AMD's tests were conducted on an older build of Battlefield 1, since they had earlier access, and with pre-BF1-optimization drivers for nVidia devices. Still, this wouldn't explain AMD's reported Dx12 scaling gains on the RX 480, though it might for the GTX 1060.

We wouldn't expect it, but it is possible that running the game for a longer period -- like AMD's 3-minute run -- would be enough to begin hitting some sort of draw call or other threshold that you might not encounter with our shorter tests. Given Battlefield 1's already questionable optimization, this seemed worth testing.

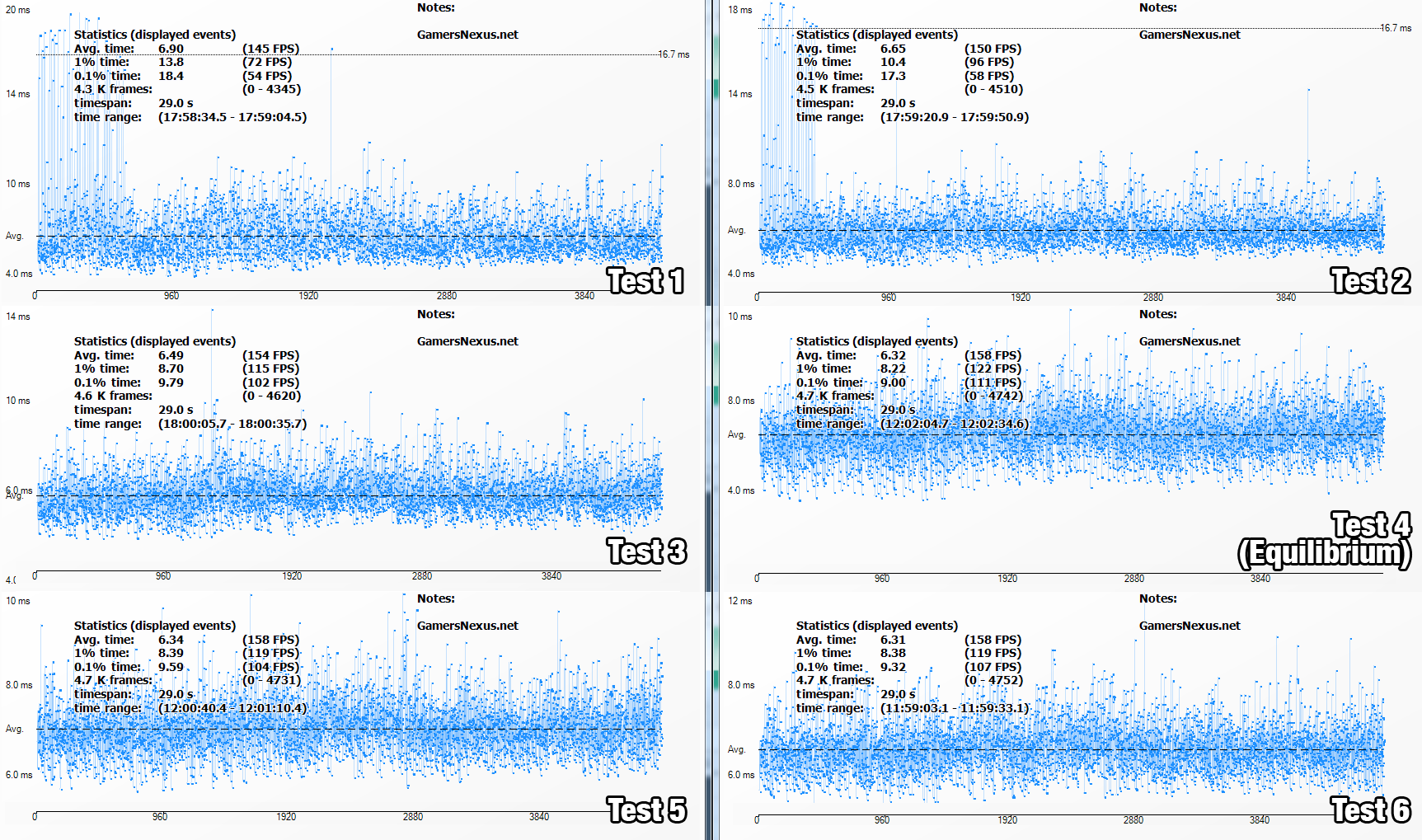

A few more things to note here: AMD ran four test passes per setting, per device, and then averaged all four of those results. In GN's methodology, we run 6-8 test passes, and always throw away the first two sets of data. This is because the game outputs horrifically varied performance when first launched, particularly so with Dx11. We see drastically reduced performance while still loading everything into memory. Some examples of a single device going through multiple test passes are below:

Above: This shows just how much variance there can be in those first few test passes, which is why we discard them.
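The practical difference between the two approaches is which passes feed the final number. A minimal sketch, assuming a simple mean of per-pass average FPS (the text doesn't specify exactly how either party combines passes), with hypothetical pass results in which the first runs are still loading assets; the function name is hypothetical.

```python
from statistics import fmean


def aggregate_passes(pass_fps, discard_first=2):
    """Mean of per-pass results after dropping the first `discard_first` warm-up passes.

    discard_first=0 gives a plain mean over every pass, as in a four-pass average.
    """
    kept = pass_fps[discard_first:]
    if not kept:
        raise ValueError("no passes left after discarding warm-up runs")
    return fmean(kept)


# Hypothetical per-pass averages: the first two passes are still loading assets.
passes = [95.0, 118.0, 131.0, 132.0, 130.0, 133.0, 131.0, 132.0]

print(aggregate_passes(passes))                       # discard first two of eight
print(aggregate_passes(passes[:4], discard_first=0))  # average all of the first four
```

With these made-up numbers, the two lines print 131.5 and 119.0: keeping the warm-up passes drags the result down by more than 12FPS.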

We believe our test is the most representative of what happens in a multiplayer map, specifically with regard to asset spawning and generation. After you've spawned and run around for a minute or two, performance improves greatly with DirectX 11 across the board. Dx12 also posts a gain, but mostly in the 0.1% low frametime performance (averages are pretty similar). That first run will look like garbage compared to the rest, and so we discard it.

This is even visible when the level is loading. You can see prefab elements at various LODs attempting to pop in, especially environment elements like foliage and grass.

Now, another point to note: AMD did not provide us with frametime metrics, and only offered us average framerates. We've already shown, as a few other outlets have, that Battlefield 1's DirectX 12 performance can actually be worse in terms of frametimes than DirectX 11, and as a result can stutter throughout gameplay.

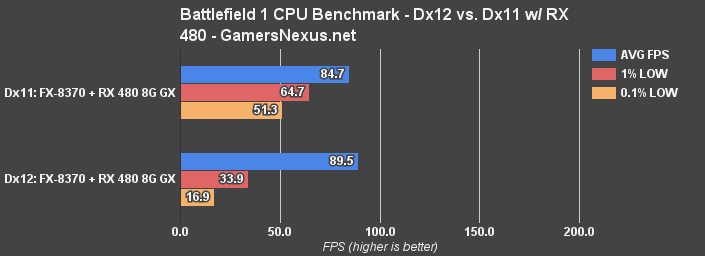

Quick CPU Benchmark with FX-8370 & RX 480 8GB

These tests were conducted on the Through Mud and Blood campaign level, and were tested using a much different methodology. We ran three tests for three minutes each, and did so with highly consistent framerate output because we had practiced the test run and could accurately recreate it each time. These tests were run after receiving input from AMD on their own internal testing of the game.

With an RX 480 and FX-8370, we are still seeing no meaningful scaling. Technically, in this testing, the Dx12 results are about 4% better than Dx11, but that's only 4%, and the negative hit to frametimes is still present. Even with these altered test methods, we are not seeing the performance swings that AMD has reported, and we are definitely not seeing favorable frametime performance in Dx12.

Now, this doesn't mean AMD is wrong, and it also doesn't mean we're wrong. There are a lot of ways to test a game, and a whole lot more variables in that testing. Even the test tools, and the variables extracted from their output, affect the measurements. The FX-6350 also seems to be the odd CPU out for Dx12 scaling, based on additional tests by Techspot's Steven Walton, which show limited or no scaling on an FX-8370 but scaling on an FX-6350. We don't have the latter CPU, unfortunately.

Something else is different in the testing. For one, we don't know all the hardware AMD used, but they did use an FX-6350, which we don't have. It's possible that there's more of a performance gain with that CPU, though it seems unlikely to account for the entire ~28.5% swing. GamersNexus has spent about a week validating these results because we take the disparity very seriously. Having retested in numerous ways and on numerous devices, we are confident that performance between Dx11 and Dx12 remains largely as previously stated. For multiplayer use especially, we are seeing no noteworthy scaling on DirectX 12, regardless of whose CPU or GPU we use.

This fits our previous testing, which extensively validated GPU performance with both DirectX 12 and DirectX 11 and saw similar scaling: effectively zero for averages, and negative for frametimes.

Impact of Combat on Benchmarking (Dx12)

We ran some quick-and-dirty benchmarks of just a few CPUs with a modified load scenario. We can't reasonably perform this benchmark on all devices, as replication is difficult, and we can't reasonably bring it into multiplayer (the map, location on the map, players present, and network latency are too variable). Still, we thought this would at least provide a look at performance during more intense combat, as compared to the other benchmarks.

These were done with Dx12 only, just in case there's a meaningful difference to be found.

Note that our above tests (with the RX 480) were conducted in a more intense, battle-ridden scenario that was easily repeatable for our test passes. The below tests use our Italian mission methodology, but with combat added.

Here's the raw data, just for future point of reference:

| Intel i3-6300 w/ Dx12 | AVG FPS | 1% LOW | 0.1% LOW |
| --- | --- | --- | --- |
| Intense Battle | 97.3 | 34.3 | 12.4 |
| Travel / No Battle | 106.1 | 46.3 | 21.5 |

Combat shaves about 8% off the i3-6300's average FPS, calculated as (new - old) / old, where old is the "no battle" result.

| FX-8370 w/ Dx12 | AVG FPS | 1% LOW | 0.1% LOW |
| --- | --- | --- | --- |
| Intense Battle | 78.9 | 25.3 | 7.2 |
| Travel / No Battle | 91.6 | 42.6 | 12.5 |

And finally:

| i7-5930K w/ Dx12 | AVG FPS | 1% LOW | 0.1% LOW |
| --- | --- | --- | --- |
| Intense Battle | 114.9 | 29.4 | 10.4 |
| Travel / No Battle | 129.5 | 79.5 | 28.8 |
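The combat penalty for every table can be computed the same way as for the i3-6300, as (new - old) / old with the no-battle run as "old". A short sketch over the three Dx12 tables above; the data layout and names are our own, for illustration.

```python
# (AVG FPS, 1% LOW, 0.1% LOW) from the Dx12 tables above.
RESULTS = {
    "i3-6300": {"battle": (97.3, 34.3, 12.4), "no battle": (106.1, 46.3, 21.5)},
    "FX-8370": {"battle": (78.9, 25.3, 7.2), "no battle": (91.6, 42.6, 12.5)},
    "i7-5930K": {"battle": (114.9, 29.4, 10.4), "no battle": (129.5, 79.5, 28.8)},
}
METRICS = ("AVG FPS", "1% LOW", "0.1% LOW")


def pct_change(new, old):
    """(new - old) / old as a percentage; negative means combat is slower."""
    return 100.0 * (new - old) / old


for cpu, runs in RESULTS.items():
    changes = (
        pct_change(new, old) for new, old in zip(runs["battle"], runs["no battle"])
    )
    summary = ", ".join(f"{m} {c:+.1f}%" for m, c in zip(METRICS, changes))
    print(f"{cpu}: {summary}")
```

By this measure, combat costs the FX-8370 roughly 14% and the i7-5930K roughly 11% of their average FPS, with much steeper drops in the lows for all three CPUs.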

Conclusion: Which CPU is Best for Battlefield 1?

Battlefield 1's DirectX 12 performance remains spotty and sub-optimal, regardless of CPU used. The 1% low and 0.1% low metrics are poor even when conducting extended benchmark passes of several minutes, and manifest as occasional stuttering.

Regardless, the hierarchy of the stack remains mostly the same, though the high-end i7 and i5 K-SKU devices do perform significantly better with Dx11. The i7-6700K ($330) posts a definitive performance gain over the i5-6600K ($237), at about 10-11% in averages. The i5-6600K runs about 15% faster than the non-K i5-6400, largely a result of the 6600K's higher frequency. FX-series CPUs and the i3-6300 struggle to keep up with the graphics card in this scenario, though both remain capable performers, delivering a solid performance floor for someone who may own an otherwise low-end system. The i3-6300 generally outperforms the FX-8370 in these tests, though.

The X4 845 and the X4 880K surrogate post fairly limited performance, dragging the GTX 1080 down with them to around 60-65FPS AVG, but would still be playable in Battlefield 1 with reduced settings and a fittingly low-end GPU, like an RX 460, GTX 1050, 1050 Ti, or similar. When the battles get intense, though, these devices will begin to choke considerably more than their FX-8000 and i3 counterparts.

Note that multiplayer is more abusive on the CPU, particularly when tracking the ammunition output of 64 players. For now, this is the most representative benchmark we could create given the obvious complexity of benchmarking a multiplayer game. You will want to account for that additional load when running multiplayer.

Of course, depending on your GPU, the CPU selection could be less significant. If you're running something like a GTX 1060 or RX 480, that extra 10% performance out of an i7 will become largely irrelevant in the face of a lower-end GPU's limitations. We're looking at memory next, as we suspect that may have more of an impact on Battlefield 1 than we see in some other games. But we'll see.

Editorial, Test Lead: Steve "Lelldorianx" Burke

Video Producer: Andrew "ColossalCake" Coleman