Following the launch of 2GB cards, major board partners – MSI and EVGA included – have begun shipping 4GB models of the GTX 960. Most 4GB cards are coming back into stock in early April at around $240 MSRP, approximately $30 more than their 2GB counterparts. We've already got a round-up pending publication with more in-depth reviews of each major GTX 960, but today, we're addressing a much more basic concern: Is 4GB of VRAM worth it for a GTX 960?

This article benchmarks an EVGA GTX 960 SuperSC 4GB card vs. our existing ASUS Strix GTX 960 2GB unit, testing each in 1080p, 1440p, and 4K gaming scenarios.

EVGA GTX 960 4GB SuperSC Graphics Card Specs

| | GTX 960 | GTX 960 Strix | GTX 960 EVGA SuperSC 4GB |
| --- | --- | --- | --- |
| Base Clock (GPU) | 1126MHz | 1291MHz | 1279MHz |
| Boost CLK | 1178MHz | 1317MHz | 1342MHz |
| Mem Config | 2GB / 128-bit | 2GB / 128-bit | 4GB / 128-bit |
| Mem Speed | 7010MHz | 7200MHz | 7010MHz |
| Power | 1x6-pin | 1x6-pin | 6+2-pin |
| TDP | 120W | 120W | >120W (?) |
| MSRP | $200 | $210 | $240 |

First: Note that we will be performing a full review of EVGA's new GTX 960 4GB SuperSC card in short order, including overclocking and thermal performance tests. This is a more limited-scope article with one goal in mind: VRAM utilization analysis.

It's always important to dig through the marketing materials when performing any kind of product analysis. Marketed claims fuel purchases, after all, and need to be carefully validated through testing. EVGA's product page indicates that its SuperSC GTX 960 4GB card ($250) comes “outfitted with 4GB of high-speed GDDR5 memory, giving you higher texture qualities and better 4K performance.” The page goes on to restate this point a few times, primarily tying high-resolution performance to VRAM capacity.

Maxwell's Memory Subsystem Explained

We previously looked into Maxwell's memory subsystem during our GTX 980 review, which drilled into the current-gen platform's architecture. This topic was revisited within our Titan X preview content, though the memory architecture has not changed between the GM200 Titan X chip and GM204 980 chip.

Let's look at it a third time.

Before getting to memory, it's important to have a brief understanding of the GPU driving the GTX 960. The GM206 chip at the heart of all GTX 960 video cards is a slimmed-down version of the GM204, hosting the same underlying architecture and feature-set. The Maxwell SMM is divided into four blocks, each hosting 32 CUDA cores, making for 128 cores per streaming multiprocessor. The GM206 GPU is home to eight streaming multiprocessors, netting a total of 1024 CUDA cores (32 cores * 4 blocks * 8 SMs = 1024).

The reference GTX 960 shipped with 2GB of GDDR5 memory at launch, transacting on a 128-bit bus at an effective 7010MHz operating clock (1753MHz native). Memory bandwidth calculations are explained in our GPU dictionary, but by dividing the memory bus width by eight (conversion to bytes) and then multiplying by the native memory clock, then by 2 for DDR, then by 2 again for GDDR5, we get a memory bandwidth of 112.19GB/s. This makes the GM206 one of the most memory-limited nVidia GPUs on the market, though Maxwell's memory subsystem allegedly makes up for the on-paper limitations with compression optimization.
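For readers who want to check the math, the bandwidth calculation described above can be sketched in a few lines of Python. The function name is our own for illustration, and the sketch assumes 1GB = 1000MB, which is what the 112.19GB/s figure implies.

```python
def memory_bandwidth_gbs(bus_width_bits: int, native_clock_mhz: float) -> float:
    """Peak theoretical memory bandwidth in GB/s (assumes 1GB = 1000MB)."""
    bytes_per_transfer = bus_width_bits / 8          # bits -> bytes
    effective_clock_mhz = native_clock_mhz * 2 * 2   # x2 for DDR, x2 again for GDDR5
    return bytes_per_transfer * effective_clock_mhz / 1000  # MB/s -> GB/s


# Reference GTX 960: 128-bit bus, 1753MHz native memory clock
print(f"{memory_bandwidth_gbs(128, 1753):.2f} GB/s")  # 112.19 GB/s
```

This is a peak, on-paper number only; it says nothing about how much of that bandwidth a given game actually uses.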

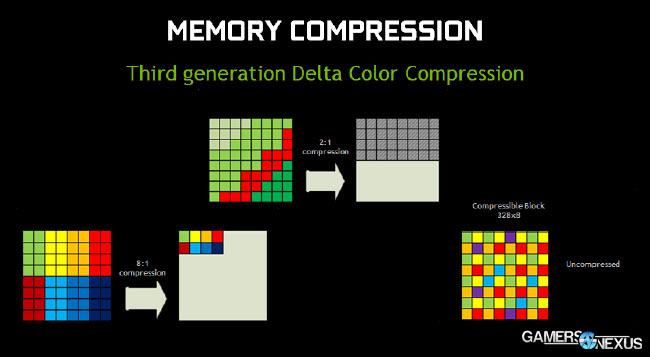

Even with only two 64-bit memory controllers (128-bit memory interface), the GM206's interface is capable of outperforming the 192-bit interface of the GK106 GPU; this is largely thanks to optimization in the memory pipeline by nVidia. Among other features, optimizations include memory compression techniques like third-generation delta color compression, which analyzes the color delta between neighboring pixels within a block of the frame, then stores a reference value plus the deltas rather than absolute values for every pixel. This, we're told, contributes to an overall 25% reduction in transacted bytes per frame vs. the Kepler architecture. Delta color compression is responsible for approximately 17-18% of this total reduction on its own, hence nVidia's derivation of an “effective 9Gbps” memory speed (equivalency to Kepler), although the hard spec for the GM206 is actually 7Gbps.
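One way to see where an “effective 9Gbps” figure could come from: if each frame moves 25% fewer bytes, the same physical link behaves like a faster one. The sketch below is our own reading of that equivalency, not nVidia's published derivation, and the function name is hypothetical.

```python
def effective_rate_gbps(actual_gbps: float, byte_reduction: float) -> float:
    """Equivalent uncompressed data rate when traffic shrinks by `byte_reduction`.

    Assumption: moving (1 - byte_reduction) as many bytes per frame is treated
    as equivalent to a link that is 1 / (1 - byte_reduction) times faster.
    """
    return actual_gbps / (1.0 - byte_reduction)


# 7Gbps hard spec, 25% fewer bytes transacted per frame vs. Kepler
print(round(effective_rate_gbps(7.0, 0.25), 2))  # 9.33
```

The result lands a little above 9Gbps, which is consistent with the marketed figure under this assumption.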

What Does Extra VRAM Actually Do?

Greater video memory capacities enable gaming scenarios that are asset-intensive and would otherwise saturate the framebuffer. Video memory is a volatile form of storage (like system memory) that retains frequently accessed assets so they can be served quickly when the game calls upon them. An example of something that might get stored in video memory would be the texture files for the active game cell. Video memory is also fed assets like shadow maps, normal maps, and specular maps that are needed for every frame rendered; these items were discussed in our recent Crytek interview about physically-based rendering, if you're curious.

A card with less memory that is asked to store very high-resolution textures will cache-out more frequently, forcing it to rapidly dump and fetch assets on demand. This exchange happens between the much slower system memory and the GPU's memory, and it adds overhead and latency because the data is forced through the PCI-e bus over a comparatively long path. Exceeding GPU memory results in somewhat staggering framerate drops that become jarring and noticeable to the user, something we'll discuss in the benchmark results below.
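To put rough numbers on why swapping over PCI-e hurts, the sketch below compares how long a batch of assets would take to move at the GTX 960's on-paper VRAM bandwidth vs. a PCI-e 3.0 x16 link. The PCI-e generation, the 256MB batch size, and the function name are our assumptions for illustration; real transfers also pay latency and driver overhead that this ignores.

```python
VRAM_GBS = 112.19  # GTX 960 peak memory bandwidth (on-paper figure)

# Assumption: PCI-e 3.0 x16 -> 8 GT/s per lane, 16 lanes, 128b/130b encoding
PCIE3_X16_GBS = 8 * 16 * (128 / 130) / 8  # ~15.75 GB/s theoretical


def transfer_ms(size_mb: float, bandwidth_gbs: float) -> float:
    """Ideal time in milliseconds to move `size_mb` at `bandwidth_gbs`."""
    return size_mb / 1000 / bandwidth_gbs * 1000


batch_mb = 256  # hypothetical amount of texture data swapped in
print(f"VRAM:  {transfer_ms(batch_mb, VRAM_GBS):.2f} ms")
print(f"PCI-e: {transfer_ms(batch_mb, PCIE3_X16_GBS):.2f} ms")
print(f"Frame budget at 60FPS: {1000 / 60:.2f} ms")
```

Even in this best case, the PCI-e transfer takes roughly as long as an entire frame at 60FPS, which is the kind of stall that shows up as a sudden dip rather than a lower average.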

Memory Bandwidth Limitations & Solutions

Even with Maxwell's efficiency gains over Kepler, a 128-bit memory bus is still relatively narrow. This raises the question of whether the GM206 can transact memory rapidly enough to adequately utilize a 4GB buffer. Overclocking – something we'll test in the forthcoming review – will increase memory bandwidth by way of increased clockrate, but the gains are as yet unexplored. It is also up for investigation whether the GTX 960's overall performance output can sustain framerates high enough to encounter a memory bottleneck, rather than bottlenecks elsewhere in the pipe.

The Tests: Resolution Scaling & FPS Consistency

We conducted a large suite of real-world tests, logging VRAM consumption in most of them for comparative analysis. The games and software tested include:

- Assassin's Creed Unity (Ultra, 1080p only).

- Far Cry 4 (Ultra, 1080, 1440, 4K).

- Cities: Skylines (High, 1080, 1440, 4K).

- GRID: Autosport (Ultra, 1080, 1440, 4K).

- Metro: Last Light (Very High + Very High tessellation on 1080; Very High / High on 1440; High / High on 4K).

- Battlefield: Hardline (Ultra, 1080, 1440, 4K).

- 3DMark Firestrike Benchmark

We already know ACU and Far Cry 4 consume massive amounts of video memory, often in excess of the 2GB availability on our tested ASUS Strix card. GRID: Autosport and Metro: Last Light provide highly-optimized benchmarking titles to ensure stability on the bench. Battlefield: Hardline is new enough that it also heavily eats RAM, though we had some difficulty logging FPS in the game (explained below, along with our workaround). 3DMark offers a synthetic benchmark that is predictable in its results, something of great importance in benchmarking.

We added Cities: Skylines to the bench to diversify the titles tested. Our objective with these tests is to observe a sweeping range of titles that users would actually play, so moving away from the heavy focus on single-entity games (FPS & RPG titles) and into a zoomed-out management game assists in that. Cities: Skylines is among the most desirable titles to play on 4K resolutions right now; most of these other games exhibit various usability issues at 4K – like UI scaling improperly or textures stretching to the point of looking worse. At 4K resolutions, Cities looks better at greatly zoomed-out vantage points and allows the player to view more of the usable gaming space at any given time, ensuring a real-world, desirable 4K gaming scenario.

Games with greater asset sizes will spike during peak load times, resulting in the most noticeable dips in performance on the 2GB card as memory caches out. Our hypothesis going into testing was that although the two video cards may not show massive performance differences in average FPS, they would potentially show disparity in the 1% low and 0.1% low (effective minimum) framerates. These are the numbers that most directly reflect jarring user experiences during “lag spikes,” and are important to pay attention to when assessing overall fluidity of gameplay.


GPU Test Methodology

We tested using our updated 2015 GPU test bench, detailed in the table below. Our thanks to supporting hardware vendors for supplying some of the test components.

The latest 347.88 GeForce driver was used during testing. Game settings were manually controlled for the DUT. Overclocking was neither applied nor tested, though stock overclocks (“superclocks”) were left untouched. The two devices tested are not identical in clock speeds, and so some small disparity may be reflected in FPS output as a result of clock speeds.

VRAM utilization was measured using MSI's Afterburner, a custom version of the Riva Tuner software. Parity checking was performed with GPU-Z. FPS measurements were taken using FRAPS where possible. In the case of Battlefield: Hardline, which did not accept FRAPS input, we used MSI Afterburner to measure average FPS and did not calculate percentage lows.

FPS logs were analyzed using FRAFS, then added to an internal spreadsheet for further analysis.

Each game was tested for 30 seconds in an identical scenario on the two cards, then repeated for parity.

| GN Test Bench 2015 | Name | Courtesy Of | Cost |
| --- | --- | --- | --- |
| Video Card | EVGA GTX 960 SuperSC 4GB, ASUS Strix GTX 960 2GB | EVGA, ASUS | $240 & $210 |
| CPU | Intel i7-4790K CPU | CyberPower | $340 |
| Memory | 32GB 2133MHz HyperX Savage RAM | Kingston Tech. | $300 |
| Motherboard | Gigabyte Z97X Gaming G1 | GamersNexus | $285 |
| Power Supply | NZXT 1200W HALE90 V2 | NZXT | $300 |
| SSD | HyperX Predator PCI-e SSD | Kingston Tech. | TBD |
| Case | Top Deck Tech Station | GamersNexus | $250 |
| CPU Cooler | Be Quiet! Dark Rock 3 | Be Quiet! | ~$60 |

We strictly looked at the effect of memory capacity on GTX 960 performance, so other video cards are not shown in these tables. In an effort to consolidate graphs and data presentation, we've listed multiple resolutions per graph where applicable.

Average FPS, 1% low, and 0.1% low times are measured. We do not measure maximum or minimum FPS results as we consider these numbers to be pure outliers. Instead, we take an average of the lowest 1% of results (1% low) to show real-world, noticeable dips; we then take an average of the lowest 0.1% of results for severe spikes.
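As a rough illustration of the metrics described above, the sketch below derives average FPS, 1% low, and 0.1% low from a list of frametimes in milliseconds. It is one reasonable interpretation rather than the exact FRAFS or spreadsheet logic we use; the function and key names are hypothetical, and the sample data is synthetic.

```python
def fps_summary(frametimes_ms):
    """Average FPS plus the mean of the slowest 1% and 0.1% of frames."""
    if not frametimes_ms:
        raise ValueError("need at least one frametime")

    # Per-frame instantaneous FPS, sorted so the slowest frames come first
    fps = sorted(1000.0 / t for t in frametimes_ms)

    def low(divisor):
        # Average the lowest 1/divisor of results (always at least one frame)
        count = max(1, len(fps) // divisor)
        return sum(fps[:count]) / count

    return {
        "avg": 1000.0 * len(frametimes_ms) / sum(frametimes_ms),
        "low_1pct": low(100),
        "low_0_1pct": low(1000),
    }


# Synthetic run: 990 smooth frames at 20ms and 10 stutters at 50ms
sample = [20.0] * 990 + [50.0] * 10
result = fps_summary(sample)
print({name: round(value, 2) for name, value in result.items()})
```

In this synthetic run the average stays near 49FPS while both low figures fall to 20FPS, which is the pattern we look for when a card runs out of memory: averages barely move, but the lows collapse.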

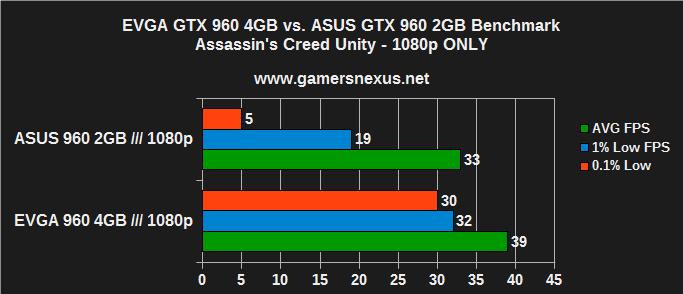

Assassin's Creed Unity: Massive Improvement with 4GB GTX 960

Assassin's Creed Unity is the poster-child of memory capacity advantages. The game regularly capped-out our available memory on the 4GB card and fully saturated the 2GB card. This saturation results in memory swapping between system RAM and the GPU's memory, causing the massive spikes reflected by the 1% low and 0.1% low numbers.

In this scenario, Assassin's Creed Unity has a massive performance differential between the 4GB and 2GB options, to the point that 4GB of VRAM will actually see full utilization and benefit to the user's gameplay. Despite similar average FPS numbers, ACU exhibited jarring, sudden framerate drops with the 2GB card as memory cycled, effectively making the game unplayable on ultra settings at 1080p. The 4GB card had an effective minimum of 30FPS and an average of 39FPS, making for a generally playable experience. Settings could be moderately tweaked for greater performance.

A 4GB card has direct, noticeable impact on the gaming experience with ACU.

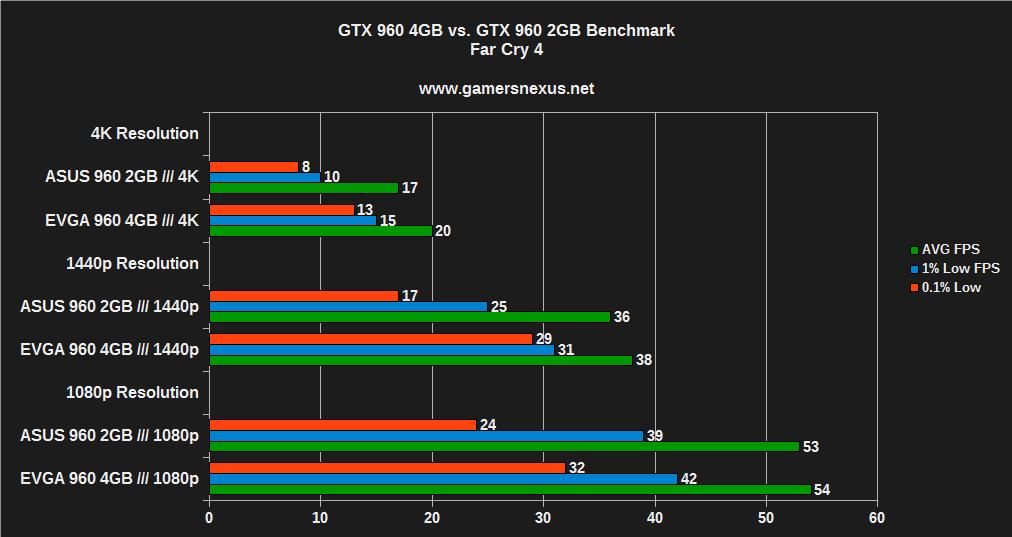

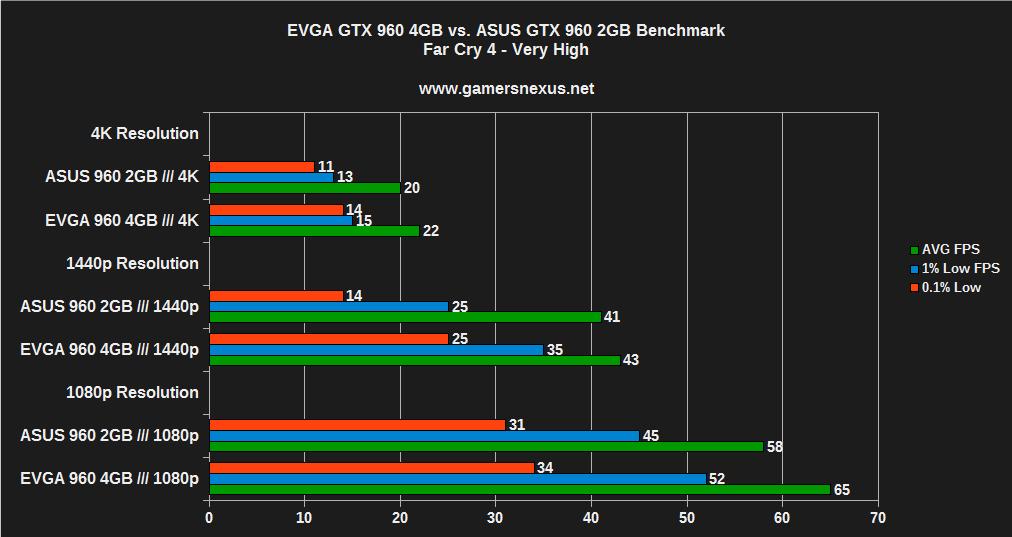

Far Cry 4 GTX 960 Benchmark – Less Exciting, But Useful at 1440p

The above is for "Ultra" settings. Below is "Very High," a chart requested by redditor "SPOOFE."

Far Cry 4, also a Ubisoft game, has a far less exciting chart. The 4GB model doesn't have as large of an impact on average FPS at any of the posted resolutions and is low-performing enough to be irrelevant at 4K. We saw a 52% gain in 0.1% low performance using the 4GB card at 1440p (Ultra), decreasing jarring frame drops substantially and improving overall fluidity of experience.

It should be noted that even at 40FPS, Far Cry 4 isn't particularly enjoyable; we'd have to drop the settings closer to “very high” or “high” to be happy with performance, as the game plays best closer to 50-60FPS. The chart still reflects relative performance gains of 4GB over a 2GB unit.

At 1080p, performance is high enough with each card that memory capacity shouldn't necessarily be a driving purchasing factor.

Cities: Skylines GTX 960 4GB Benchmark

We loaded the GTA: Los Santos user-created map for Cities: Skylines testing, producing an easily replicated flyover of the city for testing.

Performance at 4K and 1440p was effectively identical with each card, making little argument for the extra expense of the 4GB card. Cities: Skylines simply does not use enough memory for memory capacity to become relevant, even at higher resolutions. Performance exceeded 60FPS average at 1080p.

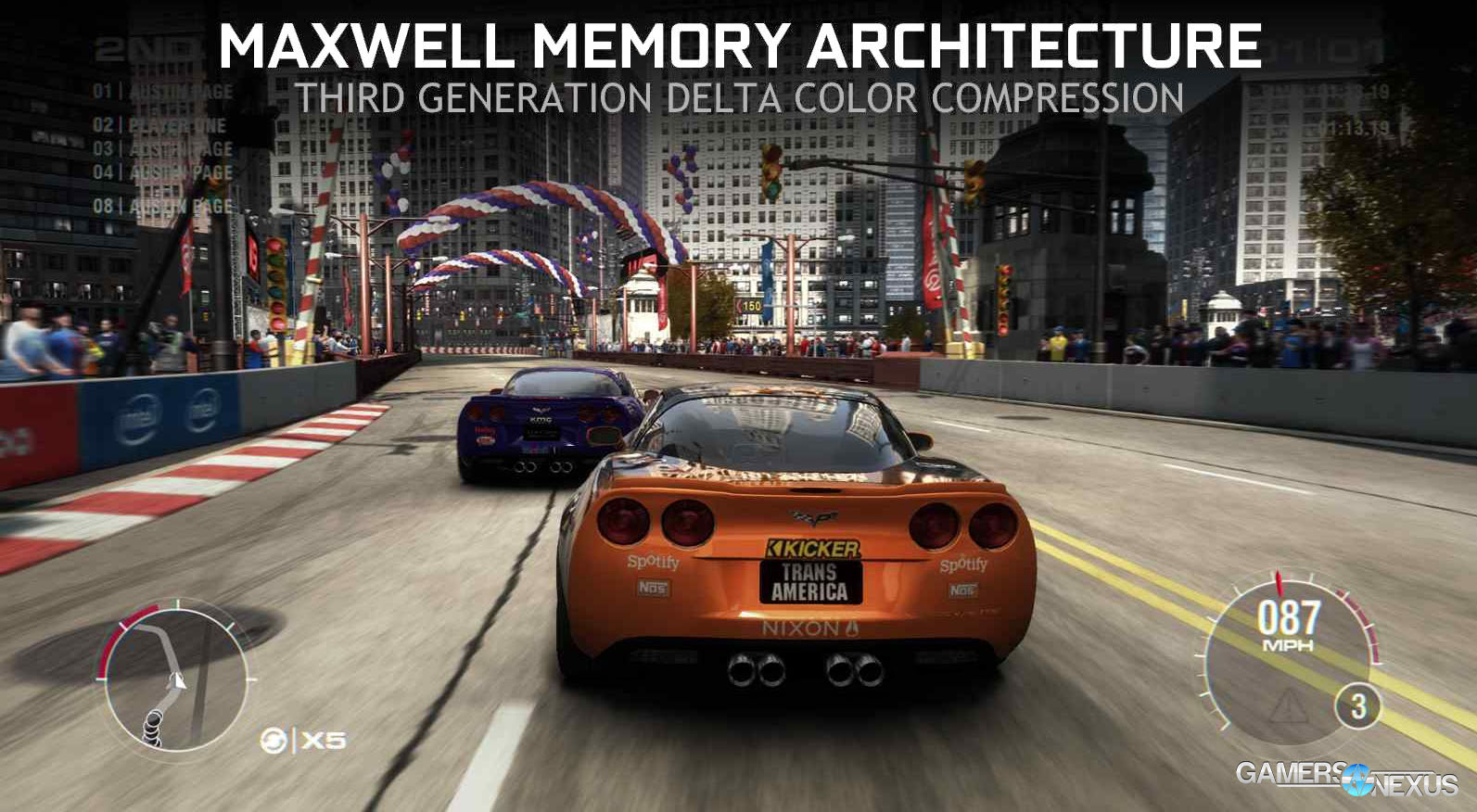

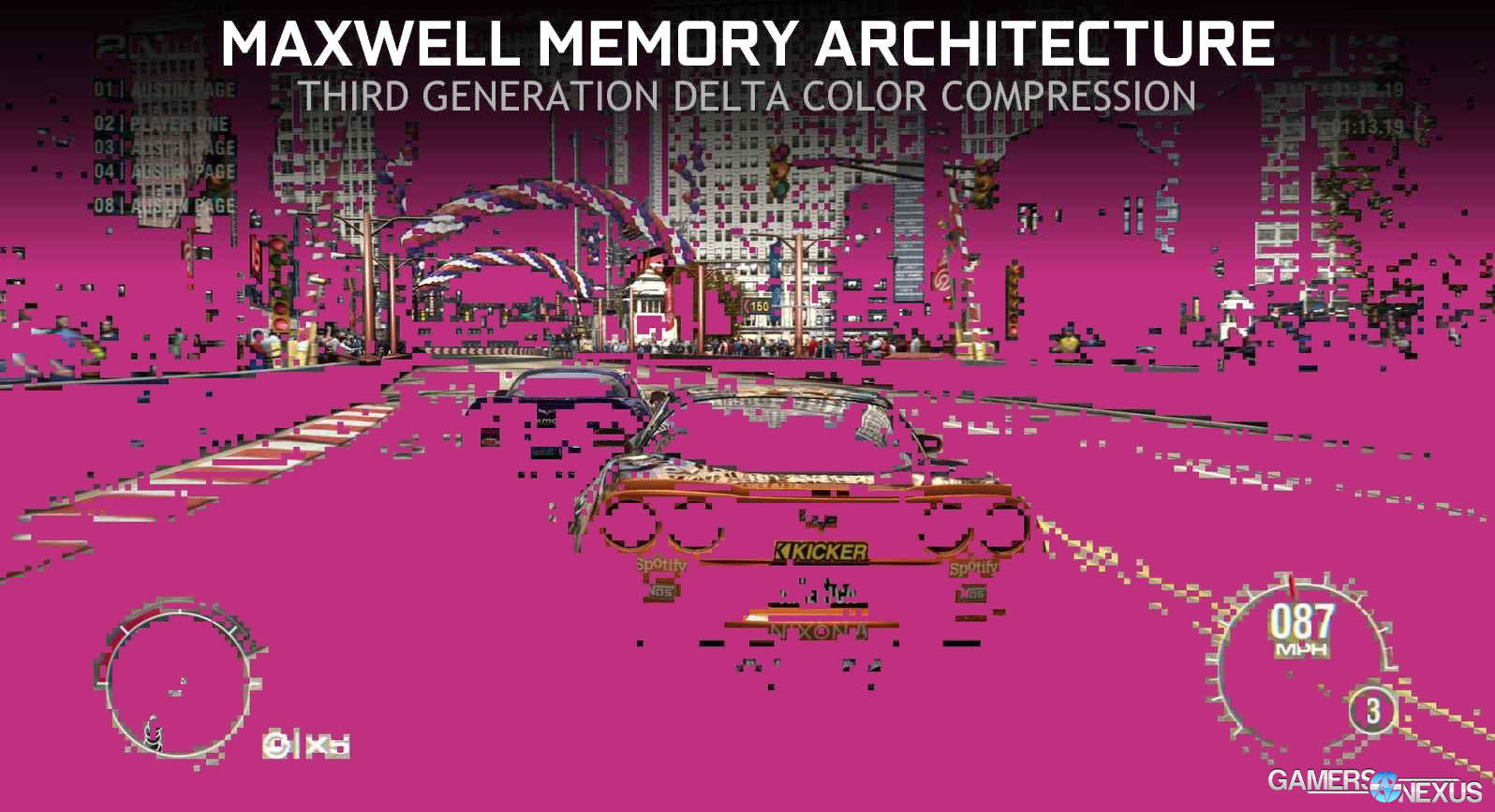

GRID: Autosport GTX 960 Benchmark – No Advantage

GRID: Autosport featured largely similar performance to Cities, although 4K performance did have marginally less severe 0.1% spikes. Even at 4K resolution, as above, there is very little argument for the 4GB model.

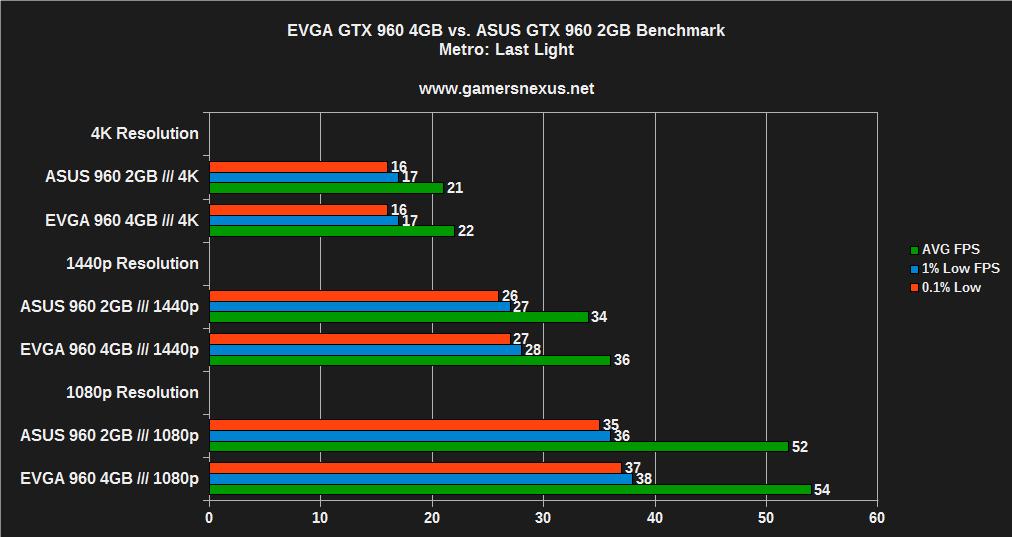

Metro: LL 4GB vs. 2GB 960 Bench – More VRAM isn't “More Better”

Note that Metro: Last Light is so heavily optimized for benchmarking (on the software and driver sides) that it is our most reproducible test on the bench, but doesn't necessarily show hardware advantage in these scenarios. Using various settings (defined in the methodology above) and resolutions, Metro: Last Light sees absolutely no benefit from a 4GB video card.

Battlefield: Hardline Benchmark – Noticeable at Higher Resolutions

Battlefield: Hardline did not accept FRAPS input, so we used MSI Afterburner for FPS logging. BFHL saw a massive performance disparity at 4K, though each card remains unplayably slow at such a resolution. With tweaked (medium-high) settings, the 4GB card would achieve a playable FPS sooner than the 2GB card at 4K resolutions.

At 1440p, the 4GB card's gains are noticeable during framerate dips, though both devices perform relatively well at 1440p with Ultra settings.

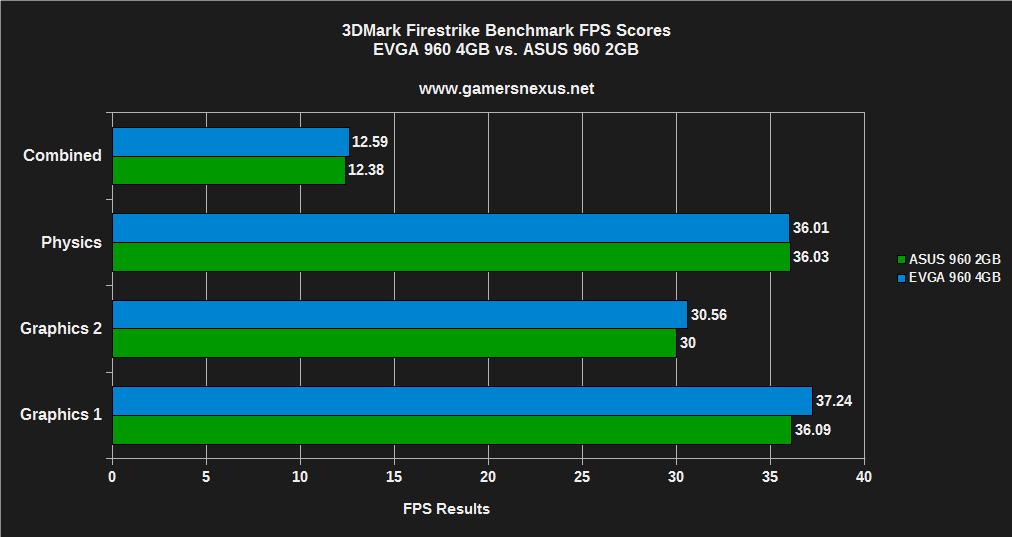

3DMark Firestrike Synthetic Benchmark

This test used 3DMark's Firestrike for testing, a utility we recommend to system enthusiasts.

Performance was effectively identical between the two cards using the highly-intensive 3DMark Firestrike benchmark.

Maximum VRAM Consumption Measured with 4GB Card

This chart shows VRAM consumption of a few of the more intensive games above. We placed GRID on the chart to show its scaled memory consumption based upon resolution. We have not yet tested BFHL, ACU, or FC4 on our Titan X, but will soon do so to see whether memory consumption exceeds 4GB.

Games that consumed more memory showed larger performance disparities in 0.1% and 1% low times.

Conclusion: Will Games Use a 4GB GTX 960 Graphics Card?

The answer to the “is a 4GB video card worth it?” question is a decidedly boring “it depends.” As above, some games – like ACU – will actually make noteworthy gains with additional memory, while others are completely indifferent to the added capacity. For the most part, games are either optimized in such a fashion that additional VRAM offers no net gain or consume so little memory that the extra capacity is largely irrelevant.

In the few cases above where an added 2GB vastly improves performance, it could be argued that users should instead invest in a higher-end GPU altogether, though that becomes a cost analysis that depends on the buyer's budget.

The 4GB GTX 960 ($240) can see noticeable, massive performance gains in the right situations. If you're playing the games that would see advantage from a 4GB card, it's worth considering an additional $30 for the purchase. For those who are interested in less demanding or better optimized titles (Metro, GRID), like some of those tested above, a 2GB Strix card ($210) sees effectively identical and impressive performance compared to the 4GB card.

- Steve “Lelldorianx” Burke.