NVIDIA’s G-Sync variable refresh rate hardware was first announced back in October, but has been making the rounds at CES 2014; we wrote about ASUS’ new 1440p G-Sync-enabled display – one of the first of its kind (and, you know, $800) – and BenQ’s 1080p offerings, but now it’s time to look more specifically at the practical impact of G-Sync and how it works.

We had a chance to talk at CES 2014 with NVIDIA G-Sync Technical Marketing Specialist Vijay Sharma, who broke down the basics of G-Sync, the stuttering and tearing that result from toggling V-Sync on or off, and its relevance to gamers. Here’s the video example of frame stuttering and tearing, shown against the relative smoothness of G-Sync.

To quickly recap what Sharma covers above, we first look to the slide deck from NVIDIA’s Montreal event from October, when G-Sync was unveiled:

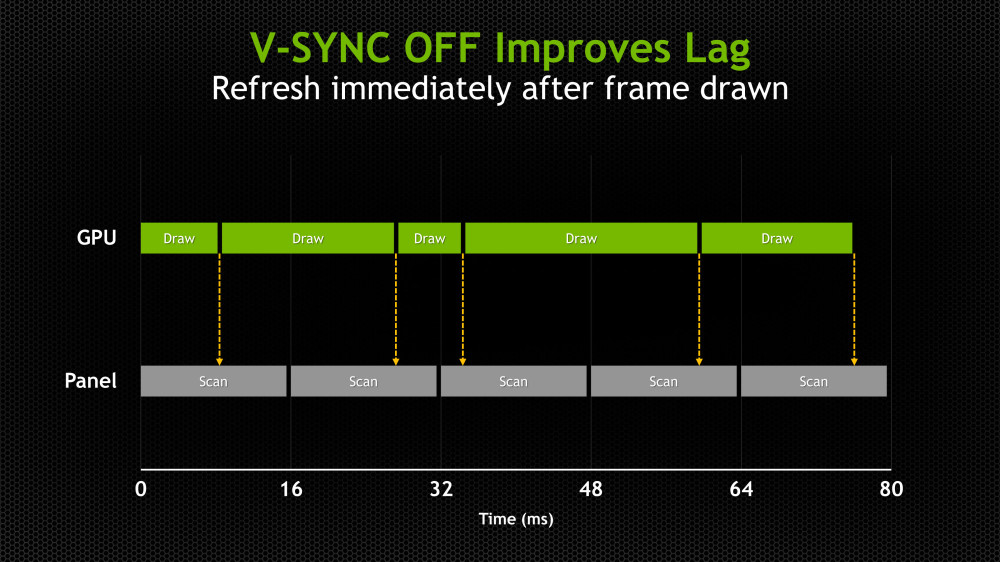

In traditional LCD/GPU setups, the monitor is constantly refreshing at a fixed vertical rate. If you’ve got a 60Hz monitor (fairly standard), it’s going to refresh about once every 16.7ms (1000ms / 60); this means that, ideally, the GPU should render a new frame and dispatch it down the pipeline every 16.7ms. If the GPU takes longer than this refresh window to finalize the frame – say 19ms or 20ms – what ends up on screen depends on settings, chiefly whether V-Sync is enabled.
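The refresh-window arithmetic can be sketched in a few lines of Python. This is a minimal illustration, assuming a fixed-refresh display and treating render time as the only factor; the helper names `refresh_interval_ms` and `misses_window` are hypothetical.

```python
def refresh_interval_ms(refresh_hz: float) -> float:
    """Time between refreshes on a fixed-refresh display, in milliseconds."""
    return 1000.0 / refresh_hz


def misses_window(render_ms: float, refresh_hz: float) -> bool:
    """True if a frame takes longer to render than one refresh interval."""
    return render_ms > refresh_interval_ms(refresh_hz)


if __name__ == "__main__":
    print(f"60Hz refresh interval: {refresh_interval_ms(60):.2f} ms")  # 16.67 ms
    for render_ms in (14.0, 16.0, 19.0, 20.0):
        status = "misses" if misses_window(render_ms, 60) else "makes"
        print(f"{render_ms:.0f} ms frame {status} the 60Hz window")
```

At 60Hz, the 14ms and 16ms frames make the window, while the 19ms and 20ms frames from the example miss it.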

With V-Sync enabled – which ties the framerate to the refresh rate (frequency) of the display – we can experience frame stutters. This happens when there’s a visible “skip” in your output. It almost feels like lag, but is really just a frame that’s been displayed twice. Because the GPU wasn’t ready to deliver the next frame to the monitor within our 16.7ms “agreement” window, the monitor re-displays the previous frame a second time. This is what causes the appearance of stutters in movement: by the time the new frame is done rendering, you’ll have moved further in the game, and the control input will feel temporarily mismatched to the visual output.
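To make the repeated-frame effect concrete, here is a simplified Python model of V-Sync. It assumes frames finish at known timestamps, that the display only switches to a new frame at a refresh boundary, and that the GPU keeps rendering rather than stalling (real double-buffered V-Sync also makes the GPU wait). The function names and frame timings are hypothetical.

```python
from typing import List, Optional

REFRESH_HZ = 60  # assumed fixed refresh rate


def vsync_schedule(frame_done_ms: List[float], num_refreshes: int) -> List[Optional[int]]:
    """Index of the frame on screen at each fixed refresh (None = nothing yet).

    Simplified model: frames complete at the given sorted timestamps, and the
    display only switches to a new frame at a refresh boundary.
    """
    shown: List[Optional[int]] = []
    current: Optional[int] = None
    next_frame = 0
    for tick in range(1, num_refreshes + 1):
        t = tick * 1000.0 / REFRESH_HZ
        # Swap in the newest frame that finished before this refresh.
        while next_frame < len(frame_done_ms) and frame_done_ms[next_frame] <= t:
            current = next_frame
            next_frame += 1
        shown.append(current)
    return shown


def repeated_refreshes(shown: List[Optional[int]]) -> int:
    """Count refreshes that re-display the previous frame (visible stutter)."""
    return sum(1 for a, b in zip(shown, shown[1:]) if a is not None and a == b)


if __name__ == "__main__":
    # Hypothetical completion times: the third frame takes 22 ms to render.
    done = [15.0, 30.0, 52.0, 70.0]
    shown = vsync_schedule(done, 5)
    print(shown)                      # [0, 1, 1, 2, 3]
    print(repeated_refreshes(shown))  # 1
```

Frame 1 stays on screen for two refreshes (about 33ms) because frame 2 finished just after the 50ms refresh; that doubled frame is the “skip” in the output.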

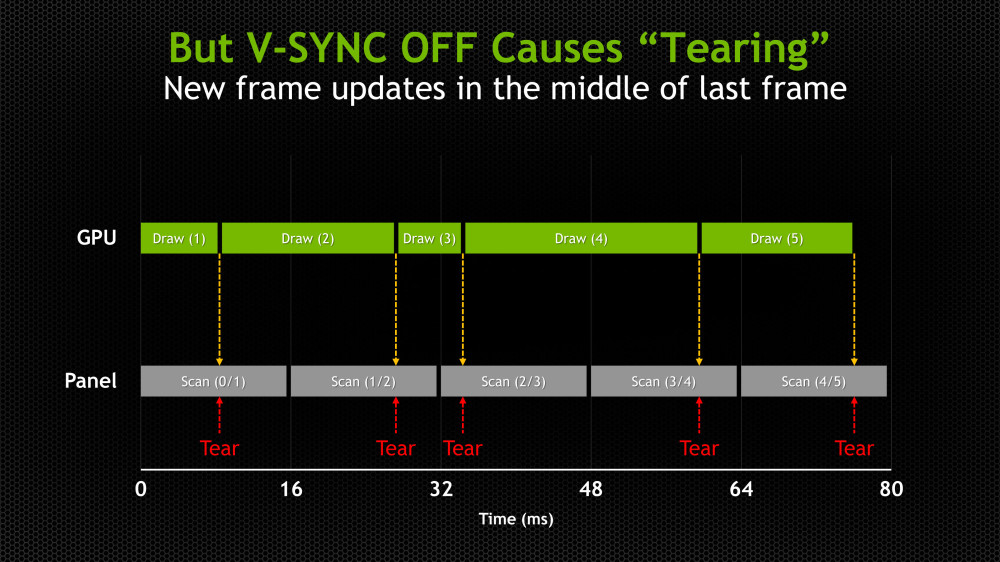

And that’s why a lot of gamers – competitive especially – play with V-Sync disabled. What happens here, though, is we end up with tearing instead of stuttering. A tear occurs when the display shows parts of multiple frames during a single refresh, because the GPU swaps in a new frame while the monitor is still partway through drawing the screen. In essence, you might have a third of the previous frame, a third of the current frame, and a third of the impending frame all on screen simultaneously, stacked in horizontal bands down the display. This generates very noticeable jagged lines that cut through the landscape in the game. If you’ve ever seen a tree, a building, or some other on-screen object get sort-of sliced in a jagged manner (almost as if the trunk of the tree is misaligned), that’s because you’re seeing slices of multiple frames stitched together. There is no grace in this, and we end up with mismatched objects that don’t quite fit.
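The banding can be modeled by treating scanout as a top-to-bottom sweep that lasts one full refresh interval (ignoring vertical blanking, a simplifying assumption). With V-Sync off, every buffer swap that lands mid-sweep changes which frame the remaining rows come from. The `torn_bands` function below is a hypothetical sketch, not how any particular driver or monitor implements scanout.

```python
from typing import List, Tuple


def torn_bands(
    refresh_start_ms: float,
    interval_ms: float,
    height_px: int,
    frame_done_ms: List[float],
    frame_on_screen: int,
) -> List[Tuple[int, int, int]]:
    """Return (first_row, end_row_exclusive, frame_index) bands for one refresh.

    Assumes V-Sync is off, so each frame is swapped in the moment it finishes,
    and scanout moves linearly from row 0 to height_px over interval_ms.
    """
    bands: List[Tuple[int, int, int]] = []
    row = 0
    current = frame_on_screen
    refresh_end_ms = refresh_start_ms + interval_ms
    for idx, done in enumerate(frame_done_ms):
        if refresh_start_ms < done < refresh_end_ms:
            # Row the scanout has reached when this frame is swapped in.
            swap_row = round((done - refresh_start_ms) * height_px / interval_ms)
            if swap_row > row:
                bands.append((row, swap_row, current))
                row = swap_row
            current = idx
    bands.append((row, height_px, current))
    return bands


if __name__ == "__main__":
    # Hypothetical: 60Hz, 1080 rows, frame 0 on screen, frames 1 and 2 finish mid-scanout.
    for band in torn_bands(0.0, 1000.0 / 60, 1080, [0.0, 5.0, 11.0], 0):
        print(band)
```

With these numbers the screen shows three bands: rows 0 to 324 from frame 0, rows 324 to 713 from frame 1, and rows 713 to 1080 from frame 2, roughly the thirds described above. Each band boundary is a tear line.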

Most gamers prefer this to stuttering because we can mentally adjust for tearing by compensating with control input; as a skilled gamer, you’d have a reasonable expectation of opponent movement and could passively, mentally assemble the frames to determine the actual in-game location of the enemy.

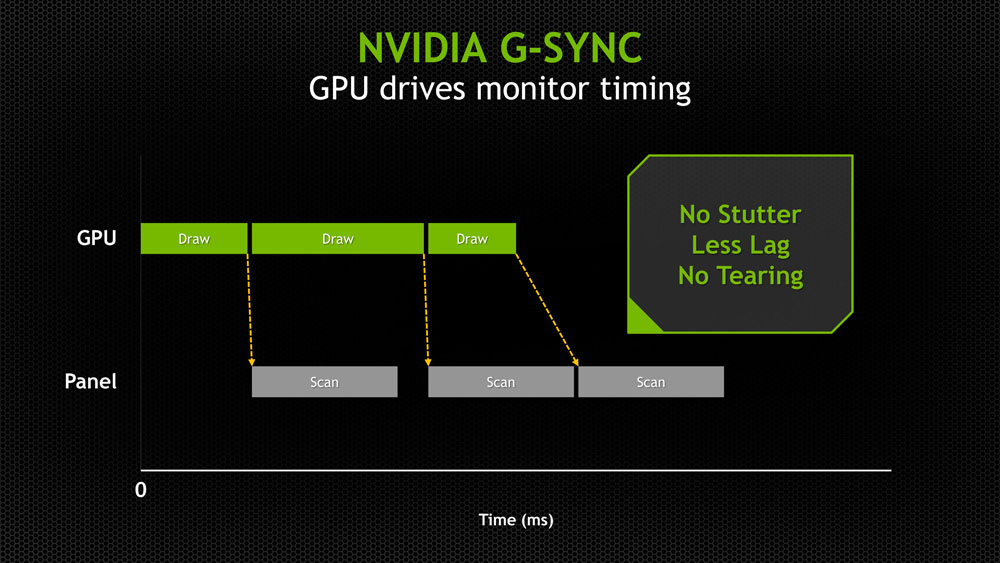

G-Sync eliminates all of this (and in theory, AMD’s new FreeSync will help in many similar ways). G-Sync slaves the monitor to the GPU, rather than allowing them to work independently; as a result, the GPU tells the monitor to refresh only when it has prepared a new frame for display – never before. This results in significantly smoother movement and scene transitions, as noted in our video b-roll (watch the mountain toward the right of the screen).
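By contrast, a variable-refresh display can be sketched as refreshing once per finished frame. This Python model is a simplification, not NVIDIA’s implementation: it assumes the panel has a maximum refresh rate (144Hz here, a hypothetical default) that caps how soon it can refresh again, and it ignores the minimum refresh rate below which a real panel would have to re-display a frame anyway. The function name is hypothetical.

```python
from typing import List


def variable_refresh_times(frame_done_ms: List[float], max_refresh_hz: float = 144.0) -> List[float]:
    """Refresh timestamps when the display waits for the GPU.

    Each finished frame triggers exactly one refresh, as soon as the frame is
    ready but no sooner than the panel's minimum interval after the last one.
    """
    min_interval_ms = 1000.0 / max_refresh_hz
    refreshes: List[float] = []
    last = float("-inf")
    for done in frame_done_ms:
        t = max(done, last + min_interval_ms)
        refreshes.append(t)
        last = t
    return refreshes


if __name__ == "__main__":
    # Hypothetical completion times, including one slow 22 ms frame.
    print(variable_refresh_times([15.0, 30.0, 52.0, 70.0]))  # [15.0, 30.0, 52.0, 70.0]
```

Every frame is shown exactly once, at the moment it is ready, so a slow frame simply stays up a little longer instead of forcing a repeated refresh or a mid-scan swap.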

If you’ve got any questions about G-Sync or other NVIDIA tech, let us know below!

- Steve "Lelldorianx" Burke.