NVIDIA's keynote today kicked off with Linkin Park's appropriately-loud "A Light That Never Comes." We’ve been at nVidia’s GTC since yesterday, but today is the official kick-off of the GPU Technology Conference. nVidia CEO Jen-Hsun Huang hosted today’s GTC Keynote (part of nVidia’s annual hype parade) to discuss advancements in GPU technology, SDKs for gaming applications, machine learning, PCIe bandwidth, and Pascal, the new architecture that follows Maxwell. We’ll primarily focus on the GPU technology and Pascal here; I have a full-feature article pending publication that covers all of the SDK information.

The big news is the announcement of nVidia's Pascal architecture (the follow-up to Maxwell), along with Titan Z specifications and NVLink.

NVidia did not dive into information on the GM110, as some speculated it would, and instead focused on upcoming technology (read: far future).

NVLink, Memory Bandwidth, Heterogeneous Chips, & Pascal Architecture

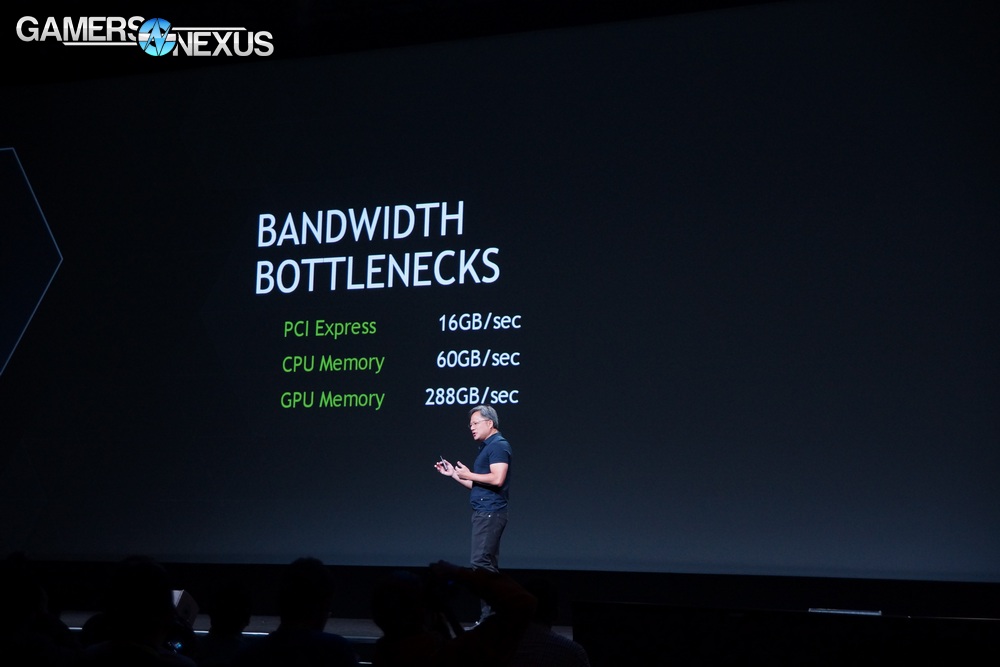

NVLink was announced immediately. Huang pointed out that, of the CPU's memory bandwidth, the GPU's memory bandwidth, and the PCIe interface between them, the greatest bottleneck is the PCIe interface, which is stuck at 16GB/s.

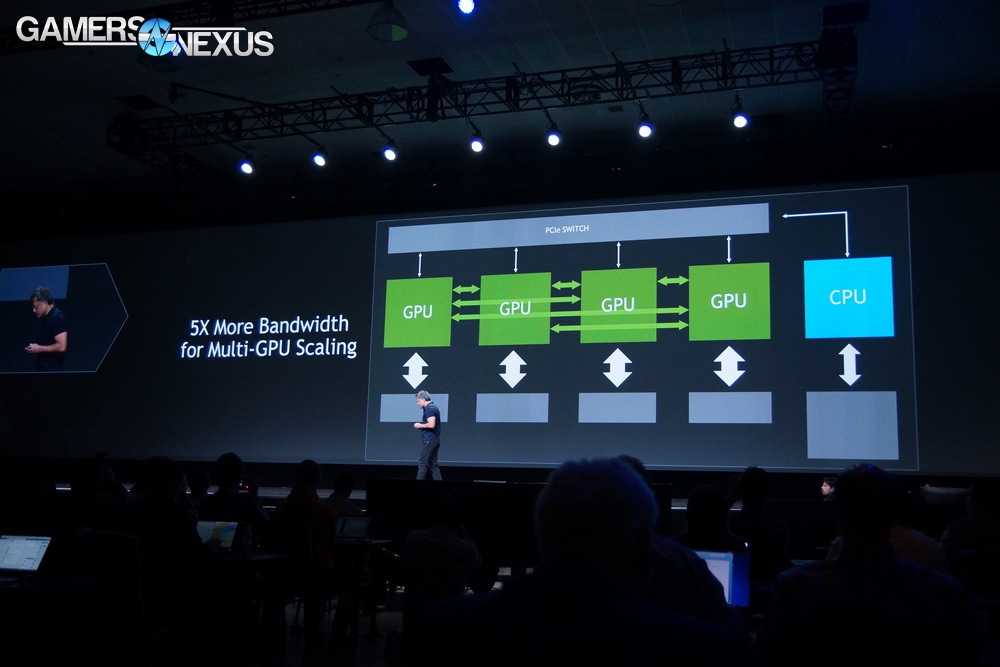

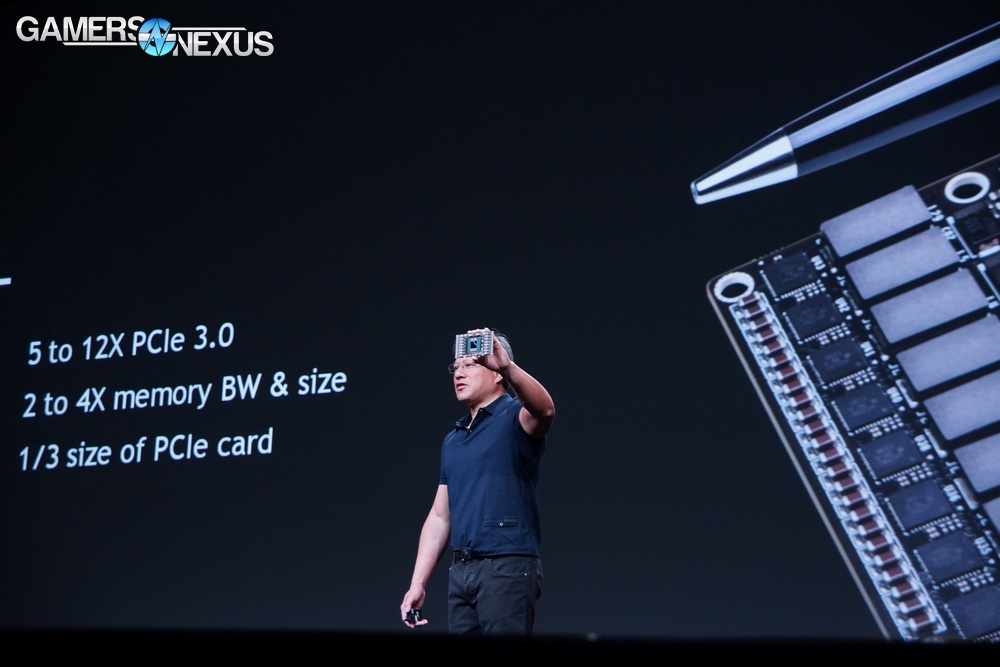

NVLink is a new chip-to-chip interconnect that keeps the PCIe programming model (with DMA+), enabling unified memory between the CPU and GPU, with cache coherency between their caches arriving in its second generation. NVLink reportedly delivers 5x-12x the bandwidth of PCIe 3.0. NVidia calls this a "big lead" on solving the PCIe bottleneck, further emphasizing that although the GPU's memory bandwidth already far exceeds that of the CPU, system memory, storage, and PCIe bus, the GPU can still make use of even more bandwidth for on-chip computations. It is worth noting that a video card, at this point, is effectively a self-contained computer, though it obviously still communicates with the rest of the system. NVLink can also be used to accelerate GPU-to-GPU communications.
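As a rough sanity check, the sketch below multiplies the 16GB/s PCIe figure quoted in the keynote by the claimed 5x-12x range and estimates how long a bulk transfer would take at each rate. The 12GB payload and the function names are illustrative assumptions, not nVidia numbers, and these are peak rates that ignore protocol overhead.

```python
# Back-of-envelope estimate only: peak rates, no protocol overhead.
PCIE_GBPS = 16.0          # PCIe figure quoted in the keynote (GB/s)
NVLINK_SPEEDUP = (5, 12)  # claimed range relative to PCIe 3.0


def nvlink_bandwidth_range(pcie_gbps=PCIE_GBPS, speedup=NVLINK_SPEEDUP):
    """Return the (low, high) implied NVLink bandwidth in GB/s."""
    low, high = speedup
    return pcie_gbps * low, pcie_gbps * high


def transfer_seconds(payload_gb, bandwidth_gbps):
    """Seconds needed to move payload_gb at a sustained bandwidth_gbps."""
    return payload_gb / bandwidth_gbps


if __name__ == "__main__":
    payload_gb = 12.0  # hypothetical payload size, chosen for illustration
    low, high = nvlink_bandwidth_range()
    for label, rate in (("PCIe", PCIE_GBPS), ("NVLink 5x", low), ("NVLink 12x", high)):
        print(f"{label:<11} {rate:6.0f} GB/s  {transfer_seconds(payload_gb, rate):.4f} s")
```

Under these assumptions, the claimed range works out to somewhere between 80GB/s and 192GB/s.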

Jen-Hsun Huang called NVLink "the first enabling technology of our next-generation GPU." He pointed out that other solutions could have been used, like increasing the signal power on the GPU (but that would increase energy consumption) or making the bus wider (but that would require a larger GPU package).

The second enabling technology is 3D memory. 3D chip-on-wafer integration increases bandwidth, raises capacity by 2.5x, and improves energy efficiency by 4x, all without requiring additional package size or power. 3D memory and 3D packaging with heterogeneous chips will theoretically increase bandwidth substantially without negatively impacting size and power consumption.

"This is one of the greatest GPUs we've ever made. It incorporates these two enabling technologies. We're testing it rigorously now and we're excited about the results. We should name this chip after a scientist -- Blaise Pascal."

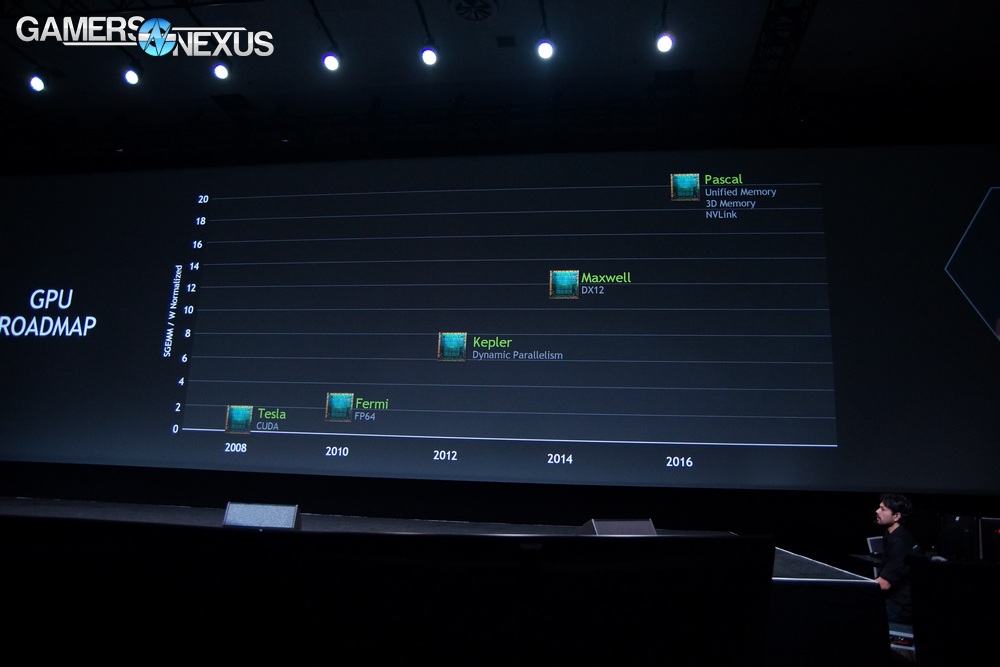

NVidia Pascal Roadmap

The roadmap leading through Pascal now looks like this:

Google Brain & Machine Learning

Huang then dove into machine learning -- the act of a computer learning in the manner a human might -- by way of Google's Brain project, which was exposed to 3 days' worth of YouTube. Probably a dangerous thing. Google Brain realized that, after 3 days of YouTube, the two most important things to recognize are human faces and cat faces. Huang's point was that with GPU-accelerated computing and machine learning, using new NVIDIA tech (what else?), a much cheaper, much more accessible "Google Brain-equivalent" can be achieved to advance scientific communities. This was labeled "Deep Learning with HPC Systems." It reportedly took Google 1000 servers with 16000 CPU cores, worth $5M, to teach its Brain how to recognize cats and human faces; NVidia says that its new technology can drop that down to 3 GPU-accelerated servers with 12 GPUs in total, equipped with 18000 CUDA processor cores.

"The computational density is on a completely different scale: it's 100x less energy, 100x less cost. Because of this breakthrough, any research department, any researcher, any company, can do machine learning at a scale of the project that was done at Google called the Google Brain."

Machine learning will be a topic to pay attention to in the future, but isn't immediately impacting our gaming endeavors. It is a good insight into where the industry will inevitably head, though.
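For a sense of scale, here is a quick sketch that works through the Google Brain comparison using only the figures quoted above: 1000 servers worth $5M versus 3 servers with 12 GPUs and 18000 CUDA cores. The implied cost simply applies Huang's "100x less cost" claim to the $5M figure; it is not a price nVidia announced, and the names are made up for illustration.

```python
# Figures as quoted in the keynote coverage above.
GOOGLE_SERVERS = 1000
GOOGLE_COST_USD = 5_000_000
GPU_SERVERS = 3
GPUS_TOTAL = 12
CUDA_CORES_TOTAL = 18_000
CLAIMED_COST_REDUCTION = 100  # "100x less cost", taken at face value


def summarize():
    """Derive a few ratios from the quoted figures."""
    return {
        "server_ratio": GOOGLE_SERVERS / GPU_SERVERS,
        "gpus_per_server": GPUS_TOTAL // GPU_SERVERS,
        "cuda_cores_per_gpu": CUDA_CORES_TOTAL // GPUS_TOTAL,
        # Implied by the claim, not an announced price.
        "implied_cost_usd": GOOGLE_COST_USD / CLAIMED_COST_REDUCTION,
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value:,.0f}")
```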

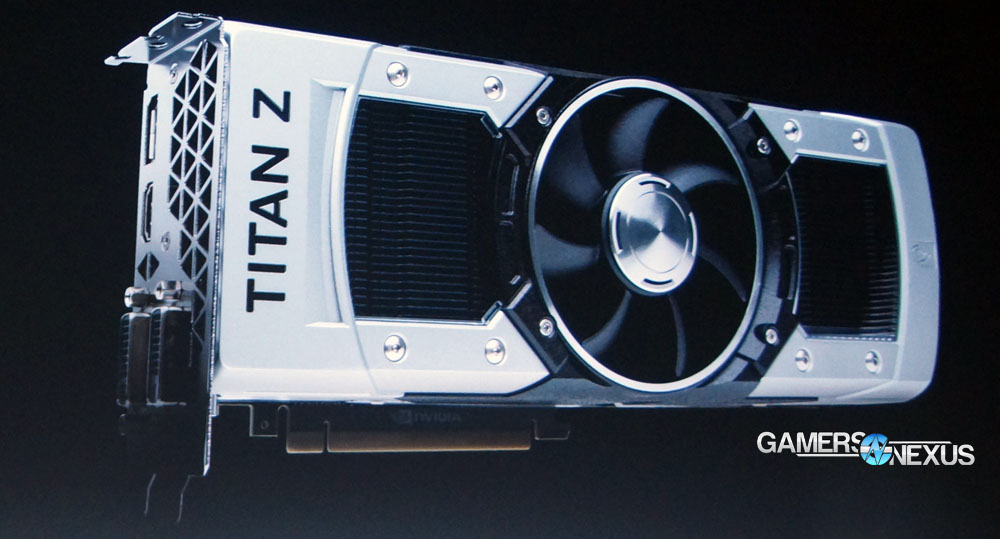

NVIDIA Titan Z Specs, MSRP

Update: There was a misunderstanding somewhere between the presentation and the audience -- the Titan Z will not require the insane amount of power we initially thought it would. We don't know the TDP yet. There are conflicting reports on nVidia's site about the power consumption, so we have removed the TDP spec completely for now and will update ASAP.

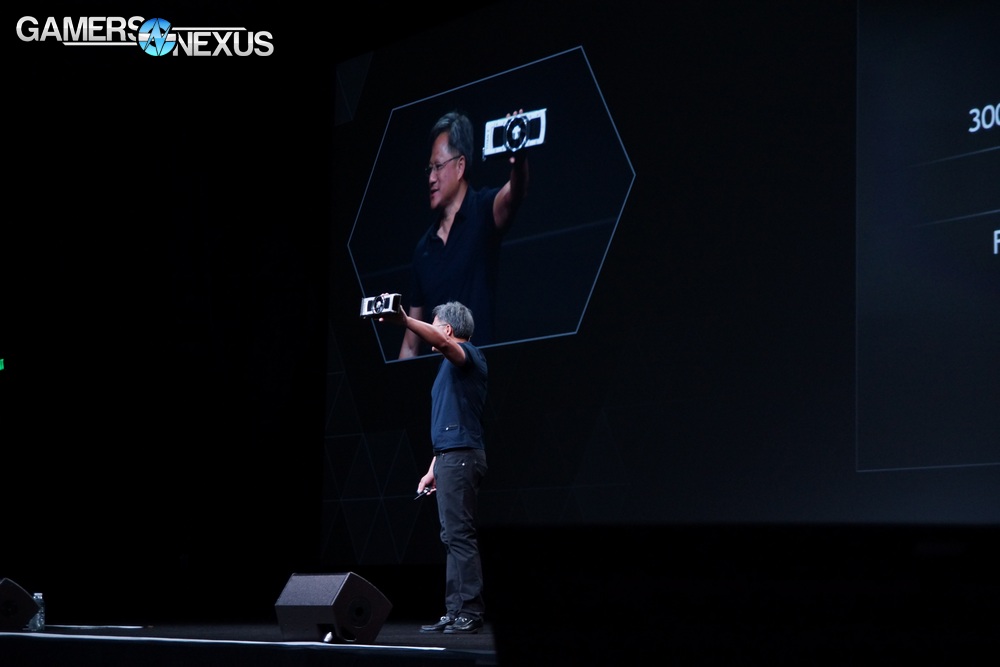

Huang didn't spend too much time on the Titan Z, but he did showcase a prototype of the card and gave us some specifications. The Titan Z remains targeted at the high-end scientific audience and will retain at least double-precision FP functionality. The limited specs we've been given are as follows:

- 5760 CUDA cores

- 12GB memory

- 8 TFLOPS

- $3000

- 2x GK110 GPUs
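To put the listed specs in context, the sketch below derives a few figures from them: cores per GPU, price per TFLOP, and the clock speed the 8 TFLOPS number would imply. The clock estimate rests on an assumption that is not from the keynote, namely that each CUDA core completes one fused multiply-add (counted as 2 floating-point operations) per clock, so treat it as a ballpark rather than a spec.

```python
# Specs as listed above.
CUDA_CORES = 5760
TFLOPS = 8.0
PRICE_USD = 3000
GPU_COUNT = 2

# Assumption (not from the keynote): one fused multiply-add per core
# per clock, counted as 2 floating-point operations.
FLOPS_PER_CORE_PER_CLOCK = 2


def implied_clock_mhz(tflops=TFLOPS, cores=CUDA_CORES,
                      flops_per_clock=FLOPS_PER_CORE_PER_CLOCK):
    """Clock speed (MHz) at which the cores would reach the quoted TFLOPS."""
    return tflops * 1e12 / (cores * flops_per_clock) / 1e6


def price_per_tflop(price_usd=PRICE_USD, tflops=TFLOPS):
    """Dollars per TFLOP at the quoted price."""
    return price_usd / tflops


if __name__ == "__main__":
    print(f"Cores per GPU:   {CUDA_CORES // GPU_COUNT}")
    print(f"Implied clock:   {implied_clock_mhz():.0f} MHz")
    print(f"Price per TFLOP: ${price_per_tflop():.0f}")
```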

The Titan Z is not intended for mainstream consumer or gaming use; its specs are indicative of its target toward the scientific and research community. NVidia demonstrated volumetric fire effects on 32 million voxels, followed up with volumetric smoke, airstream, and water effects, all of which will find their way into games in short order as FLEX advances. We have a full article on FLEX coming up soon. So although the Titan Z might not be meant for you, it has enabled the progression of high-end graphics development -- directly impacting the direction of game engines and devs. An updated Epic Infiltrator demo was used to showcase this, using water simulators, particle simulators, and destruction simulators (tracking force application).

"Simulation and visualization coming together - this is the future of graphics; you're getting a glimpse of it here with Unreal Engine 4 running on Titan Z."

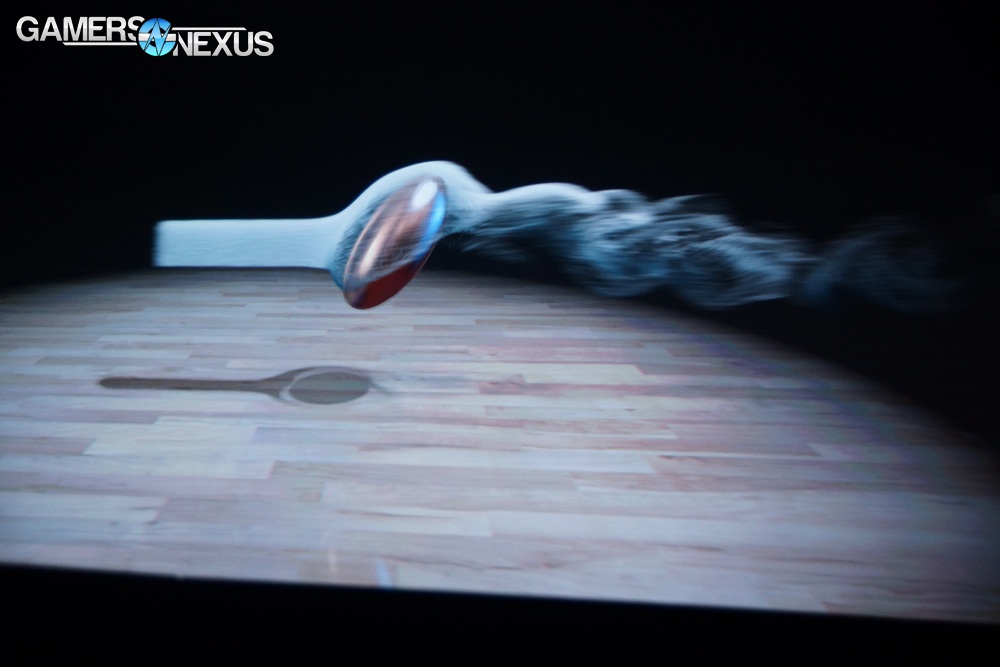

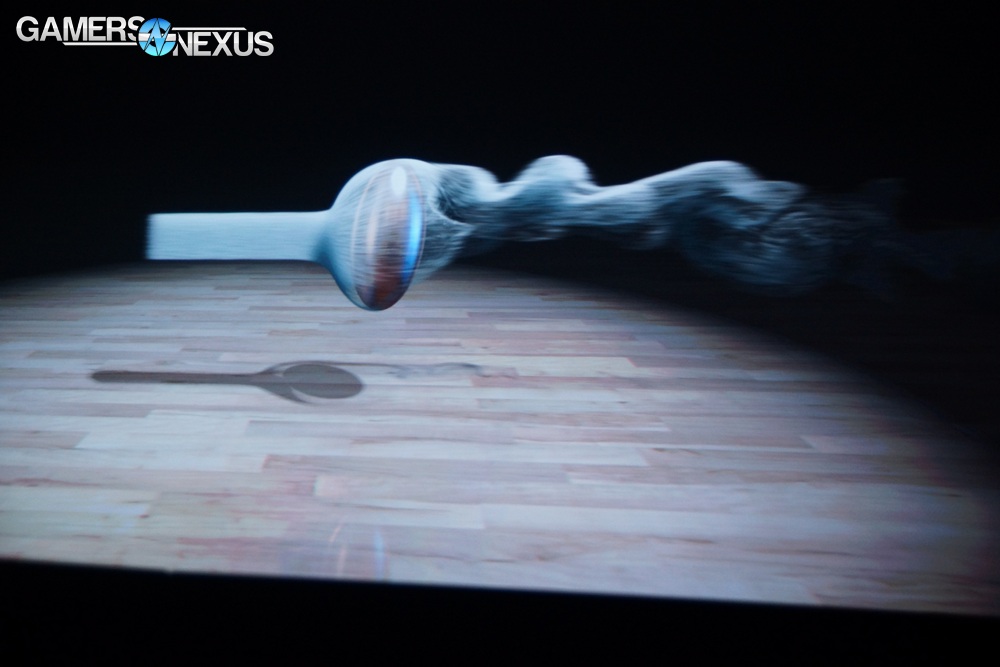

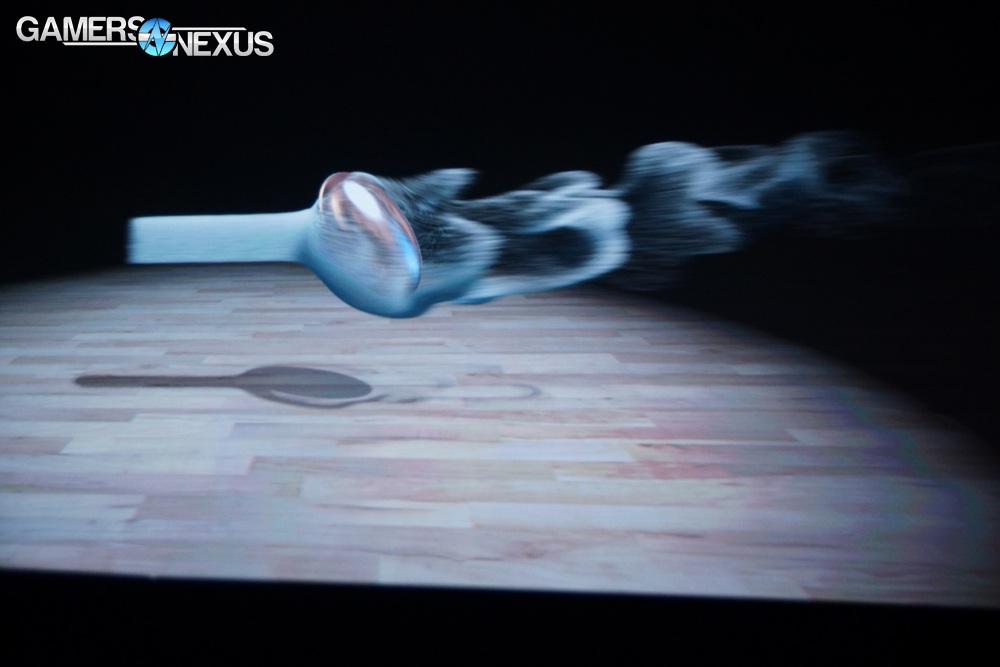

To give an idea of what FLEX is capable of, here's a series of 3 photos in sequence, taken while nVidia rotated a copper bullet around within a stream of fluid (air/sand, basically). You'll notice that the wake changes dynamically with the location of the object, and the stream of fluid morphs around the impassable bullet. Again, more on this very shortly - keep an eye on the front page and our social media.

This is already working its way into games and engines -- including Unreal Engine 4 (which is now very affordable for devs) -- and runs in an optimized manner for gaming systems.

NVidia GRID & GPU in the Cloud

The biggest challenge with GRID has been the latency between the GPU server cluster and the recipient's local system, which is streaming data between itself and the server cluster in order to render remotely. NVidia will be introducing vGPU (Virtual GPU) in partnership with VMware, which is handling the software; vGPU sits on top of GRID as a stack of machines to virtualize computing power for connected users. This can be thought of in a somewhat similar manner to a Virtual Private Server for the web, where each user sees an independent, owned slice of hardware, but the actual zoomed-out stack is shared between potentially hundreds of users. As with the SDK / FLEX information, we've got a video + article pending publication about GRID as it pertains to gaming. vGPU is primarily an enterprise thing right now, but will find its way into gaming eventually.

NVidia’s Core Focus: SDKs, gaming software, and power optimization

NVidia's core focus of the GTC 14 keynote seemed to center on power efficiency of the new HPC and high-end devices (see: Tegra K1 advancements, Titan Z for supercomputing), gaming SDK overhauls for developers (volumetric FX), and Pascal architecture.

We’ll have video and further conference & keynote information online shortly, so keep an eye on our tweets and Facebook page, and subscribe to our YouTube channel.

- Steve "Lelldorianx" Burke.