How VR Works: Frametimes, Warp Misses, and Drop Frames w/ Tom Petersen

Virtual reality has begun its charge to drive technological development for the immediate future. For better or worse, we've seen the backpacks, the new wireless tether agents, the "VR cases," and the VR 5.25" panels. It's all VR, all day. We still believe that, although the technology is ready, game development has a way to go yet, but now is the time to start thinking about how VR works.

NVIDIA's Tom Petersen, Director of Technical Marketing, recently joined GamersNexus to discuss the virtual reality pipeline and the VR equivalents of frametimes, stutters, and tearing. Petersen explained that "warp misses" and "drop frames" (neither term is finalized yet) are responsible for an unpleasant experience in VR, and that stutters have far worse consequences in VR than on a monitor because of the biology involved.

In the video below, we talk with Petersen about the VR pipeline and how it compares to a traditional game refresh pipeline. Excerpts and quotations follow.

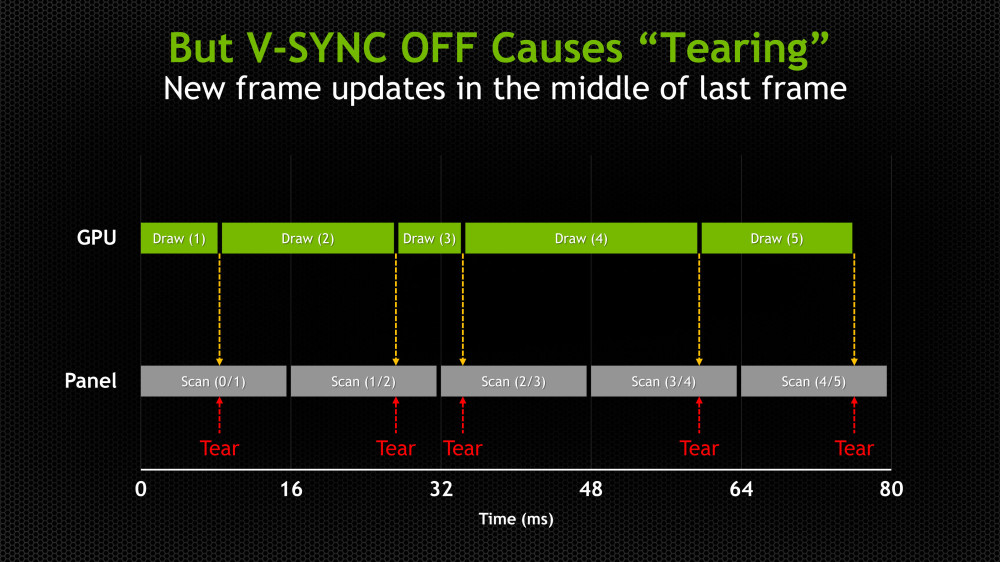

In a traditional pipeline, a frame is rendered and then sent to the display for presentation to the user. Ideally, on a 60Hz display, you're generating a new frame roughly every 16.7ms (or every 8.3ms at 120Hz) to reduce the chance of tearing, which V-Sync eliminates outright. Unfortunately, with V-Sync on, missing one of those intervals means the previous frame is re-displayed, and the user experiences what is colloquially known as a "stutter." We're effectively missing information in this scenario and must wait until the next refresh interval to find out what happens next. Without V-Sync, we end up with tearing instead: a new frame is swapped in while the display is still drawing the previous one, so parts of two frames are "painted" on screen at once, leaving a visible horizontal "tear" where they meet. Here's an example:

Many gamers have gotten used to this. It's a matter of personal preference, but we generally find that people are more willing to tolerate tears than stutters.
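To put numbers on the V-Sync trade-off: the refresh interval is 1000 divided by the refresh rate, so about 16.7ms at 60Hz and 8.3ms at 120Hz. The sketch below is a deliberately simplified, hypothetical model. It assumes double buffering, no render-ahead queue, and that each frame starts as soon as the previous one is presented. It shows how a single slow frame turns into a repeated refresh (a stutter). The function names are illustrative and do not come from any driver or API.

```python
import math


def refresh_interval_ms(hz: float) -> float:
    """Time between refreshes: ~16.7 ms at 60 Hz, ~8.3 ms at 120 Hz."""
    return 1000.0 / hz


def vsync_present_times(render_ms: list[float], hz: float) -> list[float]:
    """Simplified double-buffered V-Sync (assumed model): a frame starts
    rendering when the previous one is presented and appears at the first
    refresh at or after it finishes. No render-ahead queue is modeled."""
    interval = refresh_interval_ms(hz)
    t = 0.0  # time of the last present (starts on a refresh boundary)
    shown = []
    for cost in render_ms:
        done = t + cost
        # snap up to the next refresh; the epsilon absorbs float error
        present = math.ceil(done / interval - 1e-9) * interval
        # a new frame can't go out on the same refresh as the previous one
        present = max(present, t + interval)
        shown.append(present)
        t = present
    return shown


def count_stutters(present_times: list[float], hz: float) -> int:
    """Each extra refresh between two presents re-showed the old frame."""
    interval = refresh_interval_ms(hz)
    stutters, prev = 0, 0.0
    for p in present_times:
        stutters += round((p - prev) / interval) - 1
        prev = p
    return stutters


times = vsync_present_times([10.0, 20.0, 10.0], hz=60)
print([round(t, 1) for t in times])  # [16.7, 50.0, 66.7]
print(count_stutters(times, hz=60))  # 1
```

The 20ms frame misses the 33.3ms refresh, so the first frame is shown twice. That repeated refresh is the stutter described above.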

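Adaptive synchronization technologies, G-Sync and FreeSync, have largely resolved both of these issues by slaving the display's refresh rate to the GPU's frame output. NVIDIA's Fast Sync takes a different approach aimed at framerates above the refresh rate. The display keeps its fixed refresh rate and always shows the most recently completed frame, which avoids tearing without making the GPU wait on the display as V-Sync does.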
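The core idea behind G-Sync and FreeSync, where the display refreshes when the frame is ready rather than on a fixed clock, can be sketched in a few lines. This is a hypothetical model, not a vendor API. It assumes every frame time stays inside the panel's supported refresh range, and it does not model Fast Sync, which works differently.

```python
def vrr_present_times(render_ms: list[float], max_hz: float) -> list[float]:
    """Simplified variable-refresh model (assumed): the panel refreshes as
    soon as a frame is finished, but no sooner than its minimum interval
    (1000 / max_hz). Frame times are assumed never to exceed the panel's
    slowest supported refresh, so no frame is ever repeated."""
    min_interval = 1000.0 / max_hz
    t = 0.0  # time of the last refresh
    shown = []
    for cost in render_ms:
        done = t + cost
        present = max(done, t + min_interval)
        shown.append(present)
        t = present
    return shown


# On a hypothetical 144 Hz panel, a slow 20 ms frame simply delays the
# next refresh instead of forcing the old frame to be shown again.
print(vrr_present_times([10.0, 20.0, 10.0], max_hz=144))  # [10.0, 30.0, 40.0]
```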

VR's pipeline is a little different, but a missed interval produces more or less the same types of negative effects. They're just more drastic, because human biology comes into play. Severe enough stuttering can cause vomiting or loss of balance.

Of this new pipeline, Petersen explained:

"VR is a little bit more complicated because there's more going on. There's the game, which is the application you're running like Raw Data or something like that, and the game's job is to take physical input, do the simulation, and then to generate a frame. But because it's VR, there's lenses and head motion, so the runtime providers -- folks like Oculus or Valve -- are providing another program that I just call the 'runtime.' The runtime runs in parallel with the game, so the game is rendering a texture that's square, effectively, just like a regular PC game, but then that gets read by the runtime. The runtime does a couple things. It does lens correction, which is making the image work with the lenses in the headset, and it's also doing something called 'late warp' or 'reprojection.' The reason that's happening is to deal with the fact that there's a fixed 11ms window, at a refresh of 90Hz, that they're trying to hit all the time.

"Lenses are there to allow your eyes to relax and see the image, but those lenses cause distortion when it's on your [eye], so they actually do undistortion, modifying the square images to make them more curved to get ready for the lenses."
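Petersen's description of undistortion can be illustrated with the radial polynomial model commonly used to describe lens distortion. This is a hypothetical sketch, not Oculus's or Valve's implementation. The coefficients `k1` and `k2` are invented for illustration, the image is a single grayscale channel, and real runtimes rely on calibrated, headset-specific data. For each display pixel, the runtime looks up where to sample the game's square render. Sampling farther out toward the edges squeezes the image into a curved, barrel-like shape that the headset lens then stretches back.

```python
import math


def predistort(x: float, y: float, k1: float, k2: float) -> tuple[float, float]:
    """Radial polynomial model: for a display point (normalized, lens center
    at 0,0), return where to sample the game's square render. Positive k1/k2
    sample farther out toward the edges, producing a barrel-shaped image."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def warp_image(src: list[list[int]], k1: float, k2: float) -> list[list[int]]:
    """Nearest-neighbor resample of `src`; samples that land outside the
    rendered image are left black (0)."""
    h, w = len(src), len(src[0])
    out = [[0] * w for _ in range(h)]
    for j in range(h):
        for i in range(w):
            # pixel center -> normalized [-1, 1] coordinates
            x = (i + 0.5) / w * 2 - 1
            y = (j + 0.5) / h * 2 - 1
            sx, sy = predistort(x, y, k1, k2)
            # normalized -> source pixel index (floor handles negatives)
            si = math.floor((sx + 1) / 2 * w)
            sj = math.floor((sy + 1) / 2 * h)
            if 0 <= si < w and 0 <= sj < h:
                out[j][i] = src[sj][si]
    return out


# k1 and k2 are made-up illustration values, not real headset data.
image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
print(warp_image(image, k1=0.0, k2=0.0) == image)  # True: no distortion
print(warp_image(image, k1=0.22, k2=0.24)[0][0])   # 0: corner falls off the render
```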

The window during which the runtime executes is only a few milliseconds long (it varies by vendor), so that final crunch is something like 3-5ms. That's not much time to pull the frame together and complete the image. Petersen expanded on this:

"Since it's 90Hz, there's an 11ms step and it happens over and over. You've got this window, just a small time before that cycle, that you have to be done with that image. The way it works is the game might start rendering, and it's looking at things like taking a headset position and calculating animation, and it might be doing some network stuff, but it's figuring out what's the image that I'm about to put up on the screen. And the next thing that happens is the runtime, that's going to read that texture, and the runtime is going to do things like warping for the lens, or lens warp, and it's also going to do reprojection, which is sort of retiming. It has to get that all done in time for the next refresh.

"[...] That's the way VR is supposed to work. The problem is, let's say your GPU is running slower or you have the CPU get busy. Sometimes the game runs too long or the runtime runs too long. Now you've already missed the next interval. The runtime is still doing its stuff, but it has to make a decision: What does it want to show in the next frame?"
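The deadline Petersen describes comes down to simple arithmetic: at 90Hz the refresh interval is 1000/90, or about 11.1ms. The sketch below assumes, for illustration only, that the runtime always begins its lens-warp and reprojection pass a fixed window (3-5ms, per the figures above) before each refresh. Actual runtimes schedule this differently by vendor, and the names here are hypothetical.

```python
REFRESH_HZ = 90.0
INTERVAL_MS = 1000.0 / REFRESH_HZ  # ~11.1 ms between refreshes


def runtime_start_ms(vsync_index: int, runtime_window_ms: float) -> float:
    """Latest time the runtime can begin lens warp + reprojection and still
    finish before refresh number `vsync_index` (refresh 0 is at t = 0).
    Assumes a fixed runtime window before every refresh."""
    return vsync_index * INTERVAL_MS - runtime_window_ms


def first_refresh_for(game_done_ms: float, runtime_window_ms: float) -> int:
    """Earliest refresh whose runtime pass can pick up a game frame that
    finished rendering at `game_done_ms`."""
    n = 1
    while runtime_start_ms(n, runtime_window_ms) < game_done_ms:
        n += 1
    return n


print(round(INTERVAL_MS, 1))        # 11.1
print(first_refresh_for(7.0, 4.0))  # 1: done before the runtime starts at ~7.1 ms
print(first_refresh_for(8.0, 4.0))  # 2: missed refresh 1, runtime must cover it
print(first_refresh_for(8.0, 3.0))  # 1: a shorter runtime window leaves more time
```

The last two lines show why the runtime's window matters. Every millisecond it reserves is a millisecond the game no longer has to finish its frame for that refresh.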

This is where we have options, just as there are for more traditional frame output and pacing: should the runtime redisplay the old frame, or attempt to update it? In VR, updating the old frame means adjusting it for the latest head tracking, at the cost of animation updates. That choice keeps head tracking fluid, which reduces the chance of wearer sickness, but you do lose out on game information.
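Updating an old frame for the latest head tracking is what reprojection does. A real runtime applies a full 3D rotation per pixel. This hypothetical sketch reduces that to yaw (turning the head left or right) only, using a small-angle approximation that converts head rotation into a horizontal pixel shift. The function names, the sign convention, and the field-of-view value are assumptions for illustration. Note that the shifted frame's animation is still stale, which is the trade-off described above.

```python
def yaw_shift_pixels(delta_yaw_deg: float, width_px: int, hfov_deg: float) -> int:
    """Small-angle approximation: head rotation times pixels per degree."""
    return round(delta_yaw_deg * width_px / hfov_deg)


def reproject_yaw(frame: list[list[int]], delta_yaw_deg: float,
                  hfov_deg: float) -> list[list[int]]:
    """Re-aim an old frame for head yaw that happened after it was rendered.
    Assumed convention: positive yaw = head turned right, so the scene slides
    left. Columns with no source data are filled with 0 (black). Animation is
    untouched: anything that moved in the game since this frame is stale."""
    w = len(frame[0])
    shift = yaw_shift_pixels(delta_yaw_deg, w, hfov_deg)
    out = []
    for row in frame:
        new_row = [0] * w
        for i in range(w):
            src = i + shift
            if 0 <= src < w:
                new_row[i] = row[src]
        out.append(new_row)
    return out


old = [[1, 2, 3, 4]]
print(reproject_yaw(old, 22.5, hfov_deg=90.0))   # [[2, 3, 4, 0]]
print(reproject_yaw(old, -22.5, hfov_deg=90.0))  # [[0, 1, 2, 3]]
```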

"The headset manufacturers have a whole software architecture that is effectively defined in the runtime. Think of this runtime as Oculus or Vive's -- it's their secret sauce on how do they give a great experience. But no matter what their algorithm is, they've got a fundamental problem [if] the game did not get a frame rendered in time, but they still have to put something on the screen because your headset's running at 11ms. So they've got a couple different strategies. One is reprojection, where you basically take a frame that was rendered by the game earlier, and modify it to put it on the screen again. You can also just do nothing and take the unmodified frame from last time and reshow it. All of these different defects have different performance impacts.

"If you have tearing in VR, it's an absolutely horrible experience. The first thing is that VR is almost always V-Sync on, and that's hard-wired. What that means is the real decision is what do you do at every refresh interval. If you don't show a new frame, you can either reproject an old frame -- which is kind of synthesizing a new frame -- it's the runtime creating something to show. Or you do nothing. When you do nothing, I call that a 'warp miss.' A warp miss means that you replayed an old frame, just like on desktop when you stutter. Everything stays where it was. When you have a warp miss, the runtime didn't get a frame done in time, so the driver just replays an old frame.

"The other thing it does is what I call a 'drop frame.' The runtime was able to take some version of a prior frame, and then modify it and get that thing out in time using the latest head position. As long as you use a current head position and adjust or reproject the prior frame, you get a reasonably good experience. But the animation in this frame that's reprojecting is actually coming from an older frame, so it looks like a dropped frame from an animation perspective.

"When you're reprojecting frames, it's a better experience than when you're missing frames."

VR brings a lot of new concepts, but the core idea of frametimes remains largely the same. Warp misses and drop frames simply become the new language for describing a poor experience. Stuttering still happens and tearing can still happen; we just have new ways of defining those events in VR.
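Petersen's two terms map onto a simple per-refresh decision. This hypothetical sketch reduces each refresh interval to two yes/no facts: did the game finish a new frame in time, and did the runtime finish its pass in time. It then labels the result. Real runtimes make this call with far more information, and the names here are illustrative only.

```python
from collections import Counter
from enum import Enum


class Outcome(Enum):
    NEW_FRAME = "new frame"    # fresh game frame, warped in time
    DROP_FRAME = "drop frame"  # old frame reprojected with the latest head pose
    WARP_MISS = "warp miss"    # runtime missed the refresh; old frame replayed as-is


def classify_refresh(game_frame_ready: bool, runtime_on_time: bool) -> Outcome:
    """Label one refresh interval (~11.1 ms at 90 Hz) using Petersen's terms."""
    if not runtime_on_time:
        return Outcome.WARP_MISS
    if game_frame_ready:
        return Outcome.NEW_FRAME
    return Outcome.DROP_FRAME


def tally(intervals: list[tuple[bool, bool]]) -> Counter:
    """Count outcomes over (game_frame_ready, runtime_on_time) pairs."""
    return Counter(classify_refresh(ready, on_time) for ready, on_time in intervals)


run = [(True, True), (False, True), (True, True), (False, False)]
counts = tally(run)
print(counts[Outcome.NEW_FRAME], counts[Outcome.DROP_FRAME], counts[Outcome.WARP_MISS])  # 2 1 1
```

Tallying outcomes this way mirrors how frametime analysis counts stutters on the desktop. The extra distinction is that drop frames, which still track the head, are the milder failure compared with warp misses.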

We've got a lot more to learn and distill with VR, but that will come in the future. For now, learn more in the video above, where Petersen whiteboards a VR render pipeline.

Editorial: Steve "Lelldorianx" Burke

Camera: Keegan "HornetSting" Gallick

Video Editing: Andrew "ColossalCake" Coleman