Xbox One X FPS Benchmarks (COD, ACO, Destiny 2), Thermals, & Power Consumption


Testing the Xbox One X for frametime and framerate performance marks an exciting step for GamersNexus. This is the first time we’ve been able to benchmark console frame pacing, and we’re doing so with new, in-house software that analyzes lossless gameplay captures. At the very top level, we’re analyzing the pixels temporally to determine whether anything changes between one captured frame and the next. We then run checks to validate those results, followed by additional computation to derive framerates and frametimes. That’s the simplest, most condensed version of what we’re doing. Our Xbox One X tear-down set the stage for this.
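
To illustrate the general idea (not GN’s actual in-house software, which isn’t public), here is a minimal sketch of duplicate-frame analysis on a lossless 60FPS capture. It assumes vsynced output with no tearing, so every captured frame is either a new game frame or an exact repeat of the previous one. The frame format and the names `frame_changed` and `frametimes_from_capture` are hypothetical.

```python
from typing import List, Sequence

# Hypothetical frame format: one captured frame as a flat sequence of pixel values.
Frame = Sequence[int]


def frame_changed(prev: Frame, cur: Frame, threshold: int = 0) -> bool:
    """Return True if more than `threshold` pixels differ between two captured frames."""
    differing = sum(1 for a, b in zip(prev, cur) if a != b)
    return differing > threshold


def frametimes_from_capture(frames: List[Frame], capture_fps: float = 60.0,
                            threshold: int = 0) -> List[float]:
    """Turn a lossless capture into frametimes (ms) for the game's unique frames.

    A unique frame stays on screen for a run of identical captures, so its
    frametime is the run length multiplied by the capture interval.
    """
    if not frames:
        return []
    interval_ms = 1000.0 / capture_fps
    frametimes: List[float] = []
    run = 1
    for prev, cur in zip(frames, frames[1:]):
        if frame_changed(prev, cur, threshold):
            frametimes.append(run * interval_ms)  # previous unique frame ends here
            run = 1
        else:
            run += 1  # same image captured again: no new frame was presented
    frametimes.append(run * interval_ms)  # final run may be cut short by the end of the capture
    return frametimes


# Usage: four unique frames; the third is held for four captures (a 66.67ms frame).
A, B, C, D = [0, 0], [1, 0], [1, 1], [0, 1]
print([round(ft, 2) for ft in frametimes_from_capture([A, A, B, B, C, C, C, C, D, D])])
# → [33.33, 33.33, 66.67, 33.33]
```

A nonzero `threshold` would only matter if the capture path introduced noise; with a truly lossless capture, an exact comparison is enough.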

Beyond this, additional testing includes K-type thermocouple measurements from behind the APU (on the rear side of the PCB), along with power measurements from a logging plugload meter. The end result is three types of chart that, combined, provide a reasonably full picture of the Xbox One X’s gaming performance. As an aside, we discovered an effective Tcase Max of ~85C on the silicon surface, at which point the console shuts down. We were unable to force a shutdown during typical gameplay, but could trigger one by intentionally torturing the APU thermals.

The Xbox One X uses an AMD APU whose GPU has 40 CUs (4 more than an RX 480/580) running at 1172MHz (~168MHz slower than an RX 580 Gaming X). The CPU component is an 8-core Jaguar-based processor (no SMT), the same as on previous Xbox One devices, just at a higher 2.3GHz frequency. As for memory, the device uses 12GB of GDDR5, all shared between the CPU and GPU. The memory runs at an actual clock of 1700MHz, for 326GB/s of memory bandwidth. For comparison, an RX 580 offers about 256GB/s. The Xbox One X, by all accounts, is an impressive combination of hardware that functionally equates to a mid-range gaming PC. The PSU is another indication of this: it’s a 245W supply, at least a few watts of which go to the aggressive cooling solution (a ~112mm radial fan).
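
The 326GB/s figure follows from the memory clock once you factor in the bus width and GDDR5’s data rate. The sketch below assumes two things the paragraph doesn’t state: GDDR5 transfers 4 bits per pin per memory clock (1700MHz becomes 6.8Gbps), and the Xbox One X uses a 384-bit bus. The RX 580 comparison assumes the 8GB model’s 8Gbps memory on a 256-bit bus. `gddr5_bandwidth_gbs` is a hypothetical helper name.

```python
def gddr5_bandwidth_gbs(memory_clock_mhz: float, bus_width_bits: int) -> float:
    """Peak GDDR5 bandwidth in GB/s.

    Assumes the effective per-pin data rate is 4x the memory clock
    (e.g. 1700MHz -> 6.8Gbps per pin).
    """
    data_rate_gbps = memory_clock_mhz * 4 / 1000  # per-pin data rate in Gbit/s
    return data_rate_gbps * bus_width_bits / 8    # whole bus, converted from bits to bytes


xbox_one_x = gddr5_bandwidth_gbs(1700, 384)  # assumed 384-bit bus
rx_580 = gddr5_bandwidth_gbs(2000, 256)      # assumed 8Gbps memory on a 256-bit bus
print(f"{xbox_one_x:.1f} GB/s vs {rx_580:.1f} GB/s")  # → 326.4 GB/s vs 256.0 GB/s
```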

For benchmarking FPS performance, we’re mostly concerned with frametimes, as these better illustrate what’s going on. As with a typical graphics card, the GPU operates with two primary framebuffers: the frontbuffer, which holds the frame currently being displayed, and the backbuffer, where the next frame is being prepared. Consoles and console games lean heavily on Vsync to meet performance targets. Vsync is a flip policy that dictates when the pointers to those framebuffers flip, converting the frontbuffer into the backbuffer (marking it for overwrite) and the backbuffer into the frontbuffer (marking its frame as ready to draw). Many console games are still locked to 30FPS, which means they draw a new frame every 33.33ms. If the console can prepare a frame faster than this (faster than 16.667ms, say), we may end up with a lucky instance of 60FPS throughput. It’s rare, but it does happen: we saw this in Destiny 2 at least three times, where the game had enough headroom to finish a frame sooner than its neighbors, briefly doubling the framerate. We also saw, of course, occasional dips to 15FPS in some titles.
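
A simplified model shows why a 30FPS-locked game jumps straight from 33.33ms to 66.67ms rather than landing somewhere in between. The sketch assumes the game may only flip every `pace_refreshes` refreshes of a 60Hz output, so a frame that isn’t ready misses its flip window and waits for the next one. `displayed_frametime` and `pace_refreshes` are hypothetical names, and the model does not explain Destiny 2’s occasional 16.667ms frames, which suggest that game isn’t strictly pinned to every other refresh.

```python
import math

REFRESH_MS = 1000.0 / 60  # one refresh of a 60Hz output, ~16.667ms


def displayed_frametime(render_ms: float, pace_refreshes: int = 2) -> float:
    """Frametime a vsynced game shows when it may only flip every `pace_refreshes` refreshes.

    pace_refreshes=2 models a 30FPS lock on a 60Hz output; 1 models a 60FPS target.
    A frame that is not ready in time misses its flip window and waits for the next one.
    """
    window_ms = pace_refreshes * REFRESH_MS
    windows = max(1, math.ceil(render_ms / window_ms))
    return windows * window_ms


for render_ms in (20.0, 40.0):
    print(render_ms, "->", round(displayed_frametime(render_ms), 2))
# → 20.0 -> 33.33
# → 40.0 -> 66.67
```

In this model, a frame that takes 40ms to render is shown for 66.67ms (15FPS), not 40ms, because it has to wait for the next 30FPS flip window.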

For today, we’re benchmarking Assassin’s Creed: Origins, Call of Duty: WWII, and Destiny 2.

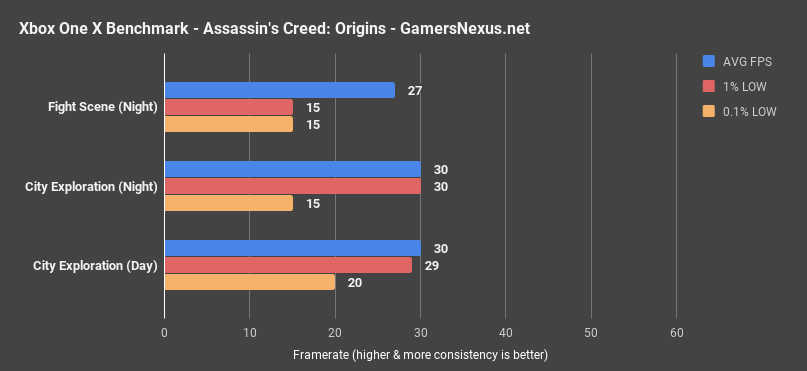

Assassin’s Creed: Origins Xbox One X Benchmarks – 30FPS & Dropped Frames

Starting with Assassin’s Creed: Origins and a standard GN chart, we quickly learned that the game renders at 30FPS, despite the Xbox One X being marketed as the most powerful console on the market. 30FPS works out to a frametime of 33.33ms, and we somewhat regularly dip to 66.67ms, a drop from 30FPS to 15FPS. This is reflected in our results for the 2-minute test window: a 27FPS average, with 1% and 0.1% lows of 15FPS.
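
For readers who want to reproduce this kind of summary from their own frametime data, here is one way to derive an average FPS and 1%/0.1% lows. It is a sketch, not necessarily GN’s exact definition. We assume a "low" is the average of the slowest N% of frametimes converted to FPS; a percentile-based definition gives slightly different numbers. `fps_metrics` is a hypothetical name, and the usage data is illustrative, not from our capture.

```python
from typing import List, Tuple


def fps_metrics(frametimes_ms: List[float]) -> Tuple[float, float, float]:
    """Return (average FPS, 1% low FPS, 0.1% low FPS) for a list of frametimes in ms.

    Assumption: each low averages the slowest fraction of frametimes and converts
    that average to FPS, always using at least one frame.
    """
    if not frametimes_ms:
        raise ValueError("need at least one frametime")
    avg_fps = 1000.0 * len(frametimes_ms) / sum(frametimes_ms)  # frames / total seconds
    slowest_first = sorted(frametimes_ms, reverse=True)

    def low(fraction: float) -> float:
        count = max(1, int(len(slowest_first) * fraction))
        return 1000.0 * count / sum(slowest_first[:count])

    return avg_fps, low(0.01), low(0.001)


# Illustrative data: 90 frames at 30FPS and 10 frames that dropped to 15FPS.
sample = [1000 / 30] * 90 + [1000 / 15] * 10
avg, low_1, low_01 = fps_metrics(sample)
print(f"avg {avg:.1f}FPS, 1% low {low_1:.1f}FPS, 0.1% low {low_01:.1f}FPS")
# → avg 27.3FPS, 1% low 15.0FPS, 0.1% low 15.0FPS
```

Computing the average as total frames over total time, rather than averaging per-frame FPS values, keeps long frames from being underweighted.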

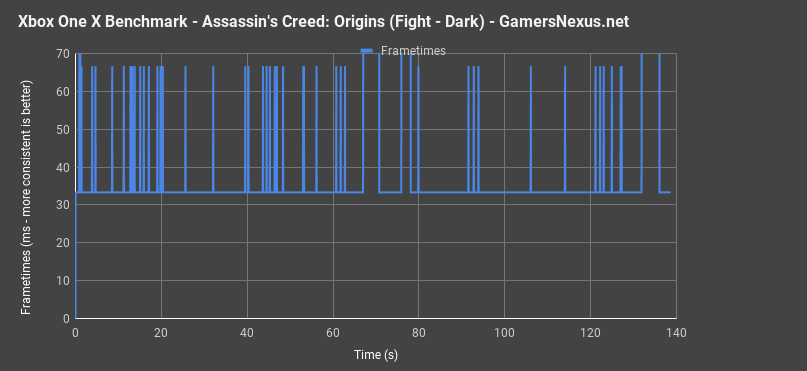

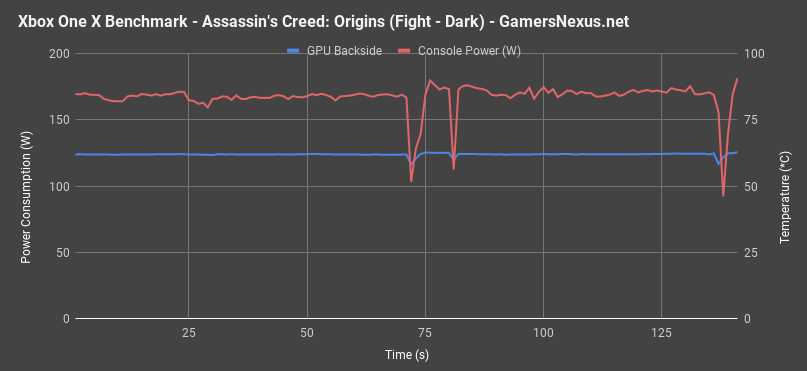

Our next measurements are presented as over-time charts. For the video version, we’ll highlight which is which: thermal benchmarks, using a thermocouple mounted to the back side of the APU; frametime benchmarks, using our new software; and power benchmarks, using a logging plugload meter. These are shown as individual charts in this article, but the video also provides real-time playback of gameplay, making it easier to see how dips and spikes in the footage correspond to those in the graphs.

Also note that our on-screen display has a marker for the present second, which we’ll highlight, along with markers for what’s coming up in the next few seconds and what has passed. This rolling graph lets you look at what’s coming up, watch the gameplay footage for any hiccups or stutters, and then look back at the graph.

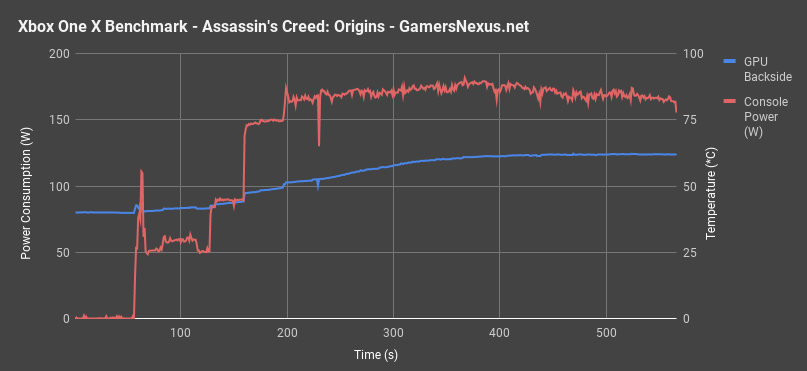

You’ll see that our frametimes are plotted as bouncing between 33ms and 66ms, or 30FPS and 15FPS, depending on the load. To be fair to Assassin’s Creed: Origins, the game does not regularly drop to 15FPS – it seems to primarily do this during combat scenes, but maintains a flat 30FPS during city wandering, exploration, or other non-combat scenes. You’ll see the thermals, power consumption, and framerate plummet whenever we die – that’s because we’ve hit a black loading screen, so nothing is being rendered. This is expected behavior.

The Xbox One X holds a roughly steady-state 62 degrees Celsius APU backside temperature under gaming workloads (after our thermal paste improvement), which probably puts the front-side silicon temperature closer to 70C. We found that Tcase max is about 85 degrees Celsius before a thermal shutdown event, and we were never able to trigger a thermal shutdown during gameplay. There’s no thermal throttling going on here, either: no temperature spikes align with the framerate drops. It’s just combat creating more work.
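
The "no temperature spikes align with framerate drops" check can be sketched as a simple coincidence test between the two logs. This assumes both logs are sampled once per second and already aligned in time, with the frametime log reduced to the worst frametime in each second. The function name `aligned_spikes` and both thresholds (a 2C rise, a 34ms frametime) are hypothetical choices for illustration.

```python
from typing import List


def aligned_spikes(temps_c: List[float], worst_frametime_ms: List[float],
                   temp_jump_c: float = 2.0, frametime_limit_ms: float = 34.0) -> List[int]:
    """Return the seconds where a temperature jump and a frametime spike coincide.

    A temperature "jump" is a rise of at least `temp_jump_c` over the previous sample;
    a frametime "spike" is any second whose worst frame exceeds `frametime_limit_ms`.
    """
    hits = []
    for t in range(1, min(len(temps_c), len(worst_frametime_ms))):
        temp_jump = temps_c[t] - temps_c[t - 1] >= temp_jump_c
        frame_spike = worst_frametime_ms[t] > frametime_limit_ms
        if temp_jump and frame_spike:
            hits.append(t)
    return hits


# Frametime spikes with a flat temperature: nothing lines up, so no sign of throttling.
print(aligned_spikes([61, 62, 62, 61, 62], [33.3, 66.7, 33.3, 66.7, 33.3]))  # → []
```

An empty result is consistent with drops caused by workload (combat) rather than heat; a real throttling investigation would also want clock-speed data, which we don’t log here.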

Power consumption is at around 170W, with a peak load of 181W.

Jumping back to frametimes for a second, we manually tab through one of the sequences that drops frames in our video component (see the video above). Because the game renders at 30FPS and we captured at 60FPS, every other captured frame should be a repeat of the one before it. Every now and then, though, a frame is held for two extra captures. That’s what produces the 66ms latency spikes, and it’s most common in combat. There are a few examples of this during the gameplay sequences; they aren’t very common, but they’re common enough to teeter on noticeable, even to console players.

Using some pixel counting, we were able to determine that the captured 1080p output was actually rendered at 1080p, but we haven’t yet tested on a 4K display. We’ll be doing that soon. As of now, though, we’re still dropping frames off of the 30FPS baseline, even at the reduced 1080p output. Assassin’s Creed: Origins simply does not run very well on any device, including PC, and that appears to hold true on the Xbox One X. It works; it just doesn’t hold a steady 30FPS. It’s close, though: close enough that the drops aren’t always noticeable, and they mainly become visible during heavy combat scenes with numerous actors on-screen.
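
Pixel counting generally works by inspecting a hard, un-antialiased edge. On a near-horizontal edge, each stair step corresponds to one rendered row, so comparing the number of steps to the number of output pixels the edge climbs gives the ratio between rendered and output resolution. The sketch below shows that general technique, not our exact procedure; `estimate_native_height` is a hypothetical helper, and anti-aliasing or dynamic resolution scaling would make real-world counts messier.

```python
def estimate_native_height(output_height: int, vertical_span_px: int, step_count: int) -> float:
    """Estimate rendered height from an aliased, near-horizontal edge.

    Assumes each stair step on the edge is exactly one rendered row, so
    rendered rows per output pixel = step_count / vertical_span_px.
    """
    if vertical_span_px <= 0:
        raise ValueError("vertical span must be positive")
    return output_height * step_count / vertical_span_px


# An edge climbing 40 output pixels in 20 steps on a 2160p output suggests 1080p rendering.
print(estimate_native_height(2160, 40, 20))  # → 1080.0
```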

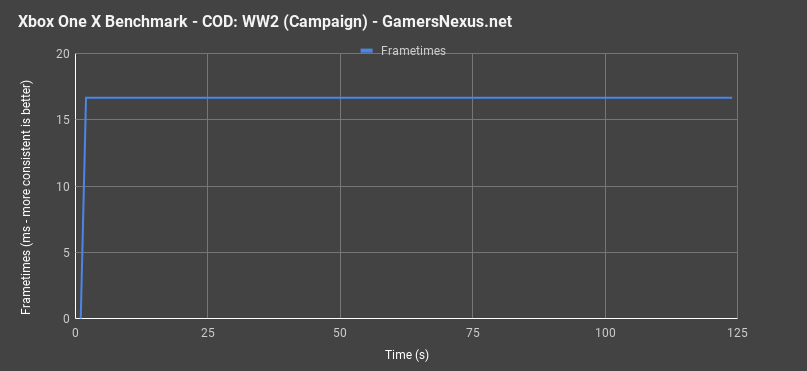

Call of Duty: World War II Xbox One X FPS Benchmarks

For perspective, let’s look at what a perfectly flat framerate looks like on a console.

Call of Duty’s campaign in-game cinematics managed to render a perfectly flat 16.667ms frame latency, which means we’re running at a constant 60FPS for the campaign.

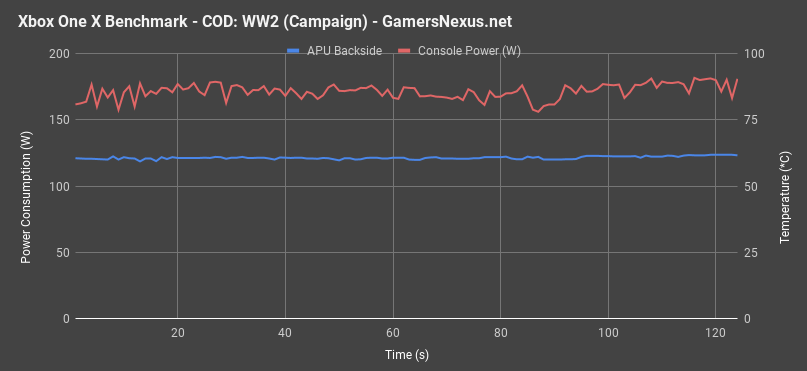

As for thermals, we dither between 60 and 61 degrees Celsius, which means we’ve reached steady-state.
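
One way to make "we’ve reached steady-state" concrete is to look for a stretch of samples that stays inside a narrow temperature band. The sketch below is a minimal, hypothetical version: `steady_state_start`, the 30-sample window, and the 1C band are our assumptions, not published GN criteria.

```python
from typing import List, Optional


def steady_state_start(temps_c: List[float], window: int = 30,
                       band_c: float = 1.0) -> Optional[int]:
    """Return the first index whose next `window` samples all lie within `band_c`.

    Returns None if no such stretch exists (including when the log is shorter
    than `window`).
    """
    for start in range(len(temps_c) - window + 1):
        chunk = temps_c[start:start + window]
        if max(chunk) - min(chunk) <= band_c:
            return start
    return None


# A warm-up ramp from 40C to 59C, then dithering between 60C and 61C.
log = list(range(40, 60)) + [60, 61] * 20
print(steady_state_start(log))  # → 20
```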

Power consumption jumps around between 157W and 181W, averaging around 173W.

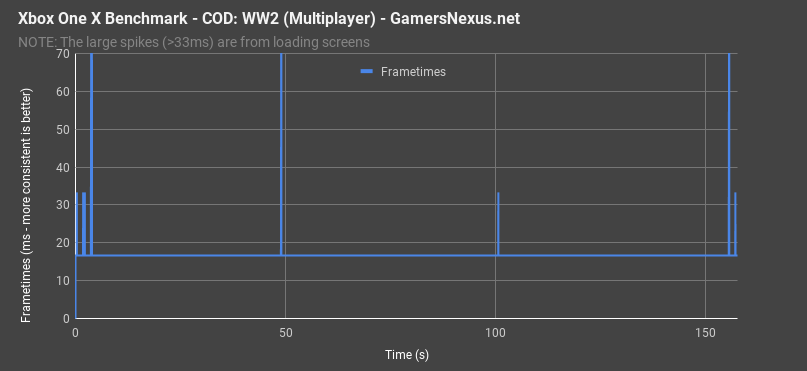

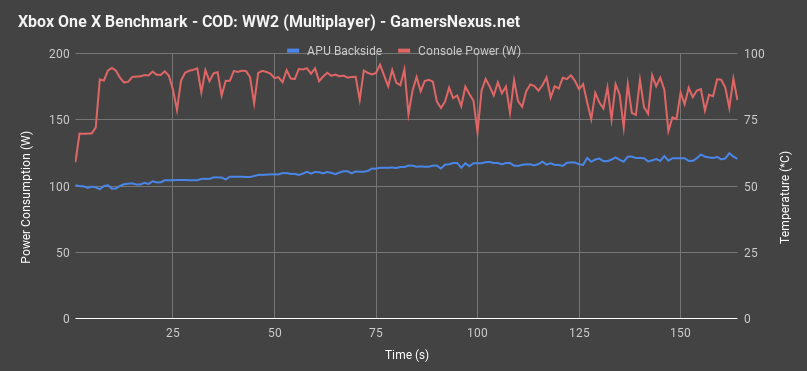

Here’s a multiplayer scene from Call of Duty, also rendering at a flat 60FPS. Thermals plot about the same, peaking at around 60-62C, and power is similar to the previous run. The occasional spikes in frame latency, or apparent dips in performance, align with the kill cam. We see 1-2 dropped frames when transitioning from the kill cam to spawning, which isn’t noticeable during regular play and only becomes apparent when tabbing through each frame. As you can see in our video, one of those repeated frames lands right before the kill cam, which means the vsync window was missed and the previous frame was displayed again. Fortunately, it’s not game-critical at this point in the respawn period.

Call of Duty seems to maintain a steady 60FPS in this configuration.

Destiny 2 Xbox One X FPS Benchmarks

We tested three scenes for Destiny 2, but only two will make it into this content.

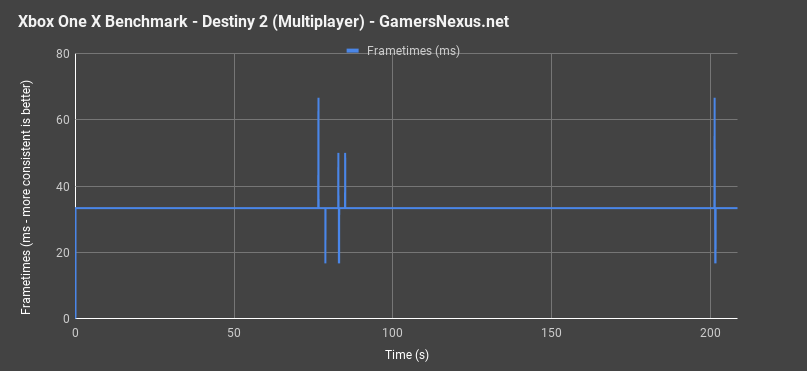

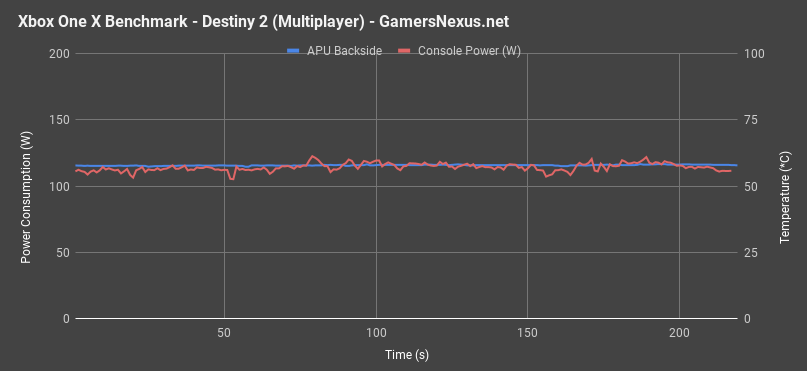

The first is our multiplayer scene: this is the first point at which other players can accompany you in the game, and it’s one of the bigger battles. It’s also the only scene where frametimes both spiked and dropped, ranging from 16ms to 66ms. The average was 33.3ms (30FPS), with four repeated frames. Of 6300 frames, that’s 0.06%, so it’s insignificant and not noticeable during gameplay. Oddly, a few frames also render as fast as 16.667ms, an effective 60FPS, but that only happens three times.
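
Grouping frametimes by how many 60Hz refreshes each frame was held for makes figures like this easy to tally: 1 refresh is 16.67ms, 2 is 33.33ms, and 4 is 66.67ms. The helpers `frametime_buckets` and `share_percent` are hypothetical names for this sketch; only the 4-of-6300 figure comes from our Destiny 2 run.

```python
from collections import Counter
from typing import Iterable

REFRESH_MS = 1000.0 / 60  # one refresh of a 60Hz output


def frametime_buckets(frametimes_ms: Iterable[float]) -> Counter:
    """Count frames by the number of 60Hz refreshes they were held on screen."""
    return Counter(round(ft / REFRESH_MS) for ft in frametimes_ms)


def share_percent(count: int, total: int) -> float:
    """Percentage of `total` frames represented by `count`."""
    return 100.0 * count / total


print(frametime_buckets([1000 / 60, 1000 / 30, 1000 / 30, 1000 / 15]))
# → Counter({2: 2, 1: 1, 4: 1})
print(f"{share_percent(4, 6300):.2f}%")  # four repeated frames out of 6300 → 0.06%
```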

We can tab through a few examples of repeated frames (in the video). Again, they’re not common, and it basically requires manual scrubbing of footage to visually notice them. Still, the Xbox One X does not always offer enough power to render every single frame and hit the V-sync target.

Here’s the interesting thing about this chart: power consumption is around 110W, which leaves plenty of headroom (~100W) for more processing. The game seems like it could run at 60FPS at least some of the time; perhaps it just wasn’t crossing the threshold often enough to make the cut. It’s odd that so little of the available power is being used here.

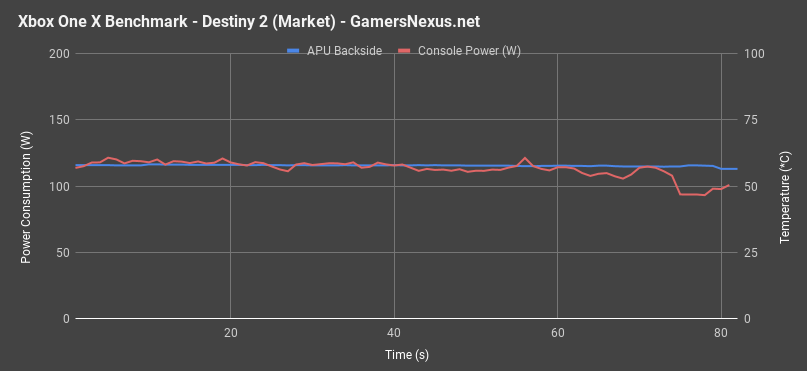

Looking at our next scene, the market, we never encounter any frame latency spikes. It’s a constant 33.33ms for the entire playthrough, giving us a steady, if unimpressive, 30FPS. The market was the most intensive scene we encountered when benchmarking GPUs on PC, but the Xbox One X manages to hold on through it.

Xbox One X Power & Temperature Burn-In

Above is a look at power and thermals from an idle state, which ramps into a burn-in. There’s no capture footage for this. Note also that we’re using our new thermal paste application, not the stock stuff – so it’s going to be better, as the stock paste is quite bad.

With the console powered off and in an effective sleep state, the device pulls between 0 and 2W from the wall. As we ramp into an Assassin’s Creed: Origins workload, we see the initial power-on spike at 110W, then about 60W of draw during the menu, then a step all the way up to 170-180W of draw in the game.

Thermals during this time start at 40 degrees Celsius immediately upon power-on, then slowly ramp to 62 degrees for the backside APU temperature.

Conclusion & Preventing Xbox One X Overheating

First off, the Xbox One X dumps heat out the back. Its cooler is a glorified reference blower design for a GPU, except an order of magnitude larger. We found that, in its “instant on” mode, the console was burning a constant 0-2W, and somehow sustaining ~50-52C when “off.” That is to say, the console was told to shut down, but kept operating at high enough power loads to generate 50-52C readings on our rear-mounted thermocouple. In a room with an ambient of 26-28C, this is clearly impossible without some active processing going on (a truly “off” device would settle at room temperature after time to reach steady state). All that’s to say that users of the Xbox One X should position it in an open-air environment. Don’t cram the thing into a cabinet or other tight quarters: it’ll run hot, and it won’t age well.

As for gaming, Assassin’s Creed: Origins is the worst example of dropped frames. The game repeats several of its in-combat frames and, although this isn’t always noticeable to the player, the more intensive combat scenarios can start to feel ever-so-slightly stuttery. It’s not much, but it’s there. Otherwise, the game holds 30FPS.

Call of Duty: World War II is flawless in its frame delivery. The only dropped frames occur during kill cam/spawn transitions, and they are both irrelevant to the player experience and unnoticeable. The game also outputs a constant 60FPS.

Destiny 2 largely holds a 30FPS average, but sometimes jumps between 15FPS, 30FPS, and 60FPS, depending on the scenario. The 60FPS cycles show up as two back-to-back new frames in our 60FPS capture, whereas the 30FPS cycles show up as the usual alternating pattern of new and repeated frames. Drops add further repeated frames. For the most part, it’s difficult to get this game to drop frames.

The Xbox One X is exceptionally well built in its overall assembly, and it’s the closest console to a PC that’s yet been released. That said, don’t expect 60FPS in every title: two of the three we tested run at 30FPS. We’ll do more testing on this going forward. Remember that this is GN’s first serious attempt at console FPS benchmarking, so we still have a lot of ground to cover.

Editorial, Testing: Steve Burke

Research & Development: Patrick Lathan

Video: Andrew Coleman