System building is an exercise in both education and enthusiasm -- as the core principles of system assembly become second nature, the enthusiast approach to commanding more power from hardware is the next fitting evolution. Overclocking is a part of this process.

In this overclocking primer, we'll cover the fundamentals: what overclocking is, the principles behind it, what it nets you, and a brief tutorial. This is a top-level guide, so those who already have a firm grasp of the basics may not find any new information here; the goal is to provide a solid foundation for your future overclocking exploits. You won't find CPU-specific OC advice in this guide, but you can certainly ask for it below or in our forums!

Let's dive in.

What is Overclocking?

Overclocking is the practice of coaxing more performance out of your components (primarily the CPU, RAM, or GPU) by raising multipliers, base clock rates (BCLK), voltage, and other settings. With a bit of time and a few keystrokes, overclocking can effectively unlock a better product. Some CPUs have locked settings (such as the capped multipliers on non-K series Intel CPUs), but they can normally still be pushed at least slightly beyond their stock settings, even if that requires third-party software or unlocking motherboards. The reasoning for "locked" components is explained below.

Why is Overclocking Even Possible?

To achieve the best yield per wafer (read about the silicon die process here), chip manufacturers determine the speeds at which the chips currently being fabricated are reliably stable. To use memory as an example, Kingston previously explained its extensive burn-in process, which bins memory chips by their highest stable speeds (1600MHz, 1866MHz, etc). Fewer chips in this process will bin out as natively higher-speed modules, because reliability decreases at higher frequencies. As the price of a chip is largely dictated by the die yield per wafer (more good dies per wafer means more supply and lower prices), companies aim to output more dies at 'safer' specs. In doing so, they opt for an overall more reliable product at the expense of speeds that may well be lower than the chip's full potential. That's where you come in as an overclocker: unveiling the full potential of your chip.
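To make the binning idea concrete, here is a minimal Python sketch. The bin speeds and the "measured" per-chip maximum stable speeds are hypothetical numbers chosen for illustration, not Kingston's actual process; the point is only that a chip is sold at the highest bin it passes, and whatever margin lies between that bin and its true limit is the headroom an overclocker can go after.

```python
# Hypothetical speed bins (MHz), fastest first. Real bins and burn-in tests vary by vendor.
SPEED_BINS = [1866, 1600, 1333]


def bin_chip(max_stable_mhz):
    """Return the fastest bin this chip passes, or None if it fails them all."""
    for speed in SPEED_BINS:
        if max_stable_mhz >= speed:
            return speed
    return None


def headroom_mhz(max_stable_mhz):
    """Margin between a chip's true limit and the speed it is sold at."""
    rated = bin_chip(max_stable_mhz)
    return None if rated is None else max_stable_mhz - rated


# Hypothetical maximum stable speeds measured during burn-in.
for measured in [1702, 1910, 1820, 1350, 1200]:
    print(measured, "MHz -> sold as", bin_chip(measured), "| headroom:", headroom_mhz(measured))
```

In this toy run, the 1820MHz chip ends up sold as a 1600MHz part with 220MHz of untapped headroom, which is exactly the situation described above.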

A silicon wafer! This is where your CPU dies are sliced.

It's all about manufacturing toward the lowest-common (stable) denominator, and ensuring the largest percentage of stable products for the end-user.

This is why overclocking is possible: chances are, the silicon dies behind your CPU, memory, or GPU have sizable performance headroom, but until you push the chip to its highest stable capabilities, that headroom goes untapped.

It's highly likely that the very chip you're operating on right now is capable of higher performance levels, but was binned-out to the lowest stable specification determined by the factory.

If you want the most headroom for overclocking, look into overclocking-ready or unlocked CPUs (Intel uses the "K" suffix to denote unlocked models; AMD's "Black Edition" CPUs have traditionally had great flexibility, although we've found that even AMD's entry-level CPUs have reasonable OC capabilities).

When is overclocking useful? Why should I overclock my PC?

The most important question in the entire process is whether or not overclocking is "worth it" to you. Hopefully, if only because it's fun to play with hardware, the answer is an easy 'yes,' no matter its practicality.

Every ounce counts when performing intensive tasks (transcoding/rendering featured here).

However fun it may be to simply play with hardware, overclocked components also have many practical uses. Let's go over a few of them:

Faster Processing: This is particularly important for anyone using multithreaded applications (compilers, encoders, renderers). Some programs, such as those that render edited videos, don't suffer from the same utilization limitations as the typical game; where a game can only use so much of a CPU's resources (relying more on video hardware), rendering applications will take as much CPU and memory as they can get. In these cases, the faster the hardware, the faster encoding tools can transcode or render video output. A magnetic hard disk drive may well become the limiting factor, but that's a different subject. Video editing suites such as Adobe Premiere will also use video hardware for number-crunching, but it never hurts to raise the usable clock speed of any hardware handling intense applications.

Increased Usable Life: As components near the end of their usable life for your purposes, there's not much that can be done to prevent their inevitable retirement. Overclocking won't eliminate the need for replacements, but it can stave off those looming expenses for a little longer. This assumes, of course, that significant hardware advances made in the meantime (which will undoubtedly exist by the time you're desperate to extend usable life) are not required in daily use. DirectX is an example on the GPU end -- no matter how far you push your GPU, and no matter how many aftermarket heatsinks you mount on it, it's not going to be able to run DirectX versions it does not natively support.

With this said, a good CPU can last for years. Once things start to slow down (or once you want more), mount a sturdy, reusable aftermarket cooler on the processor and raise the speeds until satisfied. Keep in mind that over-volting your CPU can have the opposite effect on usable life: an early demise.

Is overclocking useful for gaming?

This is a tough question to answer -- whether overclocking yields better gaming performance depends largely on two key factors: the game's ability to take advantage of the added speed, and the type of component overclocked.

A GPU that is pumped-up a bit through EVGA Precision or MSI Afterburner may help push your graphics settings from "medium-high" to "high," if you've been sitting on the razor's edge between the two. Turning a 2.8GHz CPU into a 4GHz CPU will not produce linear improvements in gaming quality for most games.

Some games, like Civilization and the Total War series, are more CPU-intensive than their peers, and so it is likely that bolstered CPU performance will yield a more noticeable enhancement than in, for instance, TERA (as one of our users noted) or Battlefield (both of which favor the GPU more than many other games, from our testing).
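A simplified way to see why CPU clock gains don't translate linearly into frame rates: if the CPU and GPU work on frames in a pipeline, the slower of the two sets the frame time. The sketch below assumes that model, assumes CPU work per frame shrinks in proportion to clock speed (an optimistic assumption), and uses made-up frame times for one GPU-bound and one CPU-bound game.

```python
def frame_time_ms(cpu_ms, gpu_ms):
    # Simplified pipelined model: the slower stage sets the pace.
    return max(cpu_ms, gpu_ms)


def fps(cpu_ms, gpu_ms):
    return 1000.0 / frame_time_ms(cpu_ms, gpu_ms)


def fps_after_cpu_oc(cpu_ms, gpu_ms, old_ghz, new_ghz):
    # Optimistic assumption: CPU time per frame scales inversely with clock speed.
    return fps(cpu_ms * old_ghz / new_ghz, gpu_ms)


# Hypothetical frame times (ms) for a 2.8GHz -> 4GHz CPU overclock.
gpu_bound = (10.0, 16.0)   # CPU 10ms, GPU 16ms per frame
cpu_bound = (20.0, 12.0)   # CPU 20ms, GPU 12ms per frame
for name, (cpu_ms, gpu_ms) in [("GPU-bound", gpu_bound), ("CPU-bound", cpu_bound)]:
    before = fps(cpu_ms, gpu_ms)
    after = fps_after_cpu_oc(cpu_ms, gpu_ms, 2.8, 4.0)
    print(f"{name}: {before:.1f} fps -> {after:.1f} fps")
```

Under these assumptions, the same ~43% clock increase does nothing for the GPU-bound case and lifts the CPU-bound case from 50 to about 71 fps, mirroring the CPU-heavy vs. GPU-heavy contrast above.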

As lame as it may be, the answer here is "it depends." It depends on the game, on the hardware involved, the age of the hardware, whether or not newer technologies (shader technologies, DirectX, caching systems, filtering protocols, etc.) are required and present, and many other factors. Feel free to post on our forums for in-depth support on a per-game basis!

OK, OK -- I get it. How do I overclock my CPU, GPU, or memory? What's the methodology?

While this guide won't go into numeric specifics for overclocking your components (primarily because they vary with each hardware configuration), we can provide a solid starting point to help you understand what you're looking at.

Additionally, you may want to look into an aftermarket heatsink for more extreme overclocking.

We've provided a video to help speed up your understanding, though you'll get more depth in the remainder of the article:

If you're looking for more info on GPU overclocking's fundamentals, check out this video:

Core Concept - CPU Overclocking, Voltages, Multipliers, vCore, Clock Speeds

Overclocking's fundamentals remain largely constant regardless of the component you're working with. At the end of the day, the objective is to make things faster. Modern BIOS interfaces (along with their newer UEFI and Visual BIOS counterparts) are equipped with guided overclocking features; MSI's OCGenie and ASUS' AI Tweaker are good examples, but these rarely achieve the balance of maximum performance and stability that manual tweaking will.

If we look at a more contemporary example, here's what your standard 4GHz Ivy Bridge CPU would look like:

I'll primarily summarize CPU overclocking since it's generally the most desired. GPU overclocking is the simplest and is covered in the video above.

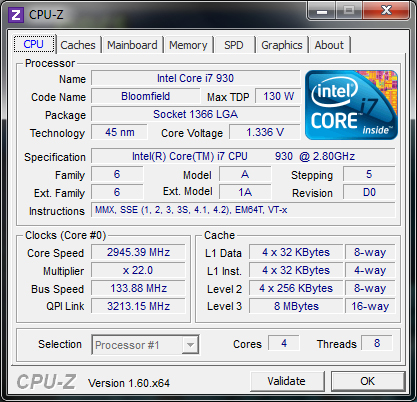

If you read our Benchmark Your PC Like a Pro guide, you've heard of CPU-Z. If not, go grab it now. It's quick to install, free, and indispensable for system diagnostics. You'll need this in a moment.

Modern CPUs operate from a base clock rate (BCLK). CPU-Z lists this metric as the "Bus Speed," found in the lower-left area of the "Clocks" section. A very brief look at microprocessor architecture reveals that CPUs have internal and external clocks; the internal clock is the product of the multiplier and the external clock. The external clock is what CPU-Z identifies as the "Bus Speed," or the aforementioned base clock.

It's a bit more complex than this, of course, but for purposes of keeping this guide as the promised top-level primer, we'll boil it down to this simplified and practical equation:

External Clock Rate (BCLK) * CPU Multiplier = Core Speed ("Internal Clock Rate")

Let's take my first-generation Intel Core i7-930 (Nehalem) CPU as an example.

In this screenshot of my CPU-Z readout, the CPU reports its bus speed as 133.82MHz, and my multiplier is x22. 133.82MHz * 22 is roughly equal to the core speed listed above the multiplier (the operating frequency), which computes to ~2944MHz (2.944GHz). The reported core speed will vary with CPU utilization when Turbo Boost is active.

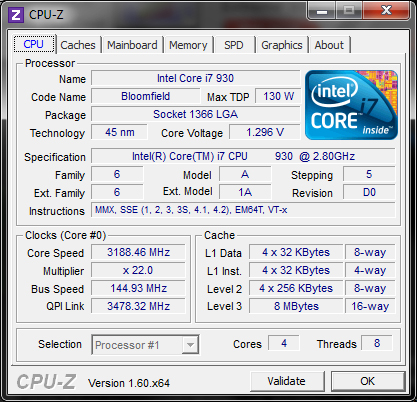

Now, with some changes, you can see in this screenshot that I've very slightly increased the base clock to 145MHz (on first-generation i7s, the base clock was the dominant overclocking lever). Multiplying the new 145MHz BCLK by the existing 22x multiplier (the max multiplier on this chip) gives an operating core speed of roughly 3190MHz, or ~3.2GHz. This is how you baby-step into the bigger OC leagues.
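The arithmetic above can be checked with a few lines of Python. This is just the simplified BCLK * multiplier equation applied to the i7-930 figures; the percentage line and the Ivy Bridge-style 100MHz * 40 example are additions to show how modest the first baby step is and how a 4GHz chip arrives at its speed.

```python
def core_speed_mhz(bclk_mhz, multiplier):
    """Simplified equation: external clock (BCLK) * CPU multiplier = core speed."""
    return bclk_mhz * multiplier


stock = core_speed_mhz(133.82, 22)  # i7-930 stock readout
oc = core_speed_mhz(145, 22)        # BCLK raised to 145MHz, same 22x multiplier
gain_pct = (oc / stock - 1) * 100
print(f"stock ~{stock:.0f} MHz, overclocked ~{oc:.0f} MHz, +{gain_pct:.1f}%")
# prints: stock ~2944 MHz, overclocked ~3190 MHz, +8.4%

ivy = core_speed_mhz(100, 40)  # Ivy Bridge-style 100MHz BCLK at a 40x multiplier
print(ivy)  # 4000
```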

A note on Ivy Bridge CPUs: you will find that increasing multipliers is the best way to maintain stability while achieving faster processing speeds. Nehalem architecture is now dated, and Intel has shifted focus to keeping base clock speeds low (for lower heat and power consumption; Ivy Bridge runs a 100MHz base clock), but since multipliers can now easily push into the 40s, you've got quite a bit of room to play.

AMD CPUs still allow HyperTransport (HT) overclocking on top of CPU ratio/multiplier overclocking.

Here's where we use that knowledge:

Increasing the multiplier will increase the core speed, but risks destabilization.

However, in my case - and in the case of several other (locked) modern CPUs - the multipliers are capped fairly low. If you find yourself hitting a multiplier wall, it may be possible to push the operating frequency beyond the multiplier limitation by additionally increasing the BCLK (base clock). This can cause great instability and potentially overheating issues, so please seek out advice on your specific CPU before doing so.
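If you do go the BCLK route, the same equation run in reverse tells you how far the base clock has to move. A minimal sketch, with a hypothetical 3.6GHz target for a chip capped at 22x; keep in mind that on many platforms other clocks derived from the BCLK (memory, for instance) rise along with it, which is part of why this is riskier than a multiplier bump.

```python
def bclk_needed_mhz(target_mhz, max_multiplier):
    """Invert core = BCLK * multiplier to find the BCLK a capped multiplier requires."""
    return target_mhz / max_multiplier


def bclk_increase_pct(target_mhz, max_multiplier, stock_bclk_mhz):
    """How far (in percent) the BCLK must rise above stock to hit the target."""
    return (bclk_needed_mhz(target_mhz, max_multiplier) / stock_bclk_mhz - 1) * 100


# Hypothetical target: 3.6GHz on a chip whose multiplier tops out at 22x.
needed = bclk_needed_mhz(3600, 22)
print(f"BCLK needed: {needed:.1f} MHz "
      f"(+{bclk_increase_pct(3600, 22, 133.82):.1f}% over a 133.82MHz stock BCLK)")
```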

As you modify your multiplier (do it in small steps, and not until you've read our disclaimers below), you'll see the core speed increase. At some point in this process, an overly ambitious multiplier (and/or base clock) will likely result in a system freeze or thermal shutdown. There are a few reasons for this, the most obvious being heat. A faster CPU works harder and generates more heat, requiring a larger or more capable CPU cooler/heatsink (liquid cooling or, in extreme cases, liquid nitrogen should do the trick - because we all have tanks of that lying around).

The thermal shutdowns are to protect the CPU and should not be catastrophic. Just make sure you don't get too ambitious -- adjust everything in steps, whether that's the voltage, BCLK, or multiplier. Do it all in small increments.

Pushing the Limits of the Multipliers/BCLK with Increased Voltage

How, then, do you push past the unstable multipliers and base clocks? Well, once you've discovered the threshold at which your system becomes unreliable, you can tweak voltage settings to increase stability and potentially attain higher core clock frequencies. Don't push it too hard, though, for that could result in premature death of the chip.

Voltage is the next driving factor in our guide: processors aren't fabricated equally and will vary in reliability under high multipliers and high voltages. A CPU can fail catastrophically when placed under a load it cannot sustain, so please overclock responsibly. That said, raising a processor's vCore voltage can stabilize it at higher frequencies, permitting still higher multipliers (unless you've hit the cap, in which case your die must be ultra-stable!).

Tweak the multiplier first, one step at a time, and locate a reliable core speed. Determine where the threshold for failure is; you will need to run stability tests (below) to properly locate it. Simply using the system normally will not reveal potential underlying issues.

After running stability tests (again, below), if necessary, increase voltage to accommodate your new CPU frequency/speed.
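The multiplier-first, voltage-second procedure described above can be expressed as a loop. This is a hypothetical sketch: `is_stable(multiplier, vcore_mv)` stands in for a full stress-test run (hours of Prime95, not a quick function call), voltages are in millivolts to avoid floating-point drift, and the 10mV step and 1300mV ceiling are placeholder values, not recommendations for any particular CPU.

```python
from typing import Callable, Tuple


def find_stable_overclock(
    is_stable: Callable[[int, int], bool],
    start_mult: int,
    max_mult: int,
    start_vcore_mv: int,
    vcore_step_mv: int = 10,
    vcore_limit_mv: int = 1300,
) -> Tuple[int, int]:
    """Raise the multiplier one step at a time; add vCore in small steps only when needed.

    Assumes (start_mult, start_vcore_mv) is already known to be stable.
    Returns the last (multiplier, vcore_mv) pair that passed testing.
    """
    best = (start_mult, start_vcore_mv)
    mult, vcore = start_mult, start_vcore_mv
    while mult < max_mult:
        mult += 1
        # Each is_stable() call stands in for hours of stress testing.
        while not is_stable(mult, vcore):
            vcore += vcore_step_mv
            if vcore > vcore_limit_mv:
                # Out of voltage headroom: fall back to the last stable setting.
                return best
        best = (mult, vcore)
    return best


def toy_chip(mult: int, vcore_mv: int) -> bool:
    # Made-up chip: needs 1100mV up to 30x, then 20mV more per multiplier step.
    return vcore_mv >= 1100 + 20 * max(0, mult - 30)


print(find_stable_overclock(toy_chip, start_mult=30, max_mult=45, start_vcore_mv=1100))
# (40, 1300)
```

The toy chip hits the voltage ceiling at 41x, so the loop settles on the last setting that passed, which is the same "back off to the last stable step" habit the guide recommends.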

Before we move on, note that you should also increase the RAM's frequency along with the CPU's -- if the RAM can't keep up with the CPU, the CPU will waste cycles waiting on memory and you'll lose much of your gain.

Overclocking Limitations

As briefly covered above, and explained with great care in this 2006 Overclockers' Club post, there are two tangible limitations when working with CPUs: Heat and voltage.

Too much heat will result in instability and thermal shutdowns, and can potentially damage the chip (although modern motherboards and CPUs will often shut the system down before major damage occurs). Too much voltage, meanwhile, generates more heat and, by pushing more power through the chip, can trigger a catastrophic, unrecoverable failure in the CPU, similar to what might be seen from a large electrostatic discharge by the user.

Provided adequate cooling is present, you should be able to overclock to a commendable level without overdosing on voltage.
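Why does voltage hit so hard? A common first-order model for the dynamic (switching) power of a CMOS chip is P ≈ α·C·V²·f, so power, and therefore heat, grows with the square of voltage but only linearly with frequency. The sketch below applies that ratio to the i7-930 clocks from earlier with hypothetical vCore values of 1.20V and 1.30V; it ignores leakage power, which also rises with voltage and temperature, so treat the result as a rough indication only.

```python
def relative_dynamic_power(v0, f0, v1, f1):
    """Ratio of new to old dynamic power under the P ~ alpha*C*V^2*f model.

    alpha (activity) and C (switched capacitance) are assumed unchanged,
    so they cancel out of the ratio.
    """
    return (v1 / v0) ** 2 * (f1 / f0)


# i7-930 clocks from earlier; the 1.20V -> 1.30V vCore values are hypothetical.
ratio = relative_dynamic_power(1.20, 2944.04, 1.30, 3190)
clock_gain = (3190 / 2944.04 - 1) * 100
print(f"+{clock_gain:.1f}% clock -> +{(ratio - 1) * 100:.1f}% dynamic power")
```

Under this model, a roughly 8% clock bump paired with a 0.1V increase costs about 27% more dynamic power, which is why adequate cooling matters more than raw voltage.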

Stability and Testing

You won't always be able to notice instability immediately after overclocking -- the best way to trigger a contained failure is with benchmarking and stress-testing software. We've covered this in depth in a previous guide, so I'll direct you there for further details. The tools you're most interested in (covered in the linked guide) are CPU-Z, Prime95, and Memtest86+. FurMark is an excellent choice for testing GPU stability.

Follow the CPU section (using Prime95 with maximum torture threads) for best results. You'll probably want to run maximum torture threads on both Small FFTs and Large FFTs (as separate test runs) for ~4 hours each. If you have the time, run each for 8 hours overnight, then run Memtest86+ or the Blend test for a few hours (separately).

Useful Tools

Some quick links to overclocking tools and utilities that we find useful:

CPU Tools:

- CPU-Z: http://www.cpuid.com/softwares/cpu-z.html

- Prime95: http://www.mersenne.org/freesoft/

- CoreTemp: http://www.alcpu.com/CoreTemp/

GPU Tools:

- GPU-Z: http://www.techpowerup.com/gpuz/

- Unigine benchmarks: http://unigine.com/

- MSI Afterburner: http://event.msi.com/vga/afterburner/download.htm

- EVGA Precision: http://www.evga.com/precision/

Final Thoughts, Warnings, and Disclaimers

No matter the CPU, overclocking will effectively void your warranty. Please keep this in mind: You will not be able to request a warranty replacement in the event you have triggered an unrecoverable failure in your CPU.

Please monitor your temperatures closely when overclocking. As good as modern software and firmware can be, they're not always 100% reliable, and they can't always trigger a thermal shutdown before damage is done. We recommend using hardware thermal probes for the most accurate readouts, though software is also available for these purposes (again, discussed in the benchmarking guide - CoreTemp will be one of the most important tools).
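If you want a software safety net alongside hardware probes, a polling loop like the hypothetical sketch below flags any reading at or above a threshold. The `read_temps` callable is an assumption: on Linux it could wrap `psutil.sensors_temperatures()` from the third-party psutil library, and on Windows you would read from a tool like CoreTemp. The 80°C limit in the example is a placeholder; check your CPU's specifications for its real limits.

```python
import time
from typing import Callable, Dict, List, Tuple


def watch_temperatures(
    read_temps: Callable[[], Dict[str, float]],
    limit_c: float,
    samples: int,
    interval_s: float = 1.0,
) -> List[Tuple[int, str, float]]:
    """Poll read_temps() `samples` times; return (sample, sensor, temp) for readings >= limit_c."""
    alerts = []
    for i in range(samples):
        for sensor, temp in read_temps().items():
            if temp >= limit_c:
                alerts.append((i, sensor, temp))
        if i < samples - 1:
            time.sleep(interval_s)
    return alerts


# Canned readings stand in for a real sensor source in this example.
fake_readings = iter([
    {"core0": 65.0, "core1": 67.0},
    {"core0": 79.0, "core1": 83.0},
])
alerts = watch_temperatures(lambda: next(fake_readings), limit_c=80.0, samples=2, interval_s=0)
print(alerts)  # [(1, 'core1', 83.0)]
```

In practice you would stop the stress test (or back off your settings) as soon as the list is non-empty rather than waiting for the firmware to intervene.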

If you have any questions at all - any - please post them in the comments below or request in-depth assistance on our forums. Overclocking is a fun endeavor and will educate you on system stability, pushing your build to its limits, and furthering your success as a system builder. Given the right tools and enough patience, you shouldn't be afraid of a total collapse of your cores -- ask us for CPU-specific advice, follow the above examples, and proceed in small increments and you'll be just fine.

Enjoy!

Have feedback for us or think we missed something important? Comment and I'll have it added.

- Steve "Lelldorianx" Burke.

Thanks to Kingston, Intel, and EVGA for providing additional clarification on these items.